02. Neural Network Classification with TensorFlow¶

Okay, we've seen how to deal with a regression problem in TensorFlow, let's look at how we can approach a classification problem.

A classification problem involves predicting whether something is one thing or another.

For example, you might want to:

- Predict whether or not someone has heart disease based on their health parameters. This is called binary classification since there are only two options.

- Decide whether a photo of is of food, a person or a dog. This is called multi-class classification since there are more than two options.

- Predict what categories should be assigned to a Wikipedia article. This is called multi-label classification since a single article could have more than one category assigned.

In this notebook, we're going to work through a number of different classification problems with TensorFlow. In other words, taking a set of inputs and predicting what class those set of inputs belong to.

What we're going to cover¶

Specifically, we're going to go through doing the following with TensorFlow:

- Architecture of a classification model

- Input shapes and output shapes

X: features/data (inputs)y: labels (outputs)- "What class do the inputs belong to?"

- Creating custom data to view and fit

- Steps in modelling for binary and mutliclass classification

- Creating a model

- Compiling a model

- Defining a loss function

- Setting up an optimizer

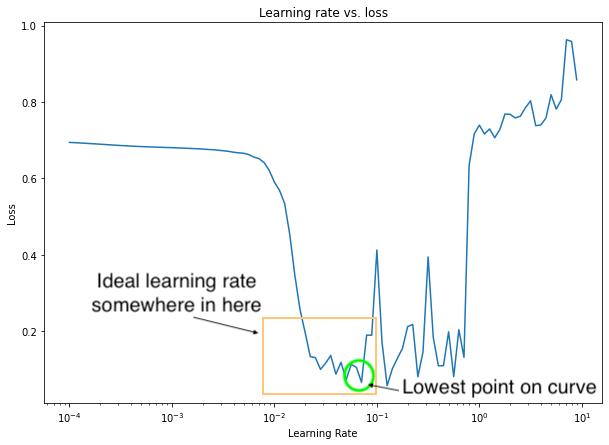

- Finding the best learning rate

- Creating evaluation metrics

- Fitting a model (getting it to find patterns in our data)

- Improving a model

- The power of non-linearity

- Evaluating classification models

- Visualizng the model ("visualize, visualize, visualize")

- Looking at training curves

- Compare predictions to ground truth (using our evaluation metrics)

How you can use this notebook¶

You can read through the descriptions and the code (it should all run, except for the cells which error on purpose), but there's a better option.

Write all of the code yourself.

Yes. I'm serious. Create a new notebook, and rewrite each line by yourself. Investigate it, see if you can break it, why does it break?

You don't have to write the text descriptions but writing the code yourself is a great way to get hands-on experience.

Don't worry if you make mistakes, we all do. The way to get better and make less mistakes is to write more code.

Typical architecture of a classification neural network¶

The word typical is on purpose.

Because the architecture of a classification neural network can widely vary depending on the problem you're working on.

However, there are some fundamentals all deep neural networks contain:

- An input layer.

- Some hidden layers.

- An output layer.

Much of the rest is up to the data analyst creating the model.

The following are some standard values you'll often use in your classification neural networks.

| Hyperparameter | Binary Classification | Multiclass classification |

|---|---|---|

| Input layer shape | Same as number of features (e.g. 5 for age, sex, height, weight, smoking status in heart disease prediction) | Same as binary classification |

| Hidden layer(s) | Problem specific, minimum = 1, maximum = unlimited | Same as binary classification |

| Neurons per hidden layer | Problem specific, generally 10 to 100 | Same as binary classification |

| Output layer shape | 1 (one class or the other) | 1 per class (e.g. 3 for food, person or dog photo) |

| Hidden activation | Usually ReLU (rectified linear unit) | Same as binary classification |

| Output activation | Sigmoid | Softmax |

| Loss function | Cross entropy (tf.keras.losses.BinaryCrossentropy in TensorFlow) |

Cross entropy (tf.keras.losses.CategoricalCrossentropy in TensorFlow) |

| Optimizer | SGD (stochastic gradient descent), Adam | Same as binary classification |

Table 1: Typical architecture of a classification network. Source: Adapted from page 295 of Hands-On Machine Learning with Scikit-Learn, Keras & TensorFlow Book by Aurélien Géron

Don't worry if not much of the above makes sense right now, we'll get plenty of experience as we go through this notebook.

Let's start by importing TensorFlow as the common alias tf. For this notebook, make sure you're using version 2.x+.

import tensorflow as tf

print(tf.__version__)

import datetime

print(f"Notebook last run (end-to-end): {datetime.datetime.now()}")

2.13.0 Notebook last run (end-to-end): 2023-10-12 04:07:12.774646

Creating data to view and fit¶

We could start by importing a classification dataset but let's practice making some of our own classification data.

🔑 Note: It's a common practice to get you and model you build working on a toy (or simple) dataset before moving to your actual problem. Treat it as a rehersal experiment before the actual experiment(s).

Since classification is predicting whether something is one thing or another, let's make some data to reflect that.

To do so, we'll use Scikit-Learn's make_circles() function.

from sklearn.datasets import make_circles

# Make 1000 examples

n_samples = 1000

# Create circles

X, y = make_circles(n_samples,

noise=0.03,

random_state=42)

Wonderful, now we've created some data, let's look at the features (X) and labels (y).

# Check out the features

X

array([[ 0.75424625, 0.23148074],

[-0.75615888, 0.15325888],

[-0.81539193, 0.17328203],

...,

[-0.13690036, -0.81001183],

[ 0.67036156, -0.76750154],

[ 0.28105665, 0.96382443]])

# See the first 10 labels

y[:10]

array([1, 1, 1, 1, 0, 1, 1, 1, 1, 0])

Okay, we've seen some of our data and labels, how about we move towards visualizing?

🔑 Note: One important step of starting any kind of machine learning project is to become one with the data. And one of the best ways to do this is to visualize the data you're working with as much as possible. The data explorer's motto is "visualize, visualize, visualize".

We'll start with a DataFrame.

# Make dataframe of features and labels

import pandas as pd

circles = pd.DataFrame({"X0":X[:, 0], "X1":X[:, 1], "label":y})

circles.head()

| X0 | X1 | label | |

|---|---|---|---|

| 0 | 0.754246 | 0.231481 | 1 |

| 1 | -0.756159 | 0.153259 | 1 |

| 2 | -0.815392 | 0.173282 | 1 |

| 3 | -0.393731 | 0.692883 | 1 |

| 4 | 0.442208 | -0.896723 | 0 |

What kind of labels are we dealing with?

# Check out the different labels

circles.label.value_counts()

1 500 0 500 Name: label, dtype: int64

Alright, looks like we're dealing with a binary classification problem. It's binary because there are only two labels (0 or 1).

If there were more label options (e.g. 0, 1, 2, 3 or 4), it would be called multiclass classification.

Let's take our visualization a step further and plot our data.

# Visualize with a plot

import matplotlib.pyplot as plt

plt.scatter(X[:, 0], X[:, 1], c=y, cmap=plt.cm.RdYlBu);

Nice! From the plot, can you guess what kind of model we might want to build?

How about we try and build one to classify blue or red dots? As in, a model which is able to distinguish blue from red dots.

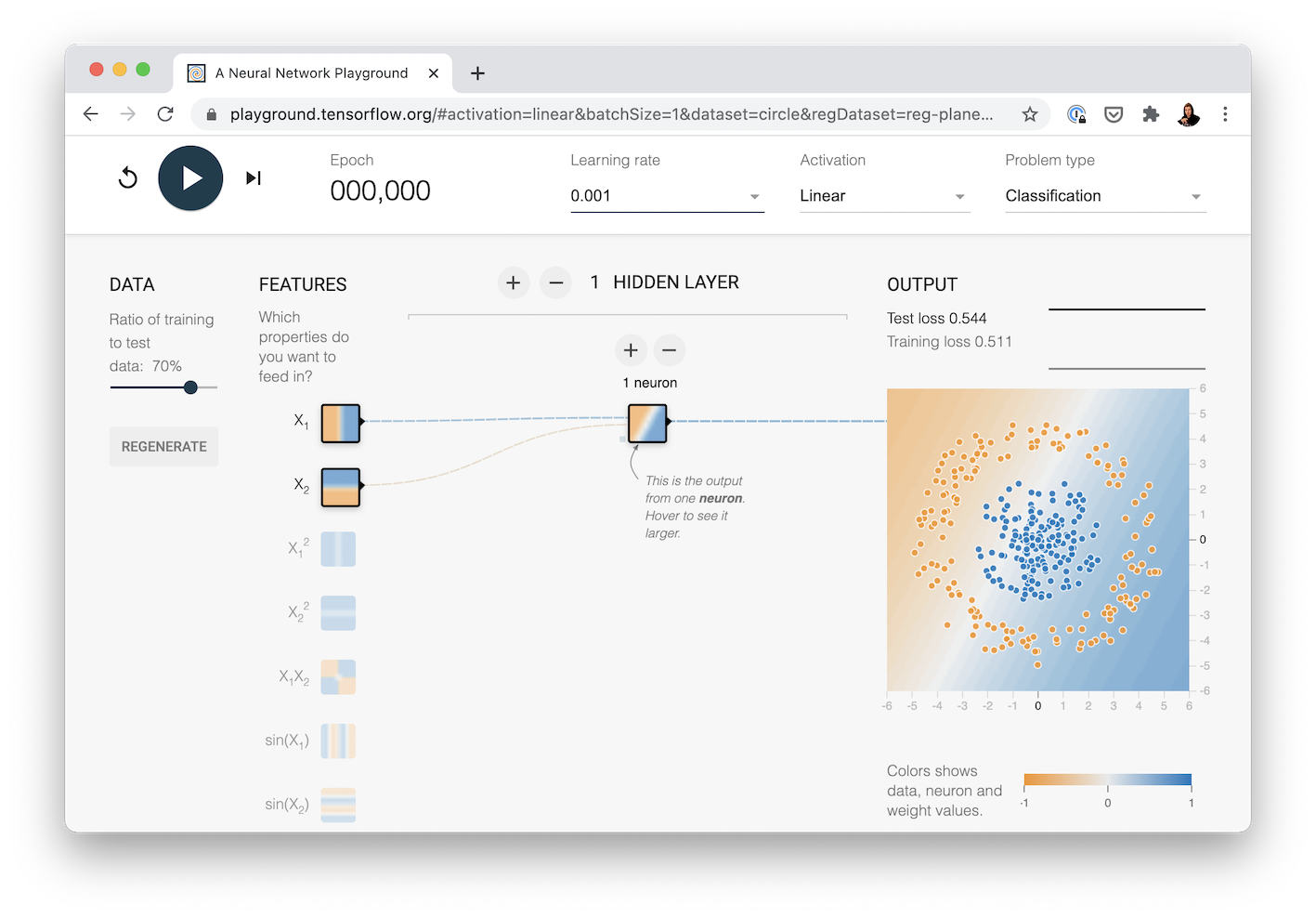

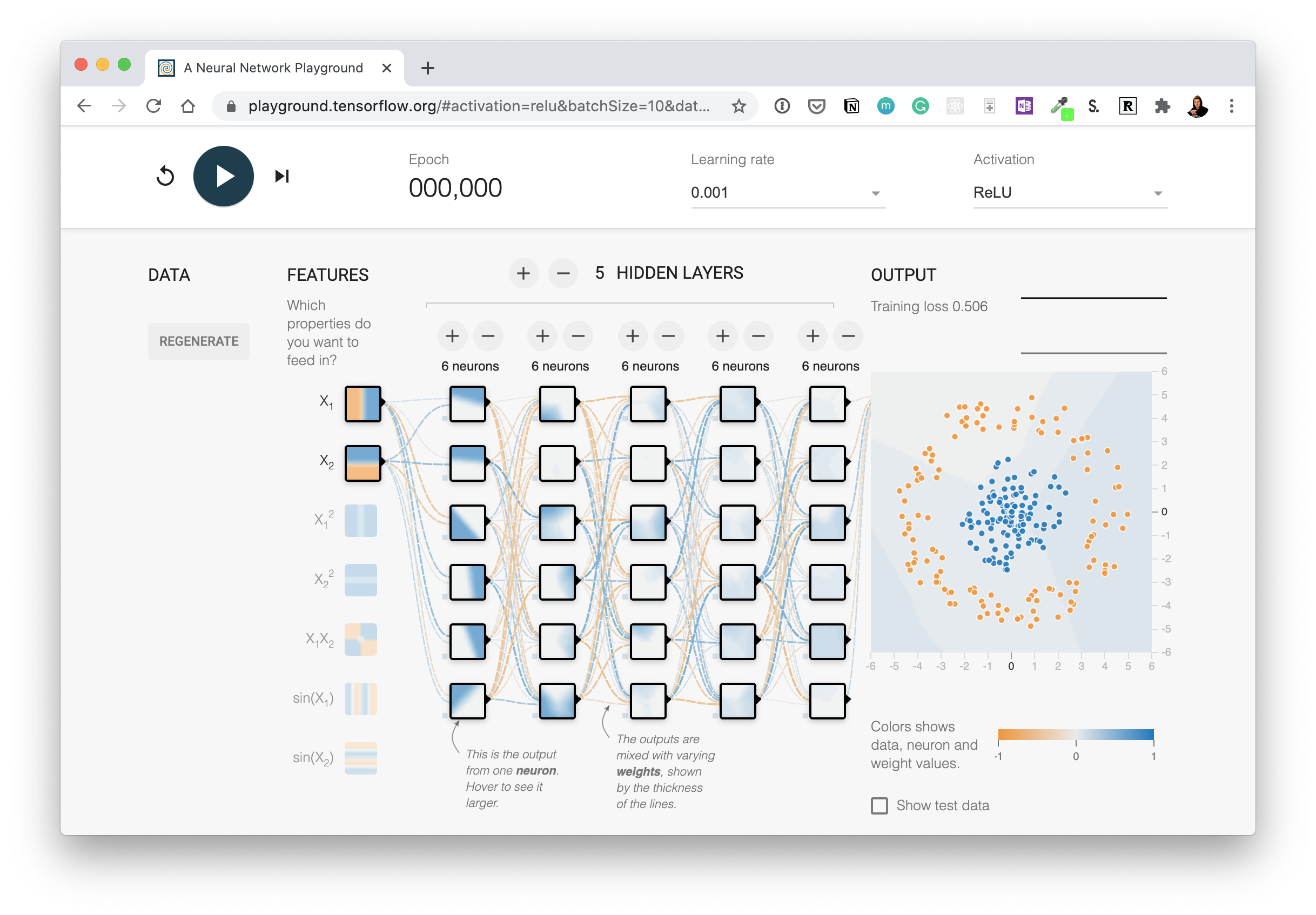

🛠 Practice: Before pushing forward, you might want to spend 10 minutes playing around with the TensorFlow Playground. Try adjusting the different hyperparameters you see and click play to see a neural network train. I think you'll find the data very similar to what we've just created.

Input and output shapes¶

One of the most common issues you'll run into when building neural networks is shape mismatches.

More specifically, the shape of the input data and the shape of the output data.

In our case, we want to input X and get our model to predict y.

So let's check out the shapes of X and y.

# Check the shapes of our features and labels

X.shape, y.shape

((1000, 2), (1000,))

Hmm, where do these numbers come from?

# Check how many samples we have

len(X), len(y)

(1000, 1000)

So we've got as many X values as we do y values, that makes sense.

Let's check out one example of each.

# View the first example of features and labels

X[0], y[0]

(array([0.75424625, 0.23148074]), 1)

Alright, so we've got two X features which lead to one y value.

This means our neural network input shape will has to accept a tensor with at least one dimension being two and output a tensor with at least one value.

🤔 Note:

yhaving a shape of (1000,) can seem confusing. However, this is because allyvalues are actually scalars (single values) and therefore don't have a dimension. For now, think of your output shape as being at least the same value as one example ofy(in our case, the output from our neural network has to be at least one value).

Steps in modelling¶

Now we know what data we have as well as the input and output shapes, let's see how we'd build a neural network to model it.

In TensorFlow, there are typically 3 fundamental steps to creating and training a model.

- Creating a model - piece together the layers of a neural network yourself (using the functional or sequential API) or import a previously built model (known as transfer learning).

- Compiling a model - defining how a model's performance should be measured (loss/metrics) as well as defining how it should improve (optimizer).

- Fitting a model - letting the model try to find patterns in the data (how does

Xget toy).

Let's see these in action using the Sequential API to build a model for our regression data. And then we'll step through each.

# Set random seed

tf.random.set_seed(42)

# 1. Create the model using the Sequential API

model_1 = tf.keras.Sequential([

tf.keras.layers.Dense(1)

])

# 2. Compile the model

model_1.compile(loss=tf.keras.losses.BinaryCrossentropy(), # binary since we are working with 2 clases (0 & 1)

optimizer=tf.keras.optimizers.SGD(),

metrics=['accuracy'])

# 3. Fit the model

model_1.fit(X, y, epochs=5)

Epoch 1/5 32/32 [==============================] - 5s 3ms/step - loss: 5.9177 - accuracy: 0.4800 Epoch 2/5 32/32 [==============================] - 0s 3ms/step - loss: 5.1146 - accuracy: 0.4620 Epoch 3/5 32/32 [==============================] - 0s 3ms/step - loss: 4.6022 - accuracy: 0.4720 Epoch 4/5 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 5/5 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000

<keras.src.callbacks.History at 0x7a25080c58d0>

Looking at the accuracy metric, our model performs poorly (50% accuracy on a binary classification problem is the equivalent of guessing), but what if we trained it for longer?

# Train our model for longer (more chances to look at the data)

model_1.fit(X, y, epochs=200, verbose=0) # set verbose=0 to remove training updates

model_1.evaluate(X, y)

32/32 [==============================] - 0s 2ms/step - loss: 7.7125 - accuracy: 0.5000

[7.712474346160889, 0.5]

Even after 200 passes of the data, it's still performing as if it's guessing.

What if we added an extra layer and trained for a little longer?

# Set random seed

tf.random.set_seed(42)

# 1. Create the model (same as model_1 but with an extra layer)

model_2 = tf.keras.Sequential([

tf.keras.layers.Dense(1), # add an extra layer

tf.keras.layers.Dense(1)

])

# 2. Compile the model

model_2.compile(loss=tf.keras.losses.BinaryCrossentropy(),

optimizer=tf.keras.optimizers.SGD(),

metrics=['accuracy'])

# 3. Fit the model

model_2.fit(X, y, epochs=100, verbose=0) # set verbose=0 to make the output print less

<keras.src.callbacks.History at 0x7a24b4b323e0>

# Evaluate the model

model_2.evaluate(X, y)

32/32 [==============================] - 0s 2ms/step - loss: 0.6934 - accuracy: 0.5000

[0.6933949589729309, 0.5]

Still not even as good as guessing (~50% accuracy)... hmm...?

Let's remind ourselves of a couple more ways we can use to improve our models.

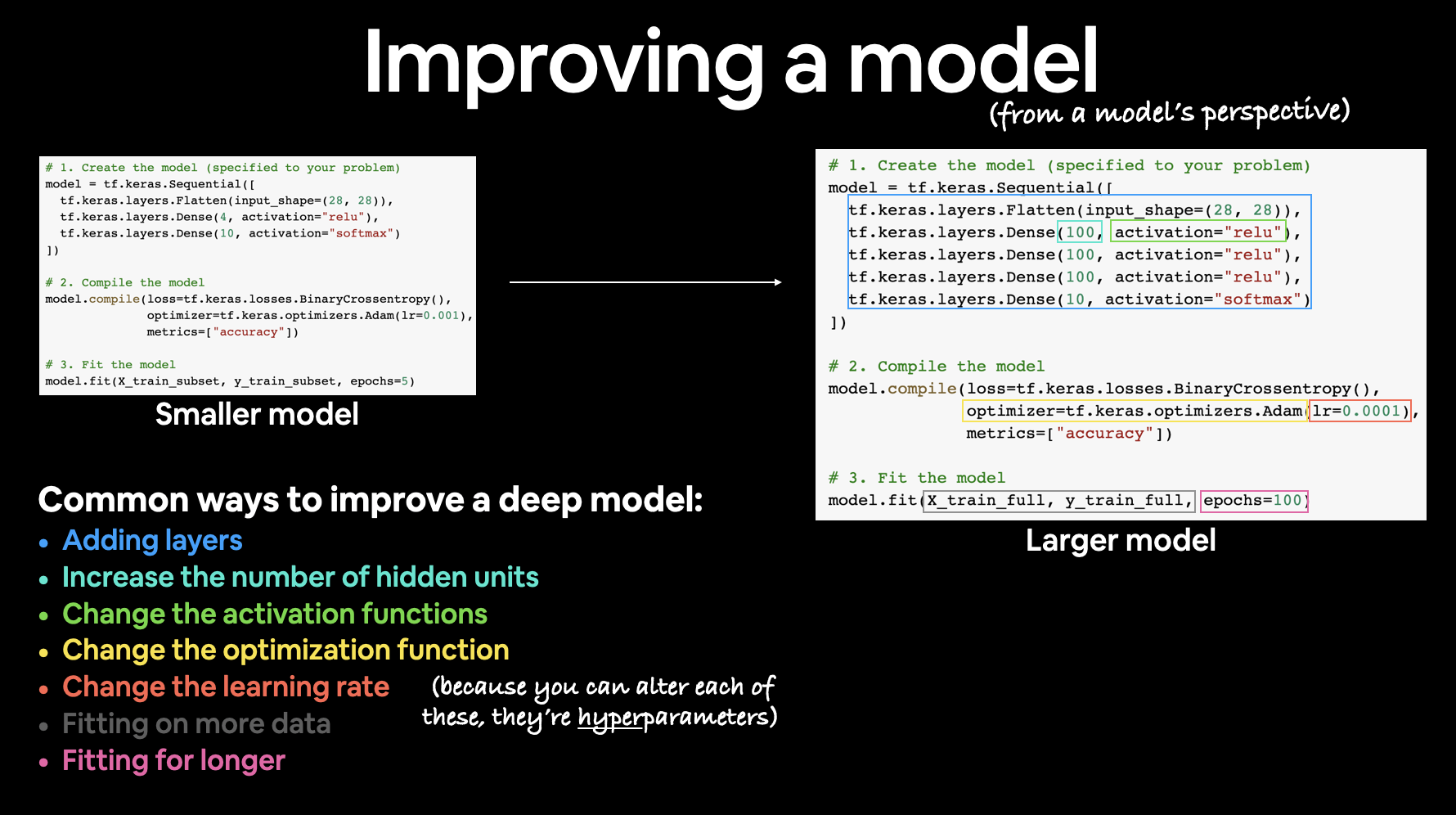

Improving a model¶

To improve our model, we can alter almost every part of the 3 steps we went through before.

- Creating a model - here you might want to add more layers, increase the number of hidden units (also called neurons) within each layer, change the activation functions of each layer.

- Compiling a model - you might want to choose a different optimization function (such as the Adam optimizer, which is usually pretty good for many problems) or perhaps change the learning rate of the optimization function.

- Fitting a model - perhaps you could fit a model for more epochs (leave it training for longer).

There are many different ways to potentially improve a neural network. Some of the most common include: increasing the number of layers (making the network deeper), increasing the number of hidden units (making the network wider) and changing the learning rate. Because these values are all human-changeable, they're referred to as hyperparameters) and the practice of trying to find the best hyperparameters is referred to as hyperparameter tuning.

There are many different ways to potentially improve a neural network. Some of the most common include: increasing the number of layers (making the network deeper), increasing the number of hidden units (making the network wider) and changing the learning rate. Because these values are all human-changeable, they're referred to as hyperparameters) and the practice of trying to find the best hyperparameters is referred to as hyperparameter tuning.

How about we try adding more neurons, an extra layer and our friend the Adam optimizer?

Surely doing this will result in predictions better than guessing...

Note: The following message (below this one) can be ignored if you're running TensorFlow 2.8.0+, the error seems to have been fixed.

Note: If you're using TensorFlow 2.7.0+ (but not 2.8.0+) the original code from the following cells may have caused some errors. They've since been updated to fix those errors. You can see explanations on what happened at the following resources:

# Set random seed

tf.random.set_seed(42)

# 1. Create the model (this time 3 layers)

model_3 = tf.keras.Sequential([

# Before TensorFlow 2.7.0

# tf.keras.layers.Dense(100), # add 100 dense neurons

# With TensorFlow 2.7.0

# tf.keras.layers.Dense(100, input_shape=(None, 1)), # add 100 dense neurons

## After TensorFlow 2.8.0 ##

tf.keras.layers.Dense(100), # add 100 dense neurons

tf.keras.layers.Dense(10), # add another layer with 10 neurons

tf.keras.layers.Dense(1)

])

# 2. Compile the model

model_3.compile(loss=tf.keras.losses.BinaryCrossentropy(),

optimizer=tf.keras.optimizers.Adam(), # use Adam instead of SGD

metrics=['accuracy'])

# 3. Fit the model

model_3.fit(X, y, epochs=100, verbose=1) # fit for 100 passes of the data

Epoch 1/100 32/32 [==============================] - 2s 3ms/step - loss: 3.5433 - accuracy: 0.4520 Epoch 2/100 32/32 [==============================] - 0s 3ms/step - loss: 1.0533 - accuracy: 0.4910 Epoch 3/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7210 - accuracy: 0.5000 Epoch 4/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6998 - accuracy: 0.5000 Epoch 5/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6952 - accuracy: 0.4830 Epoch 6/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6941 - accuracy: 0.4910 Epoch 7/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6938 - accuracy: 0.4940 Epoch 8/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6945 - accuracy: 0.4990 Epoch 9/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6943 - accuracy: 0.4880 Epoch 10/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6945 - accuracy: 0.4550 Epoch 11/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6954 - accuracy: 0.4490 Epoch 12/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6946 - accuracy: 0.4860 Epoch 13/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6958 - accuracy: 0.4920 Epoch 14/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6949 - accuracy: 0.5150 Epoch 15/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6961 - accuracy: 0.4720 Epoch 16/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6943 - accuracy: 0.4880 Epoch 17/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6983 - accuracy: 0.4930 Epoch 18/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6945 - accuracy: 0.4730 Epoch 19/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6954 - accuracy: 0.5030 Epoch 20/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6957 - accuracy: 0.4600 Epoch 21/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6956 - accuracy: 0.4790 Epoch 22/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6948 - accuracy: 0.4440 Epoch 23/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6943 - accuracy: 0.4850 Epoch 24/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6962 - accuracy: 0.4690 Epoch 25/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6973 - accuracy: 0.5070 Epoch 26/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6978 - accuracy: 0.4870 Epoch 27/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6965 - accuracy: 0.5010 Epoch 28/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6947 - accuracy: 0.4690 Epoch 29/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6974 - accuracy: 0.4860 Epoch 30/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6997 - accuracy: 0.4870 Epoch 31/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6947 - accuracy: 0.5060 Epoch 32/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6965 - accuracy: 0.4710 Epoch 33/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6955 - accuracy: 0.4590 Epoch 34/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6972 - accuracy: 0.4770 Epoch 35/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6961 - accuracy: 0.5020 Epoch 36/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6946 - accuracy: 0.4680 Epoch 37/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6952 - accuracy: 0.4980 Epoch 38/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6976 - accuracy: 0.4930 Epoch 39/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6950 - accuracy: 0.4750 Epoch 40/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6966 - accuracy: 0.4970 Epoch 41/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6978 - accuracy: 0.4840 Epoch 42/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6994 - accuracy: 0.4770 Epoch 43/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6954 - accuracy: 0.5060 Epoch 44/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6982 - accuracy: 0.4900 Epoch 45/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6960 - accuracy: 0.5040 Epoch 46/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6948 - accuracy: 0.4810 Epoch 47/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6974 - accuracy: 0.5120 Epoch 48/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6967 - accuracy: 0.4930 Epoch 49/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6975 - accuracy: 0.4820 Epoch 50/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6970 - accuracy: 0.4640 Epoch 51/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6977 - accuracy: 0.4810 Epoch 52/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6963 - accuracy: 0.5080 Epoch 53/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6969 - accuracy: 0.5070 Epoch 54/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6997 - accuracy: 0.5120 Epoch 55/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6952 - accuracy: 0.5180 Epoch 56/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6965 - accuracy: 0.4890 Epoch 57/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6980 - accuracy: 0.4730 Epoch 58/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6965 - accuracy: 0.5080 Epoch 59/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7003 - accuracy: 0.4970 Epoch 60/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7009 - accuracy: 0.4930 Epoch 61/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6997 - accuracy: 0.4710 Epoch 62/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6965 - accuracy: 0.4950 Epoch 63/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6948 - accuracy: 0.4840 Epoch 64/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6961 - accuracy: 0.4920 Epoch 65/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6993 - accuracy: 0.4830 Epoch 66/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6966 - accuracy: 0.4980 Epoch 67/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6977 - accuracy: 0.4490 Epoch 68/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6958 - accuracy: 0.5060 Epoch 69/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6949 - accuracy: 0.5280 Epoch 70/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6987 - accuracy: 0.4720 Epoch 71/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6976 - accuracy: 0.4720 Epoch 72/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6965 - accuracy: 0.5010 Epoch 73/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6960 - accuracy: 0.4890 Epoch 74/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6958 - accuracy: 0.5050 Epoch 75/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6960 - accuracy: 0.5070 Epoch 76/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6980 - accuracy: 0.4810 Epoch 77/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6972 - accuracy: 0.4990 Epoch 78/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6972 - accuracy: 0.4710 Epoch 79/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7007 - accuracy: 0.5130 Epoch 80/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6978 - accuracy: 0.5000 Epoch 81/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6975 - accuracy: 0.5020 Epoch 82/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6966 - accuracy: 0.4880 Epoch 83/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7003 - accuracy: 0.4510 Epoch 84/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6964 - accuracy: 0.5010 Epoch 85/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6968 - accuracy: 0.4660 Epoch 86/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7005 - accuracy: 0.4940 Epoch 87/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6976 - accuracy: 0.4540 Epoch 88/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6981 - accuracy: 0.4570 Epoch 89/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6982 - accuracy: 0.4740 Epoch 90/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6978 - accuracy: 0.4560 Epoch 91/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6980 - accuracy: 0.4880 Epoch 92/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6984 - accuracy: 0.4730 Epoch 93/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6982 - accuracy: 0.4710 Epoch 94/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7001 - accuracy: 0.4790 Epoch 95/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6972 - accuracy: 0.4560 Epoch 96/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6979 - accuracy: 0.4860 Epoch 97/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6972 - accuracy: 0.4580 Epoch 98/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6987 - accuracy: 0.4800 Epoch 99/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6975 - accuracy: 0.5080 Epoch 100/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6967 - accuracy: 0.4810

<keras.src.callbacks.History at 0x7a249bd86620>

Still!

We've pulled out a few tricks but our model isn't even doing better than guessing.

Let's make some visualizations to see what's happening.

🔑 Note: Whenever your model is performing strangely or there's something going on with your data you're not quite sure of, remember these three words: visualize, visualize, visualize. Inspect your data, inspect your model, inpsect your model's predictions.

To visualize our model's predictions we're going to create a function plot_decision_boundary() which:

- Takes in a trained model, features (

X) and labels (y). - Creates a meshgrid of the different

Xvalues. - Makes predictions across the meshgrid.

- Plots the predictions as well as a line between the different zones (where each unique class falls).

If this sounds confusing, let's see it in code and then see the output.

🔑 Note: If you're ever unsure of what a function does, try unraveling it and writing it line by line for yourself to see what it does. Break it into small parts and see what each part outputs.

import numpy as np

def plot_decision_boundary(model, X, y):

"""

Plots the decision boundary created by a model predicting on X.

This function has been adapted from two phenomenal resources:

1. CS231n - https://cs231n.github.io/neural-networks-case-study/

2. Made with ML basics - https://github.com/GokuMohandas/MadeWithML/blob/main/notebooks/08_Neural_Networks.ipynb

"""

# Define the axis boundaries of the plot and create a meshgrid

x_min, x_max = X[:, 0].min() - 0.1, X[:, 0].max() + 0.1

y_min, y_max = X[:, 1].min() - 0.1, X[:, 1].max() + 0.1

xx, yy = np.meshgrid(np.linspace(x_min, x_max, 100),

np.linspace(y_min, y_max, 100))

# Create X values (we're going to predict on all of these)

x_in = np.c_[xx.ravel(), yy.ravel()] # stack 2D arrays together: https://numpy.org/devdocs/reference/generated/numpy.c_.html

# Make predictions using the trained model

y_pred = model.predict(x_in)

# Check for multi-class

if model.output_shape[-1] > 1: # checks the final dimension of the model's output shape, if this is > (greater than) 1, it's multi-class

print("doing multiclass classification...")

# We have to reshape our predictions to get them ready for plotting

y_pred = np.argmax(y_pred, axis=1).reshape(xx.shape)

else:

print("doing binary classifcation...")

y_pred = np.round(np.max(y_pred, axis=1)).reshape(xx.shape)

# Plot decision boundary

plt.contourf(xx, yy, y_pred, cmap=plt.cm.RdYlBu, alpha=0.7)

plt.scatter(X[:, 0], X[:, 1], c=y, s=40, cmap=plt.cm.RdYlBu)

plt.xlim(xx.min(), xx.max())

plt.ylim(yy.min(), yy.max())

Now we've got a function to plot our model's decision boundary (the cut off point its making between red and blue dots), let's try it out.

# Check out the predictions our model is making

plot_decision_boundary(model_3, X, y)

313/313 [==============================] - 0s 1ms/step doing binary classifcation...

Looks like our model is trying to draw a straight line through the data.

What's wrong with doing this?

The main issue is our data isn't separable by a straight line.

In a regression problem, our model might work. In fact, let's try it.

# Set random seed

tf.random.set_seed(42)

# Create some regression data

X_regression = np.arange(0, 1000, 5)

y_regression = np.arange(100, 1100, 5)

# Split it into training and test sets

X_reg_train = X_regression[:150]

X_reg_test = X_regression[150:]

y_reg_train = y_regression[:150]

y_reg_test = y_regression[150:]

# Fit our model to the data

# Note: Before TensorFlow 2.7.0, this line would work

# model_3.fit(X_reg_train, y_reg_train, epochs=100)

# After TensorFlow 2.7.0, see here for more: https://github.com/mrdbourke/tensorflow-deep-learning/discussions/278

model_3.fit(tf.expand_dims(X_reg_train, axis=-1),

y_reg_train,

epochs=100)

Epoch 1/100

--------------------------------------------------------------------------- ValueError Traceback (most recent call last) <ipython-input-18-2f683d96fa34> in <cell line: 19>() 17 18 # After TensorFlow 2.7.0, see here for more: https://github.com/mrdbourke/tensorflow-deep-learning/discussions/278 ---> 19 model_3.fit(tf.expand_dims(X_reg_train, axis=-1), 20 y_reg_train, 21 epochs=100) /usr/local/lib/python3.10/dist-packages/keras/src/utils/traceback_utils.py in error_handler(*args, **kwargs) 68 # To get the full stack trace, call: 69 # `tf.debugging.disable_traceback_filtering()` ---> 70 raise e.with_traceback(filtered_tb) from None 71 finally: 72 del filtered_tb /usr/local/lib/python3.10/dist-packages/keras/src/engine/training.py in tf__train_function(iterator) 13 try: 14 do_return = True ---> 15 retval_ = ag__.converted_call(ag__.ld(step_function), (ag__.ld(self), ag__.ld(iterator)), None, fscope) 16 except: 17 do_return = False ValueError: in user code: File "/usr/local/lib/python3.10/dist-packages/keras/src/engine/training.py", line 1338, in train_function * return step_function(self, iterator) File "/usr/local/lib/python3.10/dist-packages/keras/src/engine/training.py", line 1322, in step_function ** outputs = model.distribute_strategy.run(run_step, args=(data,)) File "/usr/local/lib/python3.10/dist-packages/keras/src/engine/training.py", line 1303, in run_step ** outputs = model.train_step(data) File "/usr/local/lib/python3.10/dist-packages/keras/src/engine/training.py", line 1080, in train_step y_pred = self(x, training=True) File "/usr/local/lib/python3.10/dist-packages/keras/src/utils/traceback_utils.py", line 70, in error_handler raise e.with_traceback(filtered_tb) from None File "/usr/local/lib/python3.10/dist-packages/keras/src/engine/input_spec.py", line 280, in assert_input_compatibility raise ValueError( ValueError: Exception encountered when calling layer 'sequential_2' (type Sequential). Input 0 of layer "dense_3" is incompatible with the layer: expected axis -1 of input shape to have value 2, but received input with shape (None, 1) Call arguments received by layer 'sequential_2' (type Sequential): • inputs=tf.Tensor(shape=(None, 1), dtype=int64) • training=True • mask=None

model_3.summary()

Model: "sequential_2"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_3 (Dense) (None, 100) 300

dense_4 (Dense) (None, 10) 1010

dense_5 (Dense) (None, 1) 11

=================================================================

Total params: 1321 (5.16 KB)

Trainable params: 1321 (5.16 KB)

Non-trainable params: 0 (0.00 Byte)

_________________________________________________________________

Oh wait... we compiled our model for a binary classification problem.

No trouble, we can recreate it for a regression problem.

# Setup random seed

tf.random.set_seed(42)

# Recreate the model

model_3 = tf.keras.Sequential([

tf.keras.layers.Dense(100),

tf.keras.layers.Dense(10),

tf.keras.layers.Dense(1)

])

# Change the loss and metrics of our compiled model

model_3.compile(loss=tf.keras.losses.mae, # change the loss function to be regression-specific

optimizer=tf.keras.optimizers.Adam(),

metrics=['mae']) # change the metric to be regression-specific

# Fit the recompiled model

model_3.fit(tf.expand_dims(X_reg_train, axis=-1),

y_reg_train,

epochs=100)

Epoch 1/100 5/5 [==============================] - 1s 5ms/step - loss: 447.6102 - mae: 447.6102 Epoch 2/100 5/5 [==============================] - 0s 4ms/step - loss: 313.3108 - mae: 313.3108 Epoch 3/100 5/5 [==============================] - 0s 4ms/step - loss: 182.3118 - mae: 182.3118 Epoch 4/100 5/5 [==============================] - 0s 4ms/step - loss: 58.6317 - mae: 58.6317 Epoch 5/100 5/5 [==============================] - 0s 4ms/step - loss: 83.0025 - mae: 83.0025 Epoch 6/100 5/5 [==============================] - 0s 4ms/step - loss: 86.4671 - mae: 86.4671 Epoch 7/100 5/5 [==============================] - 0s 3ms/step - loss: 49.6151 - mae: 49.6151 Epoch 8/100 5/5 [==============================] - 0s 4ms/step - loss: 57.7703 - mae: 57.7703 Epoch 9/100 5/5 [==============================] - 0s 3ms/step - loss: 50.1641 - mae: 50.1641 Epoch 10/100 5/5 [==============================] - 0s 4ms/step - loss: 47.7698 - mae: 47.7698 Epoch 11/100 5/5 [==============================] - 0s 4ms/step - loss: 48.6194 - mae: 48.6194 Epoch 12/100 5/5 [==============================] - 0s 4ms/step - loss: 43.2044 - mae: 43.2044 Epoch 13/100 5/5 [==============================] - 0s 4ms/step - loss: 42.6293 - mae: 42.6293 Epoch 14/100 5/5 [==============================] - 0s 4ms/step - loss: 42.4557 - mae: 42.4557 Epoch 15/100 5/5 [==============================] - 0s 4ms/step - loss: 41.9446 - mae: 41.9446 Epoch 16/100 5/5 [==============================] - 0s 4ms/step - loss: 41.7290 - mae: 41.7290 Epoch 17/100 5/5 [==============================] - 0s 4ms/step - loss: 41.5669 - mae: 41.5669 Epoch 18/100 5/5 [==============================] - 0s 4ms/step - loss: 41.2955 - mae: 41.2955 Epoch 19/100 5/5 [==============================] - 0s 4ms/step - loss: 41.1274 - mae: 41.1274 Epoch 20/100 5/5 [==============================] - 0s 4ms/step - loss: 41.1121 - mae: 41.1121 Epoch 21/100 5/5 [==============================] - 0s 4ms/step - loss: 41.1519 - mae: 41.1519 Epoch 22/100 5/5 [==============================] - 0s 4ms/step - loss: 41.0565 - mae: 41.0565 Epoch 23/100 5/5 [==============================] - 0s 4ms/step - loss: 41.1133 - mae: 41.1133 Epoch 24/100 5/5 [==============================] - 0s 60ms/step - loss: 41.0074 - mae: 41.0074 Epoch 25/100 5/5 [==============================] - 0s 4ms/step - loss: 40.9870 - mae: 40.9870 Epoch 26/100 5/5 [==============================] - 0s 4ms/step - loss: 41.0249 - mae: 41.0249 Epoch 27/100 5/5 [==============================] - 0s 3ms/step - loss: 40.8403 - mae: 40.8403 Epoch 28/100 5/5 [==============================] - 0s 4ms/step - loss: 40.9965 - mae: 40.9965 Epoch 29/100 5/5 [==============================] - 0s 4ms/step - loss: 41.0225 - mae: 41.0225 Epoch 30/100 5/5 [==============================] - 0s 4ms/step - loss: 40.8058 - mae: 40.8058 Epoch 31/100 5/5 [==============================] - 0s 4ms/step - loss: 41.3589 - mae: 41.3589 Epoch 32/100 5/5 [==============================] - 0s 4ms/step - loss: 41.0084 - mae: 41.0084 Epoch 33/100 5/5 [==============================] - 0s 4ms/step - loss: 41.0701 - mae: 41.0701 Epoch 34/100 5/5 [==============================] - 0s 4ms/step - loss: 41.2035 - mae: 41.2035 Epoch 35/100 5/5 [==============================] - 0s 3ms/step - loss: 40.5885 - mae: 40.5885 Epoch 36/100 5/5 [==============================] - 0s 4ms/step - loss: 41.0615 - mae: 41.0615 Epoch 37/100 5/5 [==============================] - 0s 4ms/step - loss: 40.6438 - mae: 40.6438 Epoch 38/100 5/5 [==============================] - 0s 4ms/step - loss: 40.3412 - mae: 40.3412 Epoch 39/100 5/5 [==============================] - 0s 4ms/step - loss: 40.6498 - mae: 40.6498 Epoch 40/100 5/5 [==============================] - 0s 4ms/step - loss: 40.4421 - mae: 40.4421 Epoch 41/100 5/5 [==============================] - 0s 4ms/step - loss: 40.3558 - mae: 40.3558 Epoch 42/100 5/5 [==============================] - 0s 4ms/step - loss: 40.3041 - mae: 40.3041 Epoch 43/100 5/5 [==============================] - 0s 4ms/step - loss: 40.5277 - mae: 40.5277 Epoch 44/100 5/5 [==============================] - 0s 4ms/step - loss: 40.1808 - mae: 40.1808 Epoch 45/100 5/5 [==============================] - 0s 4ms/step - loss: 40.6292 - mae: 40.6292 Epoch 46/100 5/5 [==============================] - 0s 3ms/step - loss: 40.4382 - mae: 40.4382 Epoch 47/100 5/5 [==============================] - 0s 4ms/step - loss: 40.1801 - mae: 40.1801 Epoch 48/100 5/5 [==============================] - 0s 4ms/step - loss: 40.2386 - mae: 40.2386 Epoch 49/100 5/5 [==============================] - 0s 3ms/step - loss: 40.7914 - mae: 40.7914 Epoch 50/100 5/5 [==============================] - 0s 3ms/step - loss: 40.1259 - mae: 40.1259 Epoch 51/100 5/5 [==============================] - 0s 4ms/step - loss: 40.4617 - mae: 40.4617 Epoch 52/100 5/5 [==============================] - 0s 4ms/step - loss: 40.8686 - mae: 40.8686 Epoch 53/100 5/5 [==============================] - 0s 4ms/step - loss: 41.0441 - mae: 41.0441 Epoch 54/100 5/5 [==============================] - 0s 4ms/step - loss: 41.1022 - mae: 41.1022 Epoch 55/100 5/5 [==============================] - 0s 4ms/step - loss: 42.1498 - mae: 42.1498 Epoch 56/100 5/5 [==============================] - 0s 4ms/step - loss: 42.3732 - mae: 42.3732 Epoch 57/100 5/5 [==============================] - 0s 4ms/step - loss: 40.9868 - mae: 40.9868 Epoch 58/100 5/5 [==============================] - 0s 4ms/step - loss: 40.4022 - mae: 40.4022 Epoch 59/100 5/5 [==============================] - 0s 4ms/step - loss: 41.1762 - mae: 41.1762 Epoch 60/100 5/5 [==============================] - 0s 4ms/step - loss: 40.0280 - mae: 40.0280 Epoch 61/100 5/5 [==============================] - 0s 4ms/step - loss: 39.4377 - mae: 39.4377 Epoch 62/100 5/5 [==============================] - 0s 4ms/step - loss: 40.2227 - mae: 40.2227 Epoch 63/100 5/5 [==============================] - 0s 4ms/step - loss: 39.7379 - mae: 39.7379 Epoch 64/100 5/5 [==============================] - 0s 4ms/step - loss: 39.4851 - mae: 39.4851 Epoch 65/100 5/5 [==============================] - 0s 4ms/step - loss: 39.8735 - mae: 39.8735 Epoch 66/100 5/5 [==============================] - 0s 4ms/step - loss: 39.5498 - mae: 39.5498 Epoch 67/100 5/5 [==============================] - 0s 3ms/step - loss: 39.5944 - mae: 39.5944 Epoch 68/100 5/5 [==============================] - 0s 3ms/step - loss: 39.5087 - mae: 39.5087 Epoch 69/100 5/5 [==============================] - 0s 3ms/step - loss: 39.3758 - mae: 39.3758 Epoch 70/100 5/5 [==============================] - 0s 4ms/step - loss: 39.8543 - mae: 39.8543 Epoch 71/100 5/5 [==============================] - 0s 4ms/step - loss: 41.2924 - mae: 41.2924 Epoch 72/100 5/5 [==============================] - 0s 4ms/step - loss: 39.0309 - mae: 39.0309 Epoch 73/100 5/5 [==============================] - 0s 3ms/step - loss: 39.7582 - mae: 39.7582 Epoch 74/100 5/5 [==============================] - 0s 4ms/step - loss: 39.1621 - mae: 39.1621 Epoch 75/100 5/5 [==============================] - 0s 4ms/step - loss: 39.9158 - mae: 39.9158 Epoch 76/100 5/5 [==============================] - 0s 4ms/step - loss: 40.2419 - mae: 40.2419 Epoch 77/100 5/5 [==============================] - 0s 4ms/step - loss: 38.9030 - mae: 38.9030 Epoch 78/100 5/5 [==============================] - 0s 3ms/step - loss: 39.5400 - mae: 39.5400 Epoch 79/100 5/5 [==============================] - 0s 3ms/step - loss: 39.2044 - mae: 39.2044 Epoch 80/100 5/5 [==============================] - 0s 3ms/step - loss: 38.7893 - mae: 38.7893 Epoch 81/100 5/5 [==============================] - 0s 3ms/step - loss: 38.8879 - mae: 38.8879 Epoch 82/100 5/5 [==============================] - 0s 4ms/step - loss: 38.9441 - mae: 38.9441 Epoch 83/100 5/5 [==============================] - 0s 4ms/step - loss: 38.6721 - mae: 38.6721 Epoch 84/100 5/5 [==============================] - 0s 4ms/step - loss: 38.7601 - mae: 38.7601 Epoch 85/100 5/5 [==============================] - 0s 4ms/step - loss: 39.0045 - mae: 39.0045 Epoch 86/100 5/5 [==============================] - 0s 4ms/step - loss: 38.9378 - mae: 38.9378 Epoch 87/100 5/5 [==============================] - 0s 3ms/step - loss: 38.3988 - mae: 38.3988 Epoch 88/100 5/5 [==============================] - 0s 3ms/step - loss: 38.5840 - mae: 38.5840 Epoch 89/100 5/5 [==============================] - 0s 3ms/step - loss: 38.4868 - mae: 38.4868 Epoch 90/100 5/5 [==============================] - 0s 4ms/step - loss: 38.3730 - mae: 38.3730 Epoch 91/100 5/5 [==============================] - 0s 3ms/step - loss: 38.2209 - mae: 38.2209 Epoch 92/100 5/5 [==============================] - 0s 4ms/step - loss: 38.3540 - mae: 38.3540 Epoch 93/100 5/5 [==============================] - 0s 4ms/step - loss: 38.6931 - mae: 38.6931 Epoch 94/100 5/5 [==============================] - 0s 4ms/step - loss: 37.9931 - mae: 37.9931 Epoch 95/100 5/5 [==============================] - 0s 4ms/step - loss: 38.0585 - mae: 38.0585 Epoch 96/100 5/5 [==============================] - 0s 4ms/step - loss: 38.4031 - mae: 38.4031 Epoch 97/100 5/5 [==============================] - 0s 4ms/step - loss: 38.0610 - mae: 38.0610 Epoch 98/100 5/5 [==============================] - 0s 4ms/step - loss: 38.3810 - mae: 38.3810 Epoch 99/100 5/5 [==============================] - 0s 4ms/step - loss: 38.4900 - mae: 38.4900 Epoch 100/100 5/5 [==============================] - 0s 4ms/step - loss: 37.9673 - mae: 37.9673

<keras.src.callbacks.History at 0x7a24c5b179a0>

Okay, it seems like our model is learning something (the mae value trends down with each epoch), let's plot its predictions.

# Make predictions with our trained model

y_reg_preds = model_3.predict(X_reg_test)

# Plot the model's predictions against our regression data

plt.figure(figsize=(10, 7))

plt.scatter(X_reg_train, y_reg_train, c='b', label='Training data')

plt.scatter(X_reg_test, y_reg_test, c='g', label='Testing data')

plt.scatter(X_reg_test, y_reg_preds.squeeze(), c='r', label='Predictions')

plt.legend();

2/2 [==============================] - 0s 4ms/step

Okay, the predictions aren't perfect (if the predictions were perfect, the red would line up with the green), but they look better than complete guessing.

So this means our model must be learning something...

There must be something we're missing out on for our classification problem.

The missing piece: Non-linearity¶

Okay, so we saw our neural network can model straight lines (with ability a little bit better than guessing).

What about non-straight (non-linear) lines?

If we're going to model our classification data (the red and blue circles), we're going to need some non-linear lines.

🔨 Practice: Before we get to the next steps, I'd encourage you to play around with the TensorFlow Playground (check out what the data has in common with our own classification data) for 10-minutes. In particular the tab which says "activation". Once you're done, come back.

Did you try out the activation options? If so, what did you find?

If you didn't, don't worry, let's see it in code.

We're going to replicate the neural network you can see at this link: TensorFlow Playground.

The neural network we're going to recreate with TensorFlow code. See it live at TensorFlow Playground.

The neural network we're going to recreate with TensorFlow code. See it live at TensorFlow Playground.

The main change we'll add to models we've built before is the use of the activation keyword.

# Set the random seed

tf.random.set_seed(42)

# Create the model

model_4 = tf.keras.Sequential([

tf.keras.layers.Dense(1, activation=tf.keras.activations.linear), # 1 hidden layer with linear activation

tf.keras.layers.Dense(1) # output layer

])

# Compile the model

model_4.compile(loss=tf.keras.losses.binary_crossentropy,

optimizer=tf.keras.optimizers.Adam(learning_rate=0.001), # note: "lr" used to be what was used, now "learning_rate" is favoured

metrics=["accuracy"])

# Fit the model

history = model_4.fit(X, y, epochs=100)

Epoch 1/100 32/32 [==============================] - 1s 3ms/step - loss: 4.2584 - accuracy: 0.5000 Epoch 2/100 32/32 [==============================] - 0s 3ms/step - loss: 4.0332 - accuracy: 0.5000 Epoch 3/100 32/32 [==============================] - 0s 3ms/step - loss: 3.8635 - accuracy: 0.4970 Epoch 4/100 32/32 [==============================] - 0s 3ms/step - loss: 3.6277 - accuracy: 0.4670 Epoch 5/100 32/32 [==============================] - 0s 3ms/step - loss: 3.3772 - accuracy: 0.4490 Epoch 6/100 32/32 [==============================] - 0s 3ms/step - loss: 3.0635 - accuracy: 0.4460 Epoch 7/100 32/32 [==============================] - 0s 3ms/step - loss: 2.7472 - accuracy: 0.4450 Epoch 8/100 32/32 [==============================] - 0s 3ms/step - loss: 2.1925 - accuracy: 0.4470 Epoch 9/100 32/32 [==============================] - 0s 3ms/step - loss: 1.1191 - accuracy: 0.4790 Epoch 10/100 32/32 [==============================] - 0s 3ms/step - loss: 0.9511 - accuracy: 0.4900 Epoch 11/100 32/32 [==============================] - 0s 3ms/step - loss: 0.9207 - accuracy: 0.4880 Epoch 12/100 32/32 [==============================] - 0s 3ms/step - loss: 0.8991 - accuracy: 0.4850 Epoch 13/100 32/32 [==============================] - 0s 3ms/step - loss: 0.8814 - accuracy: 0.4770 Epoch 14/100 32/32 [==============================] - 0s 3ms/step - loss: 0.8662 - accuracy: 0.4720 Epoch 15/100 32/32 [==============================] - 0s 3ms/step - loss: 0.8530 - accuracy: 0.4620 Epoch 16/100 32/32 [==============================] - 0s 3ms/step - loss: 0.8421 - accuracy: 0.4510 Epoch 17/100 32/32 [==============================] - 0s 3ms/step - loss: 0.8320 - accuracy: 0.4470 Epoch 18/100 32/32 [==============================] - 0s 3ms/step - loss: 0.8227 - accuracy: 0.4420 Epoch 19/100 32/32 [==============================] - 0s 3ms/step - loss: 0.8146 - accuracy: 0.4330 Epoch 20/100 32/32 [==============================] - 0s 3ms/step - loss: 0.8070 - accuracy: 0.4290 Epoch 21/100 32/32 [==============================] - 0s 3ms/step - loss: 0.8001 - accuracy: 0.4210 Epoch 22/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7937 - accuracy: 0.4140 Epoch 23/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7877 - accuracy: 0.4090 Epoch 24/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7823 - accuracy: 0.4090 Epoch 25/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7771 - accuracy: 0.4100 Epoch 26/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7722 - accuracy: 0.4140 Epoch 27/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7677 - accuracy: 0.4220 Epoch 28/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7633 - accuracy: 0.4320 Epoch 29/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7594 - accuracy: 0.4350 Epoch 30/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7557 - accuracy: 0.4460 Epoch 31/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7522 - accuracy: 0.4480 Epoch 32/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7489 - accuracy: 0.4470 Epoch 33/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7460 - accuracy: 0.4540 Epoch 34/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7432 - accuracy: 0.4520 Epoch 35/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7406 - accuracy: 0.4590 Epoch 36/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7381 - accuracy: 0.4620 Epoch 37/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7357 - accuracy: 0.4640 Epoch 38/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7335 - accuracy: 0.4640 Epoch 39/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7314 - accuracy: 0.4660 Epoch 40/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7295 - accuracy: 0.4670 Epoch 41/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7276 - accuracy: 0.4720 Epoch 42/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7259 - accuracy: 0.4740 Epoch 43/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7241 - accuracy: 0.4780 Epoch 44/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7227 - accuracy: 0.4770 Epoch 45/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7212 - accuracy: 0.4770 Epoch 46/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7198 - accuracy: 0.4760 Epoch 47/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7186 - accuracy: 0.4760 Epoch 48/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7173 - accuracy: 0.4780 Epoch 49/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7161 - accuracy: 0.4810 Epoch 50/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7150 - accuracy: 0.4780 Epoch 51/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7139 - accuracy: 0.4800 Epoch 52/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7129 - accuracy: 0.4820 Epoch 53/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7119 - accuracy: 0.4850 Epoch 54/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7110 - accuracy: 0.4850 Epoch 55/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7101 - accuracy: 0.4880 Epoch 56/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7092 - accuracy: 0.4870 Epoch 57/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7085 - accuracy: 0.4860 Epoch 58/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7078 - accuracy: 0.4910 Epoch 59/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7072 - accuracy: 0.4870 Epoch 60/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7064 - accuracy: 0.4900 Epoch 61/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7058 - accuracy: 0.4890 Epoch 62/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7053 - accuracy: 0.4900 Epoch 63/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7046 - accuracy: 0.4900 Epoch 64/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7041 - accuracy: 0.4900 Epoch 65/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7036 - accuracy: 0.4910 Epoch 66/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7031 - accuracy: 0.4870 Epoch 67/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7026 - accuracy: 0.4880 Epoch 68/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7022 - accuracy: 0.4880 Epoch 69/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7017 - accuracy: 0.4860 Epoch 70/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7013 - accuracy: 0.4860 Epoch 71/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7009 - accuracy: 0.4870 Epoch 72/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7005 - accuracy: 0.4900 Epoch 73/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7002 - accuracy: 0.4890 Epoch 74/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6999 - accuracy: 0.4890 Epoch 75/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6996 - accuracy: 0.4900 Epoch 76/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6993 - accuracy: 0.4900 Epoch 77/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6990 - accuracy: 0.4890 Epoch 78/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6987 - accuracy: 0.4890 Epoch 79/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6984 - accuracy: 0.4890 Epoch 80/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6981 - accuracy: 0.4870 Epoch 81/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6978 - accuracy: 0.4850 Epoch 82/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6976 - accuracy: 0.4850 Epoch 83/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6974 - accuracy: 0.4860 Epoch 84/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6971 - accuracy: 0.4870 Epoch 85/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6969 - accuracy: 0.4880 Epoch 86/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6968 - accuracy: 0.4880 Epoch 87/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6965 - accuracy: 0.4900 Epoch 88/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6964 - accuracy: 0.4860 Epoch 89/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6963 - accuracy: 0.4840 Epoch 90/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6962 - accuracy: 0.4900 Epoch 91/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6959 - accuracy: 0.4890 Epoch 92/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6958 - accuracy: 0.4880 Epoch 93/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6957 - accuracy: 0.4910 Epoch 94/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6956 - accuracy: 0.4860 Epoch 95/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6954 - accuracy: 0.4880 Epoch 96/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6953 - accuracy: 0.4850 Epoch 97/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6952 - accuracy: 0.4880 Epoch 98/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6951 - accuracy: 0.4880 Epoch 99/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6950 - accuracy: 0.4850 Epoch 100/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6948 - accuracy: 0.4900

Okay, our model performs a little worse than guessing.

Let's remind ourselves what our data looks like.

# Check out our data

plt.scatter(X[:, 0], X[:, 1], c=y, cmap=plt.cm.RdYlBu);

And let's see how our model is making predictions on it.

# Check the deicison boundary (blue is blue class, yellow is the crossover, red is red class)

plot_decision_boundary(model_4, X, y)

313/313 [==============================] - 0s 1ms/step doing binary classifcation...

Well, it looks like we're getting a straight (linear) line prediction again.

But our data is non-linear (not a straight line)...

What we're going to have to do is add some non-linearity to our model.

To do so, we'll use the activation parameter in on of our layers.

# Set random seed

tf.random.set_seed(42)

# Create a model with a non-linear activation

model_5 = tf.keras.Sequential([

tf.keras.layers.Dense(1, activation=tf.keras.activations.relu), # can also do activation='relu'

tf.keras.layers.Dense(1) # output layer

])

# Compile the model

model_5.compile(loss=tf.keras.losses.binary_crossentropy,

optimizer=tf.keras.optimizers.Adam(),

metrics=["accuracy"])

# Fit the model

history = model_5.fit(X, y, epochs=100)

Epoch 1/100 32/32 [==============================] - 1s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 2/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 3/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 4/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 5/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 6/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 7/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 8/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 9/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 10/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 11/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 12/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 13/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 14/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 15/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 16/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 17/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 18/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 19/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 20/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 21/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 22/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 23/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 24/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 25/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 26/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 27/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 28/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 29/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 30/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 31/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 32/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 33/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 34/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 35/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 36/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 37/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 38/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 39/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 40/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 41/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 42/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 43/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 44/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 45/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 46/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 47/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 48/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 49/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 50/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 51/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 52/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 53/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 54/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 55/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 56/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 57/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 58/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 59/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 60/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 61/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 62/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 63/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 64/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 65/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 66/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 67/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 68/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 69/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 70/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 71/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 72/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 73/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 74/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 75/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 76/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 77/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 78/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 79/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 80/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 81/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 82/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 83/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 84/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 85/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 86/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 87/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 88/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 89/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 90/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 91/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 92/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 93/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 94/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 95/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 96/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 97/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 98/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 99/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000 Epoch 100/100 32/32 [==============================] - 0s 3ms/step - loss: 7.7125 - accuracy: 0.5000

Hmm... still not learning...

What we if increased the number of neurons and layers?

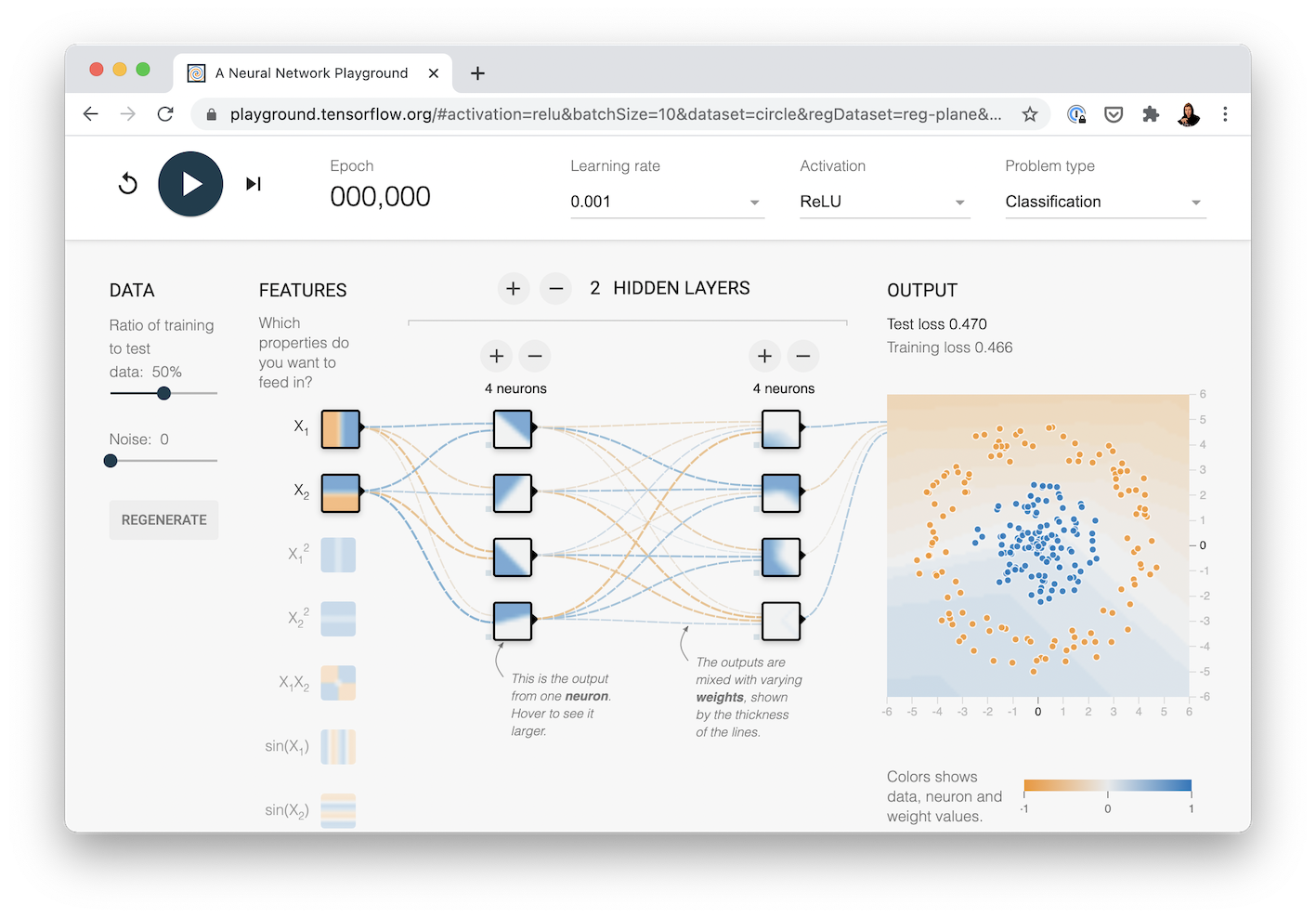

Say, 2 hidden layers, with ReLU, pronounced "rel-u", (short for rectified linear unit), activation on the first one, and 4 neurons each?

To see this network in action, check out the TensorFlow Playground demo.

The neural network we're going to recreate with TensorFlow code. See it live at TensorFlow Playground.

The neural network we're going to recreate with TensorFlow code. See it live at TensorFlow Playground.

Let's try.

Note: in the course, Daniel used lr instead of learning_rate. But for the update, we had changed to learning_rate instead of lr.

# Set random seed

tf.random.set_seed(42)

# Create a model

model_6 = tf.keras.Sequential([

tf.keras.layers.Dense(4, activation=tf.keras.activations.relu), # hidden layer 1, 4 neurons, ReLU activation

tf.keras.layers.Dense(4, activation=tf.keras.activations.relu), # hidden layer 2, 4 neurons, ReLU activation

tf.keras.layers.Dense(1) # ouput layer

])

# Compile the model

model_6.compile(loss=tf.keras.losses.binary_crossentropy,

optimizer=tf.keras.optimizers.Adam(learning_rate=0.001), # Adam's default learning rate is 0.001

metrics=['accuracy'])

# Fit the model

history = model_6.fit(X, y, epochs=100)

Epoch 1/100 32/32 [==============================] - 2s 3ms/step - loss: 4.3069 - accuracy: 0.5000 Epoch 2/100 32/32 [==============================] - 0s 3ms/step - loss: 4.0916 - accuracy: 0.5000 Epoch 3/100 32/32 [==============================] - 0s 3ms/step - loss: 3.9820 - accuracy: 0.4520 Epoch 4/100 32/32 [==============================] - 0s 3ms/step - loss: 3.8302 - accuracy: 0.4150 Epoch 5/100 32/32 [==============================] - 0s 3ms/step - loss: 3.7048 - accuracy: 0.4500 Epoch 6/100 32/32 [==============================] - 0s 3ms/step - loss: 3.5944 - accuracy: 0.4650 Epoch 7/100 32/32 [==============================] - 0s 3ms/step - loss: 3.1921 - accuracy: 0.4650 Epoch 8/100 32/32 [==============================] - 0s 3ms/step - loss: 2.6196 - accuracy: 0.4680 Epoch 9/100 32/32 [==============================] - 0s 3ms/step - loss: 1.3332 - accuracy: 0.4740 Epoch 10/100 32/32 [==============================] - 0s 3ms/step - loss: 0.9951 - accuracy: 0.4750 Epoch 11/100 32/32 [==============================] - 0s 3ms/step - loss: 0.9489 - accuracy: 0.4750 Epoch 12/100 32/32 [==============================] - 0s 3ms/step - loss: 0.9082 - accuracy: 0.4730 Epoch 13/100 32/32 [==============================] - 0s 3ms/step - loss: 0.8345 - accuracy: 0.4720 Epoch 14/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7435 - accuracy: 0.4690 Epoch 15/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7288 - accuracy: 0.4550 Epoch 16/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7245 - accuracy: 0.4620 Epoch 17/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7213 - accuracy: 0.4630 Epoch 18/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7186 - accuracy: 0.4670 Epoch 19/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7166 - accuracy: 0.4600 Epoch 20/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7143 - accuracy: 0.4560 Epoch 21/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7127 - accuracy: 0.4600 Epoch 22/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7107 - accuracy: 0.4580 Epoch 23/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7090 - accuracy: 0.4590 Epoch 24/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7075 - accuracy: 0.4640 Epoch 25/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7061 - accuracy: 0.4690 Epoch 26/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7046 - accuracy: 0.4640 Epoch 27/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7033 - accuracy: 0.4720 Epoch 28/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7012 - accuracy: 0.4650 Epoch 29/100 32/32 [==============================] - 0s 3ms/step - loss: 0.7002 - accuracy: 0.4660 Epoch 30/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6987 - accuracy: 0.4650 Epoch 31/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6973 - accuracy: 0.4660 Epoch 32/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6961 - accuracy: 0.4710 Epoch 33/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6944 - accuracy: 0.4760 Epoch 34/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6935 - accuracy: 0.4690 Epoch 35/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6922 - accuracy: 0.4850 Epoch 36/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6907 - accuracy: 0.4740 Epoch 37/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6895 - accuracy: 0.4940 Epoch 38/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6887 - accuracy: 0.4770 Epoch 39/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6875 - accuracy: 0.4680 Epoch 40/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6867 - accuracy: 0.4800 Epoch 41/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6862 - accuracy: 0.4850 Epoch 42/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6847 - accuracy: 0.4640 Epoch 43/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6837 - accuracy: 0.4730 Epoch 44/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6841 - accuracy: 0.4590 Epoch 45/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6820 - accuracy: 0.4520 Epoch 46/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6812 - accuracy: 0.4500 Epoch 47/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6813 - accuracy: 0.4730 Epoch 48/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6798 - accuracy: 0.4570 Epoch 49/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6791 - accuracy: 0.5190 Epoch 50/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6784 - accuracy: 0.5360 Epoch 51/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6779 - accuracy: 0.5270 Epoch 52/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6773 - accuracy: 0.5030 Epoch 53/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6766 - accuracy: 0.5350 Epoch 54/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6757 - accuracy: 0.5400 Epoch 55/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6756 - accuracy: 0.5410 Epoch 56/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6749 - accuracy: 0.5430 Epoch 57/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6744 - accuracy: 0.5420 Epoch 58/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6741 - accuracy: 0.5430 Epoch 59/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6737 - accuracy: 0.5420 Epoch 60/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6729 - accuracy: 0.5400 Epoch 61/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6727 - accuracy: 0.5410 Epoch 62/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6722 - accuracy: 0.5420 Epoch 63/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6715 - accuracy: 0.5390 Epoch 64/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6712 - accuracy: 0.5410 Epoch 65/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6709 - accuracy: 0.5400 Epoch 66/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6704 - accuracy: 0.5390 Epoch 67/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6701 - accuracy: 0.5400 Epoch 68/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6698 - accuracy: 0.5400 Epoch 69/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6691 - accuracy: 0.5420 Epoch 70/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6691 - accuracy: 0.5420 Epoch 71/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6684 - accuracy: 0.5410 Epoch 72/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6682 - accuracy: 0.5440 Epoch 73/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6680 - accuracy: 0.5480 Epoch 74/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6673 - accuracy: 0.5400 Epoch 75/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6675 - accuracy: 0.5500 Epoch 76/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6671 - accuracy: 0.5420 Epoch 77/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6666 - accuracy: 0.5530 Epoch 78/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6661 - accuracy: 0.5440 Epoch 79/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6657 - accuracy: 0.5490 Epoch 80/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6648 - accuracy: 0.5570 Epoch 81/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6647 - accuracy: 0.5580 Epoch 82/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6643 - accuracy: 0.5510 Epoch 83/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6637 - accuracy: 0.5460 Epoch 84/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6628 - accuracy: 0.5590 Epoch 85/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6625 - accuracy: 0.5540 Epoch 86/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6624 - accuracy: 0.5600 Epoch 87/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6615 - accuracy: 0.5560 Epoch 88/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6606 - accuracy: 0.5590 Epoch 89/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6605 - accuracy: 0.5650 Epoch 90/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6604 - accuracy: 0.5480 Epoch 91/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6588 - accuracy: 0.5580 Epoch 92/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6587 - accuracy: 0.5580 Epoch 93/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6586 - accuracy: 0.5490 Epoch 94/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6574 - accuracy: 0.5610 Epoch 95/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6567 - accuracy: 0.5560 Epoch 96/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6559 - accuracy: 0.5560 Epoch 97/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6551 - accuracy: 0.5590 Epoch 98/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6535 - accuracy: 0.5580 Epoch 99/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6538 - accuracy: 0.5760 Epoch 100/100 32/32 [==============================] - 0s 3ms/step - loss: 0.6523 - accuracy: 0.5640

# Evaluate the model

model_6.evaluate(X, y)

32/32 [==============================] - 0s 2ms/step - loss: 0.6508 - accuracy: 0.5640

[0.6507635116577148, 0.5640000104904175]

We're still hitting 50% accuracy, our model is still practically as good as guessing.

How do the predictions look?

# Check out the predictions using 2 hidden layers

plot_decision_boundary(model_6, X, y)

313/313 [==============================] - 0s 1ms/step doing binary classifcation...

What gives?

It seems like our model is the same as the one in the TensorFlow Playground but model it's still drawing straight lines...

Ideally, the yellow lines go on the inside of the red circle and the blue circle.

Okay, okay, let's model this circle once and for all.

One more model (I promise... actually, I'm going to have to break that promise... we'll be building plenty more models).

This time we'll change the activation function on our output layer too. Remember the architecture of a classification model? For binary classification, the output layer activation is usually the Sigmoid activation function.

# Set random seed

tf.random.set_seed(42)

# Create a model

model_7 = tf.keras.Sequential([

tf.keras.layers.Dense(4, activation=tf.keras.activations.relu), # hidden layer 1, ReLU activation

tf.keras.layers.Dense(4, activation=tf.keras.activations.relu), # hidden layer 2, ReLU activation

tf.keras.layers.Dense(1, activation=tf.keras.activations.sigmoid) # ouput layer, sigmoid activation

])

# Compile the model

model_7.compile(loss=tf.keras.losses.binary_crossentropy,