08. Natural Language Processing with TensorFlow¶

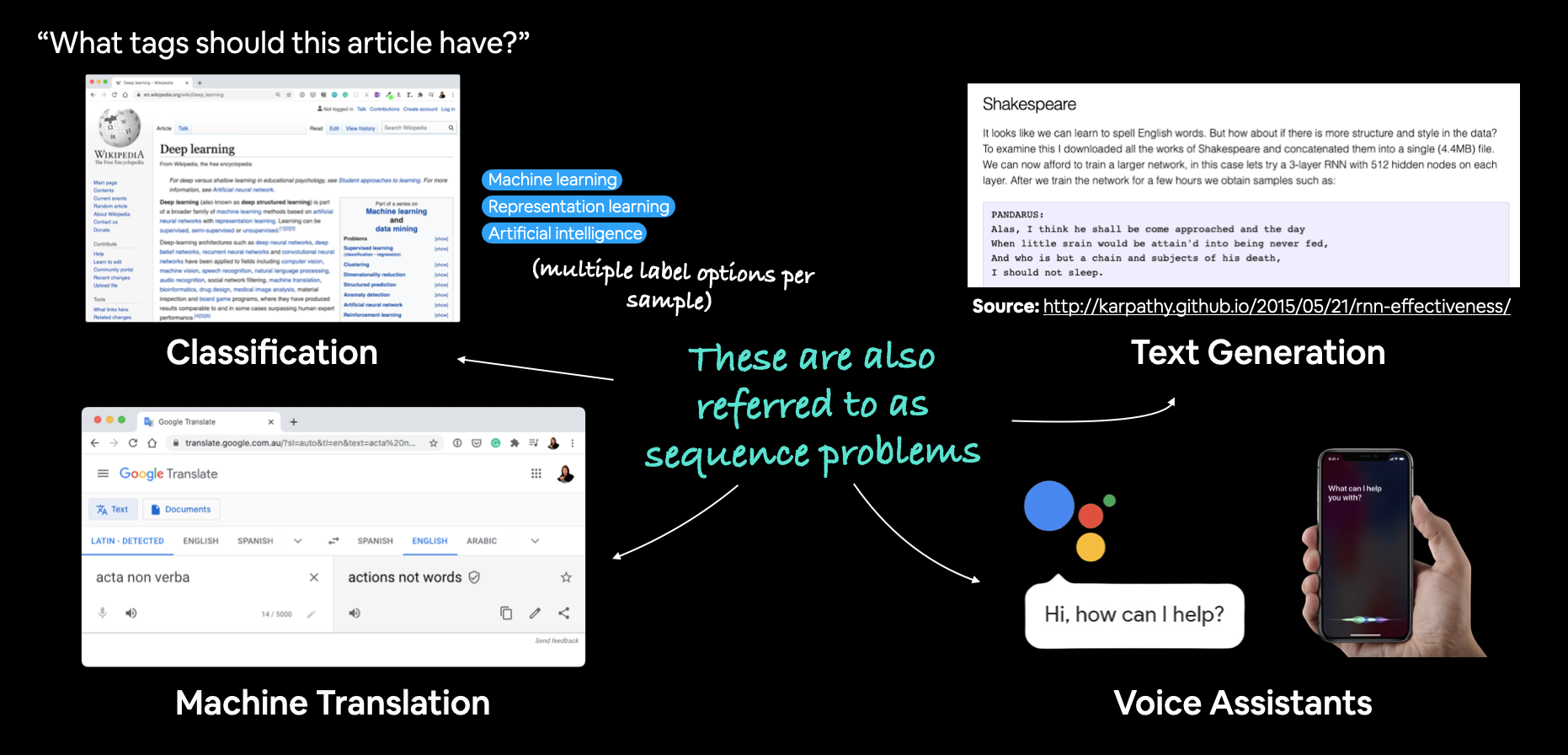

A handful of example natural language processing (NLP) and natural language understanding (NLU) problems. These are also often referred to as sequence problems (going from one sequence to another).

A handful of example natural language processing (NLP) and natural language understanding (NLU) problems. These are also often referred to as sequence problems (going from one sequence to another).

The main goal of natural language processing (NLP) is to derive information from natural language.

Natural language is a broad term but you can consider it to cover any of the following:

- Text (such as that contained in an email, blog post, book, Tweet)

- Speech (a conversation you have with a doctor, voice commands you give to a smart speaker)

Under the umbrellas of text and speech there are many different things you might want to do.

If you're building an email application, you might want to scan incoming emails to see if they're spam or not spam (classification).

If you're trying to analyse customer feedback complaints, you might want to discover which section of your business they're for.

🔑 Note: Both of these types of data are often referred to as sequences (a sentence is a sequence of words). So a common term you'll come across in NLP problems is called seq2seq, in other words, finding information in one sequence to produce another sequence (e.g. converting a speech command to a sequence of text-based steps).

To get hands-on with NLP in TensorFlow, we're going to practice the steps we've used previously but this time with text data:

Text -> turn into numbers -> build a model -> train the model to find patterns -> use patterns (make predictions)

📖 Resource: For a great overview of NLP and the different problems within it, read the article A Simple Introduction to Natural Language Processing.

What we're going to cover¶

Let's get specific hey?

- Downloading a text dataset

- Visualizing text data

- Converting text into numbers using tokenization

- Turning our tokenized text into an embedding

- Modelling a text dataset

- Starting with a baseline (TF-IDF)

- Building several deep learning text models

- Dense, LSTM, GRU, Conv1D, Transfer learning

- Comparing the performance of each our models

- Combining our models into an ensemble

- Saving and loading a trained model

- Find the most wrong predictions

How you should approach this notebook¶

You can read through the descriptions and the code (it should all run, except for the cells which error on purpose), but there's a better option.

Write all of the code yourself.

Yes. I'm serious. Create a new notebook, and rewrite each line by yourself. Investigate it, see if you can break it, why does it break?

You don't have to write the text descriptions but writing the code yourself is a great way to get hands-on experience.

Don't worry if you make mistakes, we all do. The way to get better and make less mistakes is to write more code.

📖 Resource: See the full set of course materials on GitHub: https://github.com/mrdbourke/tensorflow-deep-learning

import datetime

print(f"Notebook last run (end-to-end): {datetime.datetime.now()}")

Notebook last run (end-to-end): 2023-05-26 00:14:41.453244

Check for GPU¶

In order for our deep learning models to run as fast as possible, we'll need access to a GPU.

In Google Colab, you can set this up by going to Runtime -> Change runtime type -> Hardware accelerator -> GPU.

After selecting GPU, you may have to restart the runtime.

# Check for GPU

!nvidia-smi -L

GPU 0: NVIDIA A100-SXM4-40GB (UUID: GPU-a07b6e3e-3ef6-217b-d41f-dc5c4d6babfd)

Get helper functions¶

In past modules, we've created a bunch of helper functions to do small tasks required for our notebooks.

Rather than rewrite all of these, we can import a script and load them in from there.

The script containing our helper functions can be found on GitHub.

# Download helper functions script

!wget https://raw.githubusercontent.com/mrdbourke/tensorflow-deep-learning/main/extras/helper_functions.py

--2023-05-26 00:14:41-- https://raw.githubusercontent.com/mrdbourke/tensorflow-deep-learning/main/extras/helper_functions.py Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.108.133, 185.199.109.133, 185.199.110.133, ... Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.108.133|:443... connected. HTTP request sent, awaiting response... 200 OK Length: 10246 (10K) [text/plain] Saving to: ‘helper_functions.py’ helper_functions.py 100%[===================>] 10.01K --.-KB/s in 0s 2023-05-26 00:14:41 (94.5 MB/s) - ‘helper_functions.py’ saved [10246/10246]

# Import series of helper functions for the notebook

from helper_functions import unzip_data, create_tensorboard_callback, plot_loss_curves, compare_historys

Download a text dataset¶

Let's start by download a text dataset. We'll be using the Real or Not? dataset from Kaggle which contains text-based Tweets about natural disasters.

The Real Tweets are actually about disasters, for example:

Jetstar and Virgin forced to cancel Bali flights again because of ash from Mount Raung volcano

The Not Real Tweets are Tweets not about disasters (they can be on anything), for example:

'Education is the most powerful weapon which you can use to change the world.' Nelson #Mandela #quote

For convenience, the dataset has been downloaded from Kaggle (doing this requires a Kaggle account) and uploaded as a downloadable zip file.

🔑 Note: The original downloaded data has not been altered to how you would download it from Kaggle.

# Download data (same as from Kaggle)

!wget "https://storage.googleapis.com/ztm_tf_course/nlp_getting_started.zip"

# Unzip data

unzip_data("nlp_getting_started.zip")

--2023-05-26 00:14:45-- https://storage.googleapis.com/ztm_tf_course/nlp_getting_started.zip Resolving storage.googleapis.com (storage.googleapis.com)... 172.217.194.128, 74.125.200.128, 74.125.68.128, ... Connecting to storage.googleapis.com (storage.googleapis.com)|172.217.194.128|:443... connected. HTTP request sent, awaiting response... 200 OK Length: 607343 (593K) [application/zip] Saving to: ‘nlp_getting_started.zip’ nlp_getting_started 100%[===================>] 593.11K 731KB/s in 0.8s 2023-05-26 00:14:46 (731 KB/s) - ‘nlp_getting_started.zip’ saved [607343/607343]

Unzipping nlp_getting_started.zip gives the following 3 .csv files:

sample_submission.csv- an example of the file you'd submit to the Kaggle competition of your model's predictions.train.csv- training samples of real and not real diaster Tweets.test.csv- testing samples of real and not real diaster Tweets.

Visualizing a text dataset¶

Once you've acquired a new dataset to work with, what should you do first?

Explore it? Inspect it? Verify it? Become one with it?

All correct.

Remember the motto: visualize, visualize, visualize.

Right now, our text data samples are in the form of .csv files. For an easy way to make them visual, let's turn them into pandas DataFrame's.

📖 Reading: You might come across text datasets in many different formats. Aside from CSV files (what we're working with), you'll probably encounter

.txtfiles and.jsonfiles too. For working with these type of files, I'd recommend reading the two following articles by RealPython:

# Turn .csv files into pandas DataFrame's

import pandas as pd

train_df = pd.read_csv("train.csv")

test_df = pd.read_csv("test.csv")

train_df.head()

| id | keyword | location | text | target | |

|---|---|---|---|---|---|

| 0 | 1 | NaN | NaN | Our Deeds are the Reason of this #earthquake M... | 1 |

| 1 | 4 | NaN | NaN | Forest fire near La Ronge Sask. Canada | 1 |

| 2 | 5 | NaN | NaN | All residents asked to 'shelter in place' are ... | 1 |

| 3 | 6 | NaN | NaN | 13,000 people receive #wildfires evacuation or... | 1 |

| 4 | 7 | NaN | NaN | Just got sent this photo from Ruby #Alaska as ... | 1 |

The training data we downloaded is probably shuffled already. But just to be sure, let's shuffle it again.

# Shuffle training dataframe

train_df_shuffled = train_df.sample(frac=1, random_state=42) # shuffle with random_state=42 for reproducibility

train_df_shuffled.head()

| id | keyword | location | text | target | |

|---|---|---|---|---|---|

| 2644 | 3796 | destruction | NaN | So you have a new weapon that can cause un-ima... | 1 |

| 2227 | 3185 | deluge | NaN | The f$&@ing things I do for #GISHWHES Just... | 0 |

| 5448 | 7769 | police | UK | DT @georgegalloway: RT @Galloway4Mayor: ÛÏThe... | 1 |

| 132 | 191 | aftershock | NaN | Aftershock back to school kick off was great. ... | 0 |

| 6845 | 9810 | trauma | Montgomery County, MD | in response to trauma Children of Addicts deve... | 0 |

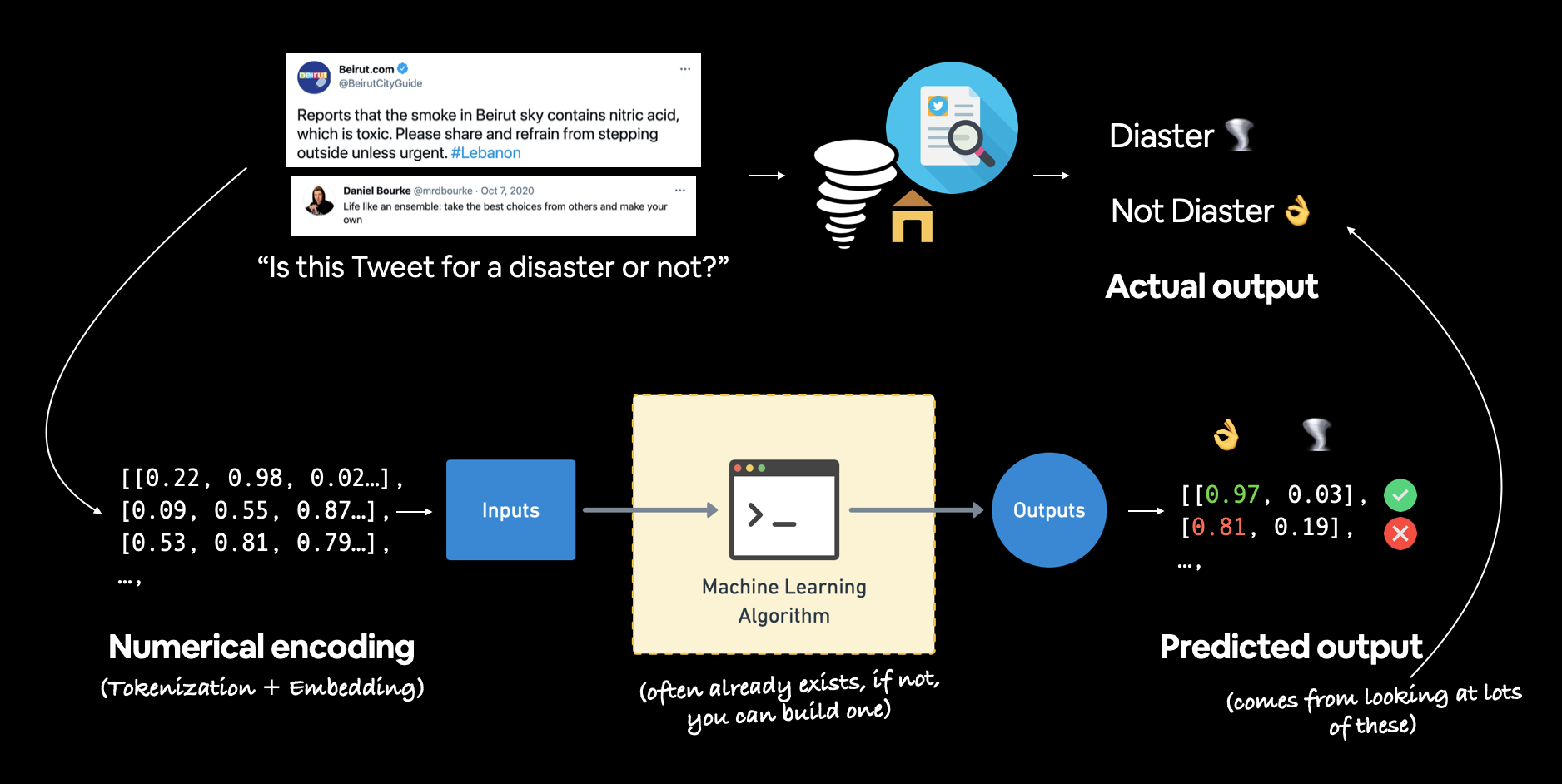

Notice how the training data has a "target" column.

We're going to be writing code to find patterns (e.g. different combinations of words) in the "text" column of the training dataset to predict the value of the "target" column.

The test dataset doesn't have a "target" column.

Inputs (text column) -> Machine Learning Algorithm -> Outputs (target column)

Example text classification inputs and outputs for the problem of classifying whether a Tweet is about a disaster or not.

Example text classification inputs and outputs for the problem of classifying whether a Tweet is about a disaster or not.

# The test data doesn't have a target (that's what we'd try to predict)

test_df.head()

| id | keyword | location | text | |

|---|---|---|---|---|

| 0 | 0 | NaN | NaN | Just happened a terrible car crash |

| 1 | 2 | NaN | NaN | Heard about #earthquake is different cities, s... |

| 2 | 3 | NaN | NaN | there is a forest fire at spot pond, geese are... |

| 3 | 9 | NaN | NaN | Apocalypse lighting. #Spokane #wildfires |

| 4 | 11 | NaN | NaN | Typhoon Soudelor kills 28 in China and Taiwan |

Let's check how many examples of each target we have.

# How many examples of each class?

train_df.target.value_counts()

0 4342 1 3271 Name: target, dtype: int64

Since we have two target values, we're dealing with a binary classification problem.

It's fairly balanced too, about 60% negative class (target = 0) and 40% positive class (target = 1).

Where,

1= a real disaster Tweet0= not a real disaster Tweet

And what about the total number of samples we have?

# How many samples total?

print(f"Total training samples: {len(train_df)}")

print(f"Total test samples: {len(test_df)}")

print(f"Total samples: {len(train_df) + len(test_df)}")

Total training samples: 7613 Total test samples: 3263 Total samples: 10876

Alright, seems like we've got a decent amount of training and test data. If anything, we've got an abundance of testing examples, usually a split of 90/10 (90% training, 10% testing) or 80/20 is suffice.

Okay, time to visualize, let's write some code to visualize random text samples.

🤔 Question: Why visualize random samples? You could visualize samples in order but this could lead to only seeing a certain subset of data. Better to visualize a substantial quantity (100+) of random samples to get an idea of the different kinds of data you're working with. In machine learning, never underestimate the power of randomness.

# Let's visualize some random training examples

import random

random_index = random.randint(0, len(train_df)-5) # create random indexes not higher than the total number of samples

for row in train_df_shuffled[["text", "target"]][random_index:random_index+5].itertuples():

_, text, target = row

print(f"Target: {target}", "(real disaster)" if target > 0 else "(not real disaster)")

print(f"Text:\n{text}\n")

print("---\n")

Target: 0 (not real disaster) Text: @JamesMelville Some old testimony of weapons used to promote conflicts Tactics - corruption & infiltration of groups https://t.co/cyU8zxw1oH --- Target: 1 (real disaster) Text: Now Trending in Nigeria: Police charge traditional ruler others with informantÛªs murder http://t.co/93inFxzhX0 --- Target: 1 (real disaster) Text: REPORTED: HIT & RUN-IN ROADWAY-PROPERTY DAMAGE at 15901 STATESVILLE RD --- Target: 1 (real disaster) Text: ohH NO FUKURODANI DIDN'T SURVIVE THE APOCALYPSE BOKUTO FEELS HORRIBLE my poor boy my ppor child --- Target: 1 (real disaster) Text: Maryland mansion fire that killed 6 caused by damaged plug under Christmas tree report says - Into the flames: Firefighter's bravery... ---

Split data into training and validation sets¶

Since the test set has no labels and we need a way to evalaute our trained models, we'll split off some of the training data and create a validation set.

When our model trains (tries patterns in the Tweet samples), it'll only see data from the training set and we can see how it performs on unseen data using the validation set.

We'll convert our splits from pandas Series datatypes to lists of strings (for the text) and lists of ints (for the labels) for ease of use later.

To split our training dataset and create a validation dataset, we'll use Scikit-Learn's train_test_split() method and dedicate 10% of the training samples to the validation set.

from sklearn.model_selection import train_test_split

# Use train_test_split to split training data into training and validation sets

train_sentences, val_sentences, train_labels, val_labels = train_test_split(train_df_shuffled["text"].to_numpy(),

train_df_shuffled["target"].to_numpy(),

test_size=0.1, # dedicate 10% of samples to validation set

random_state=42) # random state for reproducibility

# Check the lengths

len(train_sentences), len(train_labels), len(val_sentences), len(val_labels)

(6851, 6851, 762, 762)

# View the first 10 training sentences and their labels

train_sentences[:10], train_labels[:10]

(array(['@mogacola @zamtriossu i screamed after hitting tweet',

'Imagine getting flattened by Kurt Zouma',

'@Gurmeetramrahim #MSGDoing111WelfareWorks Green S welfare force ke appx 65000 members har time disaster victim ki help ke liye tyar hai....',

"@shakjn @C7 @Magnums im shaking in fear he's gonna hack the planet",

'Somehow find you and I collide http://t.co/Ee8RpOahPk',

'@EvaHanderek @MarleyKnysh great times until the bus driver held us hostage in the mall parking lot lmfao',

'destroy the free fandom honestly',

'Weapons stolen from National Guard Armory in New Albany still missing #Gunsense http://t.co/lKNU8902JE',

'@wfaaweather Pete when will the heat wave pass? Is it really going to be mid month? Frisco Boy Scouts have a canoe trip in Okla.',

'Patient-reported outcomes in long-term survivors of metastatic colorectal cancer - British Journal of Surgery http://t.co/5Yl4DC1Tqt'],

dtype=object),

array([0, 0, 1, 0, 0, 1, 1, 0, 1, 1]))

Converting text into numbers¶

Wonderful! We've got a training set and a validation set containing Tweets and labels.

Our labels are in numerical form (0 and 1) but our Tweets are in string form.

🤔 Question: What do you think we have to do before we can use a machine learning algorithm with our text data?

If you answered something along the lines of "turn it into numbers", you're correct. A machine learning algorithm requires its inputs to be in numerical form.

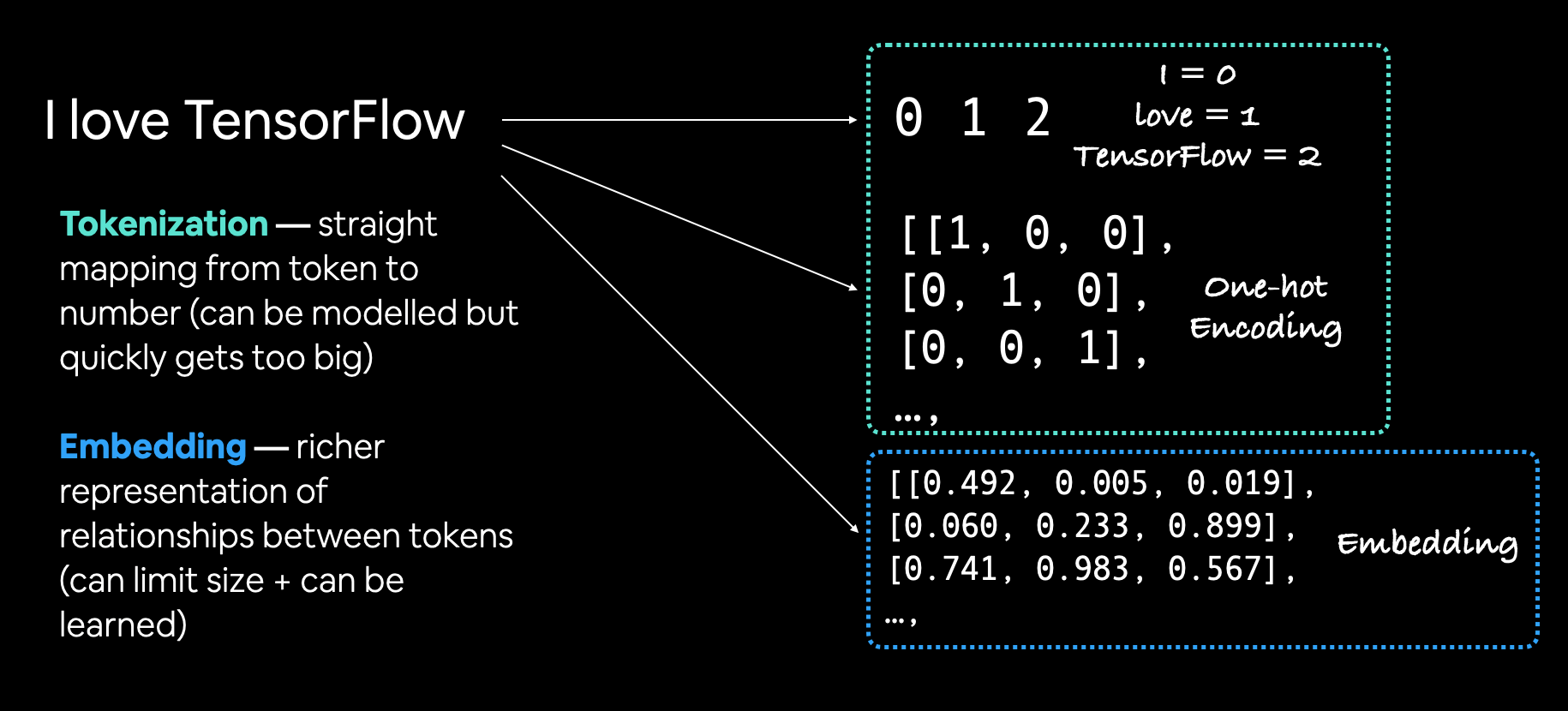

In NLP, there are two main concepts for turning text into numbers:

- Tokenization - A straight mapping from word or character or sub-word to a numerical value. There are three main levels of tokenization:

- Using word-level tokenization with the sentence "I love TensorFlow" might result in "I" being

0, "love" being1and "TensorFlow" being2. In this case, every word in a sequence considered a single token. - Character-level tokenization, such as converting the letters A-Z to values

1-26. In this case, every character in a sequence considered a single token. - Sub-word tokenization is in between word-level and character-level tokenization. It involves breaking invidual words into smaller parts and then converting those smaller parts into numbers. For example, "my favourite food is pineapple pizza" might become "my, fav, avour, rite, fo, oo, od, is, pin, ine, app, le, piz, za". After doing this, these sub-words would then be mapped to a numerical value. In this case, every word could be considered multiple tokens.

- Using word-level tokenization with the sentence "I love TensorFlow" might result in "I" being

- Embeddings - An embedding is a representation of natural language which can be learned. Representation comes in the form of a feature vector. For example, the word "dance" could be represented by the 5-dimensional vector

[-0.8547, 0.4559, -0.3332, 0.9877, 0.1112]. It's important to note here, the size of the feature vector is tuneable. There are two ways to use embeddings:- Create your own embedding - Once your text has been turned into numbers (required for an embedding), you can put them through an embedding layer (such as

tf.keras.layers.Embedding) and an embedding representation will be learned during model training. - Reuse a pre-learned embedding - Many pre-trained embeddings exist online. These pre-trained embeddings have often been learned on large corpuses of text (such as all of Wikipedia) and thus have a good underlying representation of natural language. You can use a pre-trained embedding to initialize your model and fine-tune it to your own specific task.

- Create your own embedding - Once your text has been turned into numbers (required for an embedding), you can put them through an embedding layer (such as

Example of tokenization (straight mapping from word to number) and embedding (richer representation of relationships between tokens).

Example of tokenization (straight mapping from word to number) and embedding (richer representation of relationships between tokens).

🤔 Question: What level of tokenzation should I use? What embedding should should I choose?

It depends on your problem. You could try character-level tokenization/embeddings and word-level tokenization/embeddings and see which perform best. You might even want to try stacking them (e.g. combining the outputs of your embedding layers using tf.keras.layers.concatenate).

If you're looking for pre-trained word embeddings, Word2vec embeddings, GloVe embeddings and many of the options available on TensorFlow Hub are great places to start.

🔑 Note: Much like searching for a pre-trained computer vision model, you can search for pre-trained word embeddings to use for your problem. Try searching for something like "use pre-trained word embeddings in TensorFlow".

Text vectorization (tokenization)¶

Enough talking about tokenization and embeddings, let's create some.

We'll practice tokenzation (mapping our words to numbers) first.

To tokenize our words, we'll use the helpful preprocessing layer tf.keras.layers.experimental.preprocessing.TextVectorization.

The TextVectorization layer takes the following parameters:

max_tokens- The maximum number of words in your vocabulary (e.g. 20000 or the number of unique words in your text), includes a value for OOV (out of vocabulary) tokens.standardize- Method for standardizing text. Default is"lower_and_strip_punctuation"which lowers text and removes all punctuation marks.split- How to split text, default is"whitespace"which splits on spaces.ngrams- How many words to contain per token split, for example,ngrams=2splits tokens into continuous sequences of 2.output_mode- How to output tokens, can be"int"(integer mapping),"binary"(one-hot encoding),"count"or"tf-idf". See documentation for more.output_sequence_length- Length of tokenized sequence to output. For example, ifoutput_sequence_length=150, all tokenized sequences will be 150 tokens long.pad_to_max_tokens- Defaults toFalse, ifTrue, the output feature axis will be padded tomax_tokenseven if the number of unique tokens in the vocabulary is less thanmax_tokens. Only valid in certain modes, see docs for more.

Let's see it in action.

import tensorflow as tf

from tensorflow.keras.layers import TextVectorization # after TensorFlow 2.6

# Before TensorFlow 2.6

# from tensorflow.keras.layers.experimental.preprocessing import TextVectorization

# Note: in TensorFlow 2.6+, you no longer need "layers.experimental.preprocessing"

# you can use: "tf.keras.layers.TextVectorization", see https://github.com/tensorflow/tensorflow/releases/tag/v2.6.0 for more

# Use the default TextVectorization variables

text_vectorizer = TextVectorization(max_tokens=None, # how many words in the vocabulary (all of the different words in your text)

standardize="lower_and_strip_punctuation", # how to process text

split="whitespace", # how to split tokens

ngrams=None, # create groups of n-words?

output_mode="int", # how to map tokens to numbers

output_sequence_length=None) # how long should the output sequence of tokens be?

# pad_to_max_tokens=True) # Not valid if using max_tokens=None

We've initialized a TextVectorization object with the default settings but let's customize it a little bit for our own use case.

In particular, let's set values for max_tokens and output_sequence_length.

For max_tokens (the number of words in the vocabulary), multiples of 10,000 (10,000, 20,000, 30,000) or the exact number of unique words in your text (e.g. 32,179) are common values.

For our use case, we'll use 10,000.

And for the output_sequence_length we'll use the average number of tokens per Tweet in the training set. But first, we'll need to find it.

# Find average number of tokens (words) in training Tweets

round(sum([len(i.split()) for i in train_sentences])/len(train_sentences))

15

Now let's create another TextVectorization object using our custom parameters.

# Setup text vectorization with custom variables

max_vocab_length = 10000 # max number of words to have in our vocabulary

max_length = 15 # max length our sequences will be (e.g. how many words from a Tweet does our model see?)

text_vectorizer = TextVectorization(max_tokens=max_vocab_length,

output_mode="int",

output_sequence_length=max_length)

Beautiful!

To map our TextVectorization instance text_vectorizer to our data, we can call the adapt() method on it whilst passing it our training text.

# Fit the text vectorizer to the training text

text_vectorizer.adapt(train_sentences)

Training data mapped! Let's try our text_vectorizer on a custom sentence (one similar to what you might see in the training data).

# Create sample sentence and tokenize it

sample_sentence = "There's a flood in my street!"

text_vectorizer([sample_sentence])

<tf.Tensor: shape=(1, 15), dtype=int64, numpy=

array([[264, 3, 232, 4, 13, 698, 0, 0, 0, 0, 0, 0, 0,

0, 0]])>

Wonderful, it seems we've got a way to turn our text into numbers (in this case, word-level tokenization). Notice the 0's at the end of the returned tensor, this is because we set output_sequence_length=15, meaning no matter the size of the sequence we pass to text_vectorizer, it always returns a sequence with a length of 15.

How about we try our text_vectorizer on a few random sentences?

# Choose a random sentence from the training dataset and tokenize it

random_sentence = random.choice(train_sentences)

print(f"Original text:\n{random_sentence}\

\n\nVectorized version:")

text_vectorizer([random_sentence])

Original text: new summer long thin body bag hip A word skirt Blue http://t.co/lvKoEMsq8m http://t.co/CjiRhHh4vj Vectorized version:

<tf.Tensor: shape=(1, 15), dtype=int64, numpy=

array([[ 50, 270, 480, 3335, 83, 322, 2436, 3, 1448, 3407, 824,

1, 1, 0, 0]])>

Looking good!

Finally, we can check the unique tokens in our vocabulary using the get_vocabulary() method.

# Get the unique words in the vocabulary

words_in_vocab = text_vectorizer.get_vocabulary()

top_5_words = words_in_vocab[:5] # most common tokens (notice the [UNK] token for "unknown" words)

bottom_5_words = words_in_vocab[-5:] # least common tokens

print(f"Number of words in vocab: {len(words_in_vocab)}")

print(f"Top 5 most common words: {top_5_words}")

print(f"Bottom 5 least common words: {bottom_5_words}")

Number of words in vocab: 10000 Top 5 most common words: ['', '[UNK]', 'the', 'a', 'in'] Bottom 5 least common words: ['pages', 'paeds', 'pads', 'padres', 'paddytomlinson1']

Creating an Embedding using an Embedding Layer¶

We've got a way to map our text to numbers. How about we go a step further and turn those numbers into an embedding?

The powerful thing about an embedding is it can be learned during training. This means rather than just being static (e.g. 1 = I, 2 = love, 3 = TensorFlow), a word's numeric representation can be improved as a model goes through data samples.

We can see what an embedding of a word looks like by using the tf.keras.layers.Embedding layer.

The main parameters we're concerned about here are:

input_dim- The size of the vocabulary (e.g.len(text_vectorizer.get_vocabulary()).output_dim- The size of the output embedding vector, for example, a value of100outputs a feature vector of size 100 for each word.embeddings_initializer- How to initialize the embeddings matrix, default is"uniform"which randomly initalizes embedding matrix with uniform distribution. This can be changed for using pre-learned embeddings.input_length- Length of sequences being passed to embedding layer.

Knowing these, let's make an embedding layer.

tf.random.set_seed(42)

from tensorflow.keras import layers

embedding = layers.Embedding(input_dim=max_vocab_length, # set input shape

output_dim=128, # set size of embedding vector

embeddings_initializer="uniform", # default, intialize randomly

input_length=max_length, # how long is each input

name="embedding_1")

embedding

<keras.layers.core.embedding.Embedding at 0x7f0c118dcc40>

Excellent, notice how embedding is a TensoFlow layer? This is important because we can use it as part of a model, meaning its parameters (word representations) can be updated and improved as the model learns.

How about we try it out on a sample sentence?

# Get a random sentence from training set

random_sentence = random.choice(train_sentences)

print(f"Original text:\n{random_sentence}\

\n\nEmbedded version:")

# Embed the random sentence (turn it into numerical representation)

sample_embed = embedding(text_vectorizer([random_sentence]))

sample_embed

Original text: Now on #ComDev #Asia: Radio stations in #Bangladesh broadcasting #programs ?to address the upcoming cyclone #komen http://t.co/iOVr4yMLKp Embedded version:

<tf.Tensor: shape=(1, 15, 128), dtype=float32, numpy=

array([[[-0.04868475, -0.03902867, -0.01375594, ..., 0.01682534,

-0.0439401 , -0.04604518],

[-0.04827927, -0.00328457, 0.02171678, ..., -0.03261749,

-0.01061803, -0.0481179 ],

[-0.02431345, 0.01104342, 0.00933889, ..., -0.04607272,

-0.00651377, 0.03853123],

...,

[-0.03270339, 0.03608486, 0.03573406, ..., 0.03622421,

0.03427652, -0.03483479],

[-0.0489977 , 0.01962234, 0.02186165, ..., 0.03139002,

-0.00744159, 0.0428594 ],

[ 0.01265842, 0.02462569, -0.04731182, ..., 0.00403734,

0.0431679 , 0.03959754]]], dtype=float32)>

Each token in the sentence gets turned into a length 128 feature vector.

# Check out a single token's embedding

sample_embed[0][0]

<tf.Tensor: shape=(128,), dtype=float32, numpy=

array([-0.04868475, -0.03902867, -0.01375594, 0.01587117, -0.02964617,

0.00738639, -0.03109504, 0.03008839, -0.01458266, -0.03069887,

-0.04926676, -0.03454053, -0.04019499, -0.04406711, 0.01975099,

0.02852687, -0.04052209, -0.03800124, 0.03438697, -0.0118026 ,

-0.03470664, -0.01146972, 0.0449667 , -0.00269016, 0.02131964,

-0.04141569, -0.03724197, 0.01624352, 0.03269556, 0.03813741,

0.03606123, 0.00698509, -0.03569689, 0.02056131, -0.03467314,

0.01110398, 0.02095172, 0.02219674, -0.04576088, -0.04229112,

-0.02345047, 0.02578488, 0.02985479, -0.00203061, 0.03920727,

0.04065951, 0.03973453, 0.03947322, 0.01699554, 0.0021927 ,

0.03676197, -0.04327145, 0.02495482, 0.02447238, -0.04413594,

-0.01388069, -0.00375951, -0.0328602 , -0.00067427, 0.01808068,

0.04227355, 0.02817165, 0.01965401, -0.01514393, 0.01905935,

-0.03820103, -0.04916845, 0.02303007, 0.00830983, 0.01011454,

-0.04043181, 0.02080727, -0.03319015, 0.04188809, -0.01183917,

-0.01822531, -0.02172413, 0.03059311, 0.02727925, -0.00328885,

-0.00808424, -0.02095444, -0.00894216, 0.00770078, -0.00439024,

0.03637768, 0.02007255, -0.02650907, -0.01374531, 0.01806785,

-0.03309877, -0.01076321, -0.04107616, 0.01709371, 0.04567242,

-0.01824218, 0.02805582, 0.02974418, -0.04001283, -0.04077357,

0.00323737, 0.04038842, -0.00992844, -0.03974843, 0.04533138,

0.04738795, 0.02837384, 0.03874009, -0.01673441, -0.00258055,

-0.01975214, -0.04166807, -0.02483889, -0.02804886, 0.04608755,

0.03544754, 0.02697959, 0.00242041, 0.00101637, -0.01162767,

-0.00497937, 0.00540714, -0.01258825, 0.00779672, 0.02742722,

0.01682534, -0.0439401 , -0.04604518], dtype=float32)>

These values might not mean much to us but they're what our computer sees each word as. When our model looks for patterns in different samples, these values will be updated as necessary.

🔑 Note: The previous two concepts (tokenization and embeddings) are the foundation for many NLP tasks. So if you're not sure about anything, be sure to research and conduct your own experiments to further help your understanding.

Modelling a text dataset¶

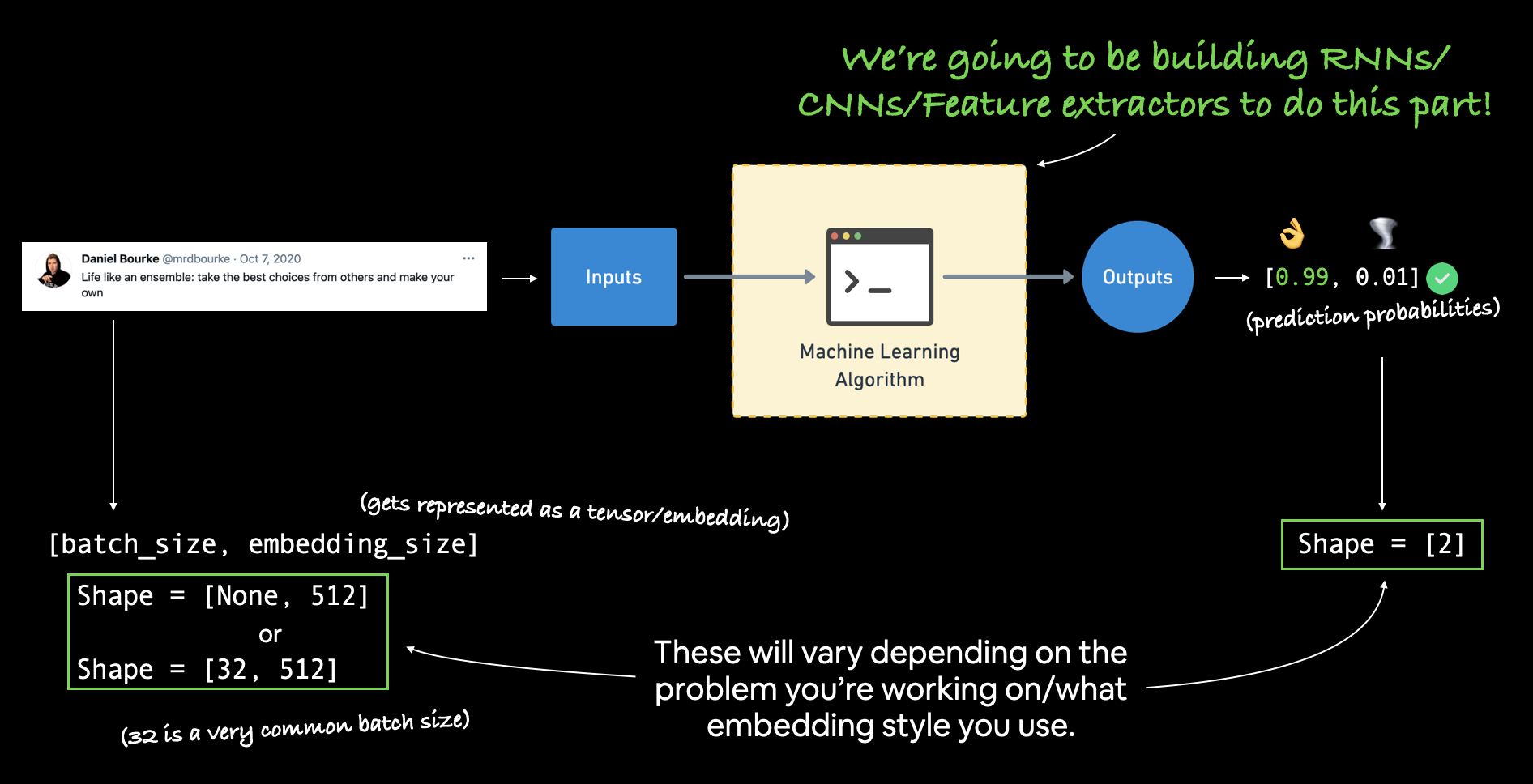

Once you've got your inputs and outputs prepared, it's a matter of figuring out which machine learning model to build in between them to bridge the gap.

Once you've got your inputs and outputs prepared, it's a matter of figuring out which machine learning model to build in between them to bridge the gap.

Now that we've got a way to turn our text data into numbers, we can start to build machine learning models to model it.

To get plenty of practice, we're going to build a series of different models, each as its own experiment. We'll then compare the results of each model and see which one performed best.

More specifically, we'll be building the following:

- Model 0: Naive Bayes (baseline)

- Model 1: Feed-forward neural network (dense model)

- Model 2: LSTM model

- Model 3: GRU model

- Model 4: Bidirectional-LSTM model

- Model 5: 1D Convolutional Neural Network

- Model 6: TensorFlow Hub Pretrained Feature Extractor

- Model 7: Same as model 6 with 10% of training data

Model 0 is the simplest to acquire a baseline which we'll expect each other of the other deeper models to beat.

Each experiment will go through the following steps:

- Construct the model

- Train the model

- Make predictions with the model

- Track prediction evaluation metrics for later comparison

Let's get started.

Model 0: Getting a baseline¶

As with all machine learning modelling experiments, it's important to create a baseline model so you've got a benchmark for future experiments to build upon.

To create our baseline, we'll create a Scikit-Learn Pipeline using the TF-IDF (term frequency-inverse document frequency) formula to convert our words to numbers and then model them with the Multinomial Naive Bayes algorithm. This was chosen via referring to the Scikit-Learn machine learning map.

📖 Reading: The ins and outs of TF-IDF algorithm is beyond the scope of this notebook, however, the curious reader is encouraged to check out the Scikit-Learn documentation for more.

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.naive_bayes import MultinomialNB

from sklearn.pipeline import Pipeline

# Create tokenization and modelling pipeline

model_0 = Pipeline([

("tfidf", TfidfVectorizer()), # convert words to numbers using tfidf

("clf", MultinomialNB()) # model the text

])

# Fit the pipeline to the training data

model_0.fit(train_sentences, train_labels)

Pipeline(steps=[('tfidf', TfidfVectorizer()), ('clf', MultinomialNB())])In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook. On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

Pipeline(steps=[('tfidf', TfidfVectorizer()), ('clf', MultinomialNB())])TfidfVectorizer()

MultinomialNB()

The benefit of using a shallow model like Multinomial Naive Bayes is that training is very fast.

Let's evaluate our model and find our baseline metric.

baseline_score = model_0.score(val_sentences, val_labels)

print(f"Our baseline model achieves an accuracy of: {baseline_score*100:.2f}%")

Our baseline model achieves an accuracy of: 79.27%

How about we make some predictions with our baseline model?

# Make predictions

baseline_preds = model_0.predict(val_sentences)

baseline_preds[:20]

array([1, 1, 1, 0, 0, 1, 1, 1, 1, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1])

Creating an evaluation function for our model experiments¶

We could evaluate these as they are but since we're going to be evaluating several models in the same way going forward, let's create a helper function which takes an array of predictions and ground truth labels and computes the following:

- Accuracy

- Precision

- Recall

- F1-score

🔑 Note: Since we're dealing with a classification problem, the above metrics are the most appropriate. If we were working with a regression problem, other metrics such as MAE (mean absolute error) would be a better choice.

# Function to evaluate: accuracy, precision, recall, f1-score

from sklearn.metrics import accuracy_score, precision_recall_fscore_support

def calculate_results(y_true, y_pred):

"""

Calculates model accuracy, precision, recall and f1 score of a binary classification model.

Args:

-----

y_true = true labels in the form of a 1D array

y_pred = predicted labels in the form of a 1D array

Returns a dictionary of accuracy, precision, recall, f1-score.

"""

# Calculate model accuracy

model_accuracy = accuracy_score(y_true, y_pred) * 100

# Calculate model precision, recall and f1 score using "weighted" average

model_precision, model_recall, model_f1, _ = precision_recall_fscore_support(y_true, y_pred, average="weighted")

model_results = {"accuracy": model_accuracy,

"precision": model_precision,

"recall": model_recall,

"f1": model_f1}

return model_results

# Get baseline results

baseline_results = calculate_results(y_true=val_labels,

y_pred=baseline_preds)

baseline_results

{'accuracy': 79.26509186351706,

'precision': 0.8111390004213173,

'recall': 0.7926509186351706,

'f1': 0.7862189758049549}

Model 1: A simple dense model¶

The first "deep" model we're going to build is a single layer dense model. In fact, it's barely going to have a single layer.

It'll take our text and labels as input, tokenize the text, create an embedding, find the average of the embedding (using Global Average Pooling) and then pass the average through a fully connected layer with one output unit and a sigmoid activation function.

If the previous sentence sounds like a mouthful, it'll make sense when we code it out (remember, if in doubt, code it out).

And since we're going to be building a number of TensorFlow deep learning models, we'll import our create_tensorboard_callback() function from helper_functions.py to keep track of the results of each.

# Create tensorboard callback (need to create a new one for each model)

from helper_functions import create_tensorboard_callback

# Create directory to save TensorBoard logs

SAVE_DIR = "model_logs"

Now we've got a TensorBoard callback function ready to go, let's build our first deep model.

# Build model with the Functional API

from tensorflow.keras import layers

inputs = layers.Input(shape=(1,), dtype="string") # inputs are 1-dimensional strings

x = text_vectorizer(inputs) # turn the input text into numbers

x = embedding(x) # create an embedding of the numerized numbers

x = layers.GlobalAveragePooling1D()(x) # lower the dimensionality of the embedding (try running the model without this layer and see what happens)

outputs = layers.Dense(1, activation="sigmoid")(x) # create the output layer, want binary outputs so use sigmoid activation

model_1 = tf.keras.Model(inputs, outputs, name="model_1_dense") # construct the model

Looking good. Our model takes a 1-dimensional string as input (in our case, a Tweet), it then tokenizes the string using text_vectorizer and creates an embedding using embedding.

We then (optionally) pool the outputs of the embedding layer to reduce the dimensionality of the tensor we pass to the output layer.

🛠 Exercise: Try building

model_1with and without aGlobalAveragePooling1D()layer after theembeddinglayer. What happens? Why do you think this is?

Finally, we pass the output of the pooling layer to a dense layer with sigmoid activation (we use sigmoid since our problem is binary classification).

Before we can fit our model to the data, we've got to compile it. Since we're working with binary classification, we'll use "binary_crossentropy" as our loss function and the Adam optimizer.

# Compile model

model_1.compile(loss="binary_crossentropy",

optimizer=tf.keras.optimizers.Adam(),

metrics=["accuracy"])

Model compiled. Let's get a summary.

# Get a summary of the model

model_1.summary()

Model: "model_1_dense"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_1 (InputLayer) [(None, 1)] 0

text_vectorization_1 (TextV (None, 15) 0

ectorization)

embedding_1 (Embedding) (None, 15, 128) 1280000

global_average_pooling1d (G (None, 128) 0

lobalAveragePooling1D)

dense (Dense) (None, 1) 129

=================================================================

Total params: 1,280,129

Trainable params: 1,280,129

Non-trainable params: 0

_________________________________________________________________

Most of the trainable parameters are contained within the embedding layer. Recall we created an embedding of size 128 (output_dim=128) for a vocabulary of size 10,000 (input_dim=10000), hence the 1,280,000 trainable parameters.

Alright, our model is compiled, let's fit it to our training data for 5 epochs. We'll also pass our TensorBoard callback function to make sure our model's training metrics are logged.

# Fit the model

model_1_history = model_1.fit(train_sentences, # input sentences can be a list of strings due to text preprocessing layer built-in model

train_labels,

epochs=5,

validation_data=(val_sentences, val_labels),

callbacks=[create_tensorboard_callback(dir_name=SAVE_DIR,

experiment_name="simple_dense_model")])

Saving TensorBoard log files to: model_logs/simple_dense_model/20230526-001451 Epoch 1/5 215/215 [==============================] - 18s 55ms/step - loss: 0.6098 - accuracy: 0.6936 - val_loss: 0.5360 - val_accuracy: 0.7559 Epoch 2/5 215/215 [==============================] - 2s 11ms/step - loss: 0.4417 - accuracy: 0.8194 - val_loss: 0.4691 - val_accuracy: 0.7887 Epoch 3/5 215/215 [==============================] - 2s 10ms/step - loss: 0.3471 - accuracy: 0.8616 - val_loss: 0.4588 - val_accuracy: 0.7887 Epoch 4/5 215/215 [==============================] - 2s 7ms/step - loss: 0.2856 - accuracy: 0.8921 - val_loss: 0.4637 - val_accuracy: 0.7913 Epoch 5/5 215/215 [==============================] - 2s 8ms/step - loss: 0.2388 - accuracy: 0.9115 - val_loss: 0.4760 - val_accuracy: 0.7861

Nice! Since we're using such a simple model, each epoch processes very quickly.

Let's check our model's performance on the validation set.

# Check the results

model_1.evaluate(val_sentences, val_labels)

24/24 [==============================] - 0s 2ms/step - loss: 0.4760 - accuracy: 0.7861

[0.4760194718837738, 0.7860892415046692]

embedding.weights

[<tf.Variable 'embedding_1/embeddings:0' shape=(10000, 128) dtype=float32, numpy=

array([[-0.01078545, 0.05590528, 0.03125916, ..., -0.0312557 ,

-0.05340781, -0.03800201],

[-0.02370532, 0.01161508, 0.0097667 , ..., -0.04962142,

-0.00636482, 0.03781125],

[-0.05472897, 0.05356752, 0.02146765, ..., 0.05501205,

0.01705659, -0.05321405],

...,

[ 0.01756669, -0.03676652, -0.00949616, ..., -0.00987446,

-0.04183743, 0.03016822],

[-0.07823883, 0.06081628, -0.07657789, ..., 0.07998865,

-0.05281445, -0.02332675],

[-0.03393482, 0.08871375, -0.06819566, ..., 0.06992952,

-0.09992232, -0.02705033]], dtype=float32)>]

embed_weights = model_1.get_layer("embedding_1").get_weights()[0]

print(embed_weights.shape)

(10000, 128)

And since we tracked our model's training logs with TensorBoard, how about we visualize them?

We can do so by uploading our TensorBoard log files (contained in the model_logs directory) to TensorBoard.dev.

🔑 Note: Remember, whatever you upload to TensorBoard.dev becomes public. If there are training logs you don't want to share, don't upload them.

# # View tensorboard logs of transfer learning modelling experiments (should be 4 models)

# # Upload TensorBoard dev records

# !tensorboard dev upload --logdir ./model_logs \

# --name "First deep model on text data" \

# --description "Trying a dense model with an embedding layer" \

# --one_shot # exits the uploader when upload has finished

# If you need to remove previous experiments, you can do so using the following command

# !tensorboard dev delete --experiment_id EXPERIMENT_ID_TO_DELETE

The TensorBoard.dev experiment for our first deep model can be viewed here: https://tensorboard.dev/experiment/5d1Xm10aT6m6MgyW3HAGfw/

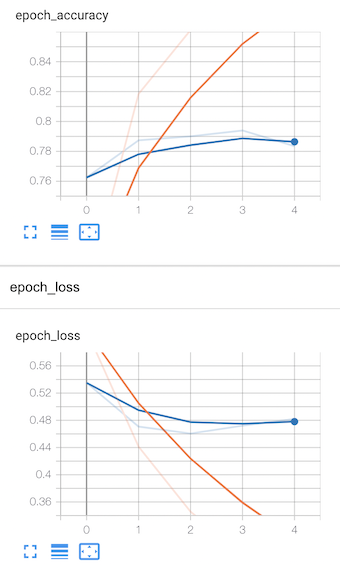

What the training curves of our model look like on TensorBoard. From looking at the curves can you tell if the model is overfitting or underfitting?

Beautiful! Those are some colorful training curves. Would you say the model is overfitting or underfitting?

We've built and trained our first deep model, the next step is to make some predictions with it.

# Make predictions (these come back in the form of probabilities)

model_1_pred_probs = model_1.predict(val_sentences)

model_1_pred_probs[:10] # only print out the first 10 prediction probabilities

24/24 [==============================] - 0s 2ms/step

array([[0.4068562 ],

[0.74714756],

[0.9978309 ],

[0.10913013],

[0.10925023],

[0.93645686],

[0.91428435],

[0.99250424],

[0.96829313],

[0.26842445]], dtype=float32)

Since our final layer uses a sigmoid activation function, we get our predictions back in the form of probabilities.

To convert them to prediction classes, we'll use tf.round(), meaning prediction probabilities below 0.5 will be rounded to 0 and those above 0.5 will be rounded to 1.

🔑 Note: In practice, the output threshold of a sigmoid prediction probability doesn't necessarily have to 0.5. For example, through testing, you may find that a cut off of 0.25 is better for your chosen evaluation metrics. A common example of this threshold cutoff is the precision-recall tradeoff (search for the keyword "tradeoff" to learn about the phenomenon).

# Turn prediction probabilities into single-dimension tensor of floats

model_1_preds = tf.squeeze(tf.round(model_1_pred_probs)) # squeeze removes single dimensions

model_1_preds[:20]

<tf.Tensor: shape=(20,), dtype=float32, numpy=

array([0., 1., 1., 0., 0., 1., 1., 1., 1., 0., 0., 1., 0., 0., 0., 0., 0.,

0., 0., 1.], dtype=float32)>

Now we've got our model's predictions in the form of classes, we can use our calculate_results() function to compare them to the ground truth validation labels.

# Calculate model_1 metrics

model_1_results = calculate_results(y_true=val_labels,

y_pred=model_1_preds)

model_1_results

{'accuracy': 78.60892388451444,

'precision': 0.7903277546022673,

'recall': 0.7860892388451444,

'f1': 0.7832971347503846}

How about we compare our first deep model to our baseline model?

# Is our simple Keras model better than our baseline model?

import numpy as np

np.array(list(model_1_results.values())) > np.array(list(baseline_results.values()))

array([False, False, False, False])

Since we'll be doing this kind of comparison (baseline compared to new model) quite a few times, let's create a function to help us out.

# Create a helper function to compare our baseline results to new model results

def compare_baseline_to_new_results(baseline_results, new_model_results):

for key, value in baseline_results.items():

print(f"Baseline {key}: {value:.2f}, New {key}: {new_model_results[key]:.2f}, Difference: {new_model_results[key]-value:.2f}")

compare_baseline_to_new_results(baseline_results=baseline_results,

new_model_results=model_1_results)

Baseline accuracy: 79.27, New accuracy: 78.61, Difference: -0.66 Baseline precision: 0.81, New precision: 0.79, Difference: -0.02 Baseline recall: 0.79, New recall: 0.79, Difference: -0.01 Baseline f1: 0.79, New f1: 0.78, Difference: -0.00

Visualizing learned embeddings¶

Our first model (model_1) contained an embedding layer (embedding) which learned a way of representing words as feature vectors by passing over the training data.

Hearing this for the first few times may sound confusing.

So to further help understand what a text embedding is, let's visualize the embedding our model learned.

To do so, let's remind ourselves of the words in our vocabulary.

# Get the vocabulary from the text vectorization layer

words_in_vocab = text_vectorizer.get_vocabulary()

len(words_in_vocab), words_in_vocab[:10]

(10000, ['', '[UNK]', 'the', 'a', 'in', 'to', 'of', 'and', 'i', 'is'])

And now let's get our embedding layer's weights (these are the numerical representations of each word).

model_1.summary()

Model: "model_1_dense"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_1 (InputLayer) [(None, 1)] 0

text_vectorization_1 (TextV (None, 15) 0

ectorization)

embedding_1 (Embedding) (None, 15, 128) 1280000

global_average_pooling1d (G (None, 128) 0

lobalAveragePooling1D)

dense (Dense) (None, 1) 129

=================================================================

Total params: 1,280,129

Trainable params: 1,280,129

Non-trainable params: 0

_________________________________________________________________

# Get the weight matrix of embedding layer

# (these are the numerical patterns between the text in the training dataset the model has learned)

embed_weights = model_1.get_layer("embedding_1").get_weights()[0]

print(embed_weights.shape) # same size as vocab size and embedding_dim (each word is a embedding_dim size vector)

(10000, 128)

Now we've got these two objects, we can use the Embedding Projector tool to visualize our embedding.

To use the Embedding Projector tool, we need two files:

- The embedding vectors (same as embedding weights).

- The meta data of the embedding vectors (the words they represent - our vocabulary).

Right now, we've got of these files as Python objects. To download them to file, we're going to use the code example available on the TensorFlow word embeddings tutorial page.

# # Code below is adapted from: https://www.tensorflow.org/tutorials/text/word_embeddings#retrieve_the_trained_word_embeddings_and_save_them_to_disk

# import io

# # Create output writers

# out_v = io.open("embedding_vectors.tsv", "w", encoding="utf-8")

# out_m = io.open("embedding_metadata.tsv", "w", encoding="utf-8")

# # Write embedding vectors and words to file

# for num, word in enumerate(words_in_vocab):

# if num == 0:

# continue # skip padding token

# vec = embed_weights[num]

# out_m.write(word + "\n") # write words to file

# out_v.write("\t".join([str(x) for x in vec]) + "\n") # write corresponding word vector to file

# out_v.close()

# out_m.close()

# # Download files locally to upload to Embedding Projector

# try:

# from google.colab import files

# except ImportError:

# pass

# else:

# files.download("embedding_vectors.tsv")

# files.download("embedding_metadata.tsv")

Once you've downloaded the embedding vectors and metadata, you can visualize them using Embedding Vector tool:

- Go to http://projector.tensorflow.org/

- Click on "Load data"

- Upload the two files you downloaded (

embedding_vectors.tsvandembedding_metadata.tsv) - Explore

- Optional: You can share the data you've created by clicking "Publish"

What do you find?

Are words with similar meanings close together?

Remember, they might not be. The embeddings we downloaded are how our model interprets words, not necessarily how we interpret them.

Also, since the embedding has been learned purely from Tweets, it may contain some strange values as Tweets are a very unique style of natural language.

🤔 Question: Do you have to visualize embeddings every time?

No. Although helpful for gaining an intuition of what natural language embeddings are, it's not completely necessary. Especially as the dimensions of your vocabulary and embeddings grow, trying to comprehend them would become an increasingly difficult task.

Recurrent Neural Networks (RNN's)¶

For our next series of modelling experiments we're going to be using a special kind of neural network called a Recurrent Neural Network (RNN).

The premise of an RNN is simple: use information from the past to help you with the future (this is where the term recurrent comes from). In other words, take an input (X) and compute an output (y) based on all previous inputs.

This concept is especially helpful when dealing with sequences such as passages of natural language text (such as our Tweets).

For example, when you read this sentence, you take into context the previous words when deciphering the meaning of the current word dog.

See what happened there?

I put the word "dog" at the end which is a valid word but it doesn't make sense in the context of the rest of the sentence.

When an RNN looks at a sequence of text (already in numerical form), the patterns it learns are continually updated based on the order of the sequence.

For a simple example, take two sentences:

- Massive earthquake last week, no?

- No massive earthquake last week.

Both contain exactly the same words but have different meaning. The order of the words determines the meaning (one could argue punctuation marks also dictate the meaning but for simplicity sake, let's stay focused on the words).

Recurrent neural networks can be used for a number of sequence-based problems:

- One to one: one input, one output, such as image classification.

- One to many: one input, many outputs, such as image captioning (image input, a sequence of text as caption output).

- Many to one: many inputs, one outputs, such as text classification (classifying a Tweet as real diaster or not real diaster).

- Many to many: many inputs, many outputs, such as machine translation (translating English to Spanish) or speech to text (audio wave as input, text as output).

When you come across RNN's in the wild, you'll most likely come across variants of the following:

- Long short-term memory cells (LSTMs).

- Gated recurrent units (GRUs).

- Bidirectional RNN's (passes forward and backward along a sequence, left to right and right to left).

Going into the details of each these is beyond the scope of this notebook (we're going to focus on using them instead), the main thing you should know for now is that they've proven very effective at modelling sequences.

For a deeper understanding of what's happening behind the scenes of the code we're about to write, I'd recommend the following resources:

📖 Resources:

- MIT Deep Learning Lecture on Recurrent Neural Networks - explains the background of recurrent neural networks and introduces LSTMs.

- The Unreasonable Effectiveness of Recurrent Neural Networks by Andrej Karpathy - demonstrates the power of RNN's with examples generating various sequences.

- Understanding LSTMs by Chris Olah - an in-depth (and technical) look at the mechanics of the LSTM cell, possibly the most popular RNN building block.

Model 2: LSTM¶

With all this talk of what RNN's are and what they're good for, I'm sure you're eager to build one.

We're going to start with an LSTM-powered RNN.

To harness the power of the LSTM cell (LSTM cell and LSTM layer are often used interchangably) in TensorFlow, we'll use tensorflow.keras.layers.LSTM().

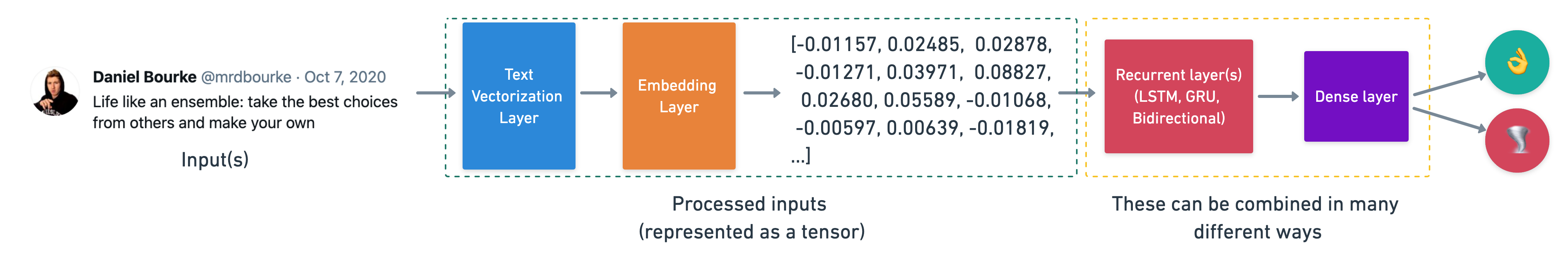

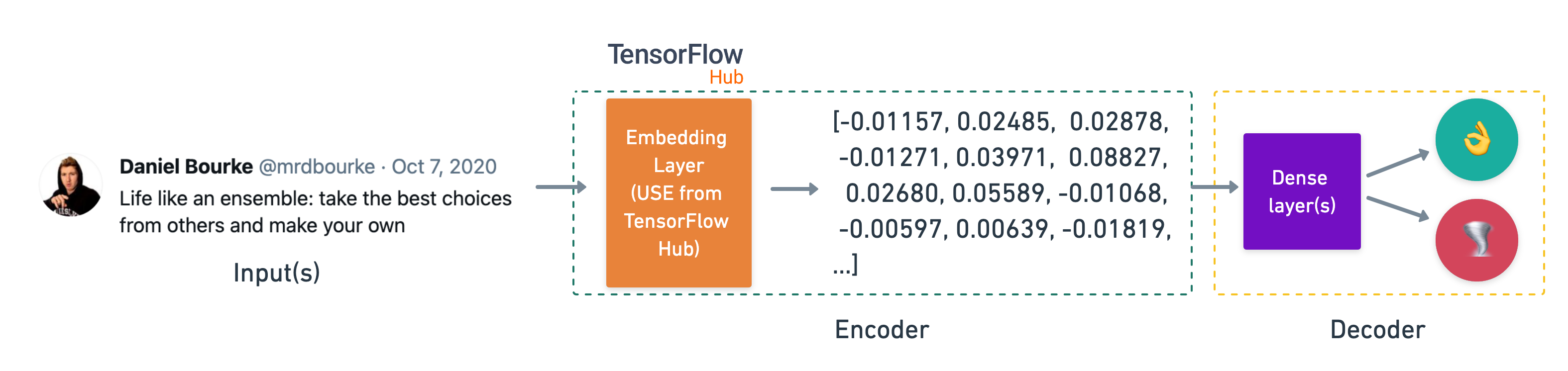

Coloured block example of the structure of an recurrent neural network.

Coloured block example of the structure of an recurrent neural network.

Our model is going to take on a very similar structure to model_1:

Input (text) -> Tokenize -> Embedding -> Layers -> Output (label probability)

The main difference will be that we're going to add an LSTM layer between our embedding and output.

And to make sure we're not getting reusing trained embeddings (this would involve data leakage between models, leading to an uneven comparison later on), we'll create another embedding layer (model_2_embedding) for our model. The text_vectorizer layer can be reused since it doesn't get updated during training.

🔑 Note: The reason we use a new embedding layer for each model is since the embedding layer is a learned representation of words (as numbers), if we were to use the same embedding layer (

embedding_1) for each model, we'd be mixing what one model learned with the next. And because we want to compare our models later on, starting them with their own embedding layer each time is a better idea.

# Set random seed and create embedding layer (new embedding layer for each model)

tf.random.set_seed(42)

from tensorflow.keras import layers

model_2_embedding = layers.Embedding(input_dim=max_vocab_length,

output_dim=128,

embeddings_initializer="uniform",

input_length=max_length,

name="embedding_2")

# Create LSTM model

inputs = layers.Input(shape=(1,), dtype="string")

x = text_vectorizer(inputs)

x = model_2_embedding(x)

print(x.shape)

# x = layers.LSTM(64, return_sequences=True)(x) # return vector for each word in the Tweet (you can stack RNN cells as long as return_sequences=True)

x = layers.LSTM(64)(x) # return vector for whole sequence

print(x.shape)

# x = layers.Dense(64, activation="relu")(x) # optional dense layer on top of output of LSTM cell

outputs = layers.Dense(1, activation="sigmoid")(x)

model_2 = tf.keras.Model(inputs, outputs, name="model_2_LSTM")

(None, 15, 128) (None, 64)

🔑 Note: Reading the documentation for the TensorFlow LSTM layer, you'll find a plethora of parameters. Many of these have been tuned to make sure they compute as fast as possible. The main ones you'll be looking to adjust are

units(number of hidden units) andreturn_sequences(set this toTruewhen stacking LSTM or other recurrent layers).

Now we've got our LSTM model built, let's compile it using "binary_crossentropy" loss and the Adam optimizer.

# Compile model

model_2.compile(loss="binary_crossentropy",

optimizer=tf.keras.optimizers.Adam(),

metrics=["accuracy"])

And before we fit our model to the data, let's get a summary.

model_2.summary()

Model: "model_2_LSTM"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_2 (InputLayer) [(None, 1)] 0

text_vectorization_1 (TextV (None, 15) 0

ectorization)

embedding_2 (Embedding) (None, 15, 128) 1280000

lstm (LSTM) (None, 64) 49408

dense_1 (Dense) (None, 1) 65

=================================================================

Total params: 1,329,473

Trainable params: 1,329,473

Non-trainable params: 0

_________________________________________________________________

Looking good! You'll notice a fair few more trainable parameters within our LSTM layer than model_1.

If you'd like to know where this number comes from, I recommend going through the above resources as well the following on calculating the number of parameters in an LSTM cell:

- Stack Overflow answer to calculate the number of parameters in an LSTM cell by Marcin Możejko

- Calculating number of parameters in a LSTM unit and layer by Shridhar Priyadarshi

Now our first RNN model's compiled let's fit it to our training data, validating it on the validation data and tracking its training parameters using our TensorBoard callback.

# Fit model

model_2_history = model_2.fit(train_sentences,

train_labels,

epochs=5,

validation_data=(val_sentences, val_labels),

callbacks=[create_tensorboard_callback(SAVE_DIR,

"LSTM")])

Saving TensorBoard log files to: model_logs/LSTM/20230526-001518 Epoch 1/5 215/215 [==============================] - 13s 44ms/step - loss: 0.5074 - accuracy: 0.7460 - val_loss: 0.4590 - val_accuracy: 0.7743 Epoch 2/5 215/215 [==============================] - 2s 10ms/step - loss: 0.3168 - accuracy: 0.8716 - val_loss: 0.5119 - val_accuracy: 0.7756 Epoch 3/5 215/215 [==============================] - 2s 10ms/step - loss: 0.2198 - accuracy: 0.9155 - val_loss: 0.5876 - val_accuracy: 0.7677 Epoch 4/5 215/215 [==============================] - 2s 8ms/step - loss: 0.1577 - accuracy: 0.9442 - val_loss: 0.5923 - val_accuracy: 0.7795 Epoch 5/5 215/215 [==============================] - 2s 8ms/step - loss: 0.1108 - accuracy: 0.9577 - val_loss: 0.8550 - val_accuracy: 0.7559

Nice! We've got our first trained RNN model using LSTM cells. Let's make some predictions with it.

The same thing will happen as before, due to the sigmoid activiation function in the final layer, when we call the predict() method on our model, it'll return prediction probabilities rather than classes.

# Make predictions on the validation dataset

model_2_pred_probs = model_2.predict(val_sentences)

model_2_pred_probs.shape, model_2_pred_probs[:10] # view the first 10

24/24 [==============================] - 0s 2ms/step

((762, 1),

array([[0.00630066],

[0.7862389 ],

[0.9991792 ],

[0.06841089],

[0.00448257],

[0.99932086],

[0.8617405 ],

[0.99968505],

[0.9993248 ],

[0.57989997]], dtype=float32))

We can turn these prediction probabilities into prediction classes by rounding to the nearest integer (by default, prediction probabilities under 0.5 will go to 0 and those over 0.5 will go to 1).

# Round out predictions and reduce to 1-dimensional array

model_2_preds = tf.squeeze(tf.round(model_2_pred_probs))

model_2_preds[:10]

<tf.Tensor: shape=(10,), dtype=float32, numpy=array([0., 1., 1., 0., 0., 1., 1., 1., 1., 1.], dtype=float32)>

Beautiful, now let's use our caculate_results() function to evaluate our LSTM model and our compare_baseline_to_new_results() function to compare it to our baseline model.

# Calculate LSTM model results

model_2_results = calculate_results(y_true=val_labels,

y_pred=model_2_preds)

model_2_results

{'accuracy': 75.59055118110236,

'precision': 0.7567160722556739,

'recall': 0.7559055118110236,

'f1': 0.7539595513230887}

# Compare model 2 to baseline

compare_baseline_to_new_results(baseline_results, model_2_results)

Baseline accuracy: 79.27, New accuracy: 75.59, Difference: -3.67 Baseline precision: 0.81, New precision: 0.76, Difference: -0.05 Baseline recall: 0.79, New recall: 0.76, Difference: -0.04 Baseline f1: 0.79, New f1: 0.75, Difference: -0.03

Model 3: GRU¶

Another popular and effective RNN component is the GRU or gated recurrent unit.

The GRU cell has similar features to an LSTM cell but has less parameters.

📖 Resource: A full explanation of the GRU cell is beyond the scope of this noteook but I'd suggest the following resources to learn more:

- Gated Recurrent Unit Wikipedia page

- Understanding GRU networks by Simeon Kostadinov

To use the GRU cell in TensorFlow, we can call the tensorflow.keras.layers.GRU() class.

The architecture of the GRU-powered model will follow the same structure we've been using:

Input (text) -> Tokenize -> Embedding -> Layers -> Output (label probability)

Again, the only difference will be the layer(s) we use between the embedding and the output.

# Set random seed and create embedding layer (new embedding layer for each model)

tf.random.set_seed(42)

from tensorflow.keras import layers

model_3_embedding = layers.Embedding(input_dim=max_vocab_length,

output_dim=128,

embeddings_initializer="uniform",

input_length=max_length,

name="embedding_3")

# Build an RNN using the GRU cell

inputs = layers.Input(shape=(1,), dtype="string")

x = text_vectorizer(inputs)

x = model_3_embedding(x)

# x = layers.GRU(64, return_sequences=True) # stacking recurrent cells requires return_sequences=True

x = layers.GRU(64)(x)

# x = layers.Dense(64, activation="relu")(x) # optional dense layer after GRU cell

outputs = layers.Dense(1, activation="sigmoid")(x)

model_3 = tf.keras.Model(inputs, outputs, name="model_3_GRU")

TensorFlow makes it easy to use powerful components such as the GRU cell in our models. And now our third model is built, let's compile it, just as before.

# Compile GRU model

model_3.compile(loss="binary_crossentropy",

optimizer=tf.keras.optimizers.Adam(),

metrics=["accuracy"])

What does a summary of our model look like?

# Get a summary of the GRU model

model_3.summary()

Model: "model_3_GRU"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_3 (InputLayer) [(None, 1)] 0

text_vectorization_1 (TextV (None, 15) 0

ectorization)

embedding_3 (Embedding) (None, 15, 128) 1280000

gru (GRU) (None, 64) 37248

dense_2 (Dense) (None, 1) 65

=================================================================

Total params: 1,317,313

Trainable params: 1,317,313

Non-trainable params: 0

_________________________________________________________________

Notice the difference in number of trainable parameters between model_2 (LSTM) and model_3 (GRU). The difference comes from the LSTM cell having more trainable parameters than the GRU cell.

We'll fit our model just as we've been doing previously. We'll also track our models results using our create_tensorboard_callback() function.

# Fit model

model_3_history = model_3.fit(train_sentences,

train_labels,

epochs=5,

validation_data=(val_sentences, val_labels),

callbacks=[create_tensorboard_callback(SAVE_DIR, "GRU")])

Saving TensorBoard log files to: model_logs/GRU/20230526-001539 Epoch 1/5 215/215 [==============================] - 11s 43ms/step - loss: 0.5274 - accuracy: 0.7231 - val_loss: 0.4539 - val_accuracy: 0.7795 Epoch 2/5 215/215 [==============================] - 2s 10ms/step - loss: 0.3179 - accuracy: 0.8686 - val_loss: 0.4850 - val_accuracy: 0.7848 Epoch 3/5 215/215 [==============================] - 2s 9ms/step - loss: 0.2149 - accuracy: 0.9187 - val_loss: 0.5544 - val_accuracy: 0.7717 Epoch 4/5 215/215 [==============================] - 2s 8ms/step - loss: 0.1517 - accuracy: 0.9488 - val_loss: 0.6279 - val_accuracy: 0.7835 Epoch 5/5 215/215 [==============================] - 2s 8ms/step - loss: 0.1145 - accuracy: 0.9609 - val_loss: 0.6063 - val_accuracy: 0.7756

Due to the optimized default settings of the GRU cell in TensorFlow, training doesn't take long at all.

Time to make some predictions on the validation samples.

# Make predictions on the validation data

model_3_pred_probs = model_3.predict(val_sentences)

model_3_pred_probs.shape, model_3_pred_probs[:10]

24/24 [==============================] - 0s 2ms/step

((762, 1),

array([[0.31703022],

[0.9160779 ],

[0.9977792 ],

[0.14830083],

[0.01086212],

[0.9908326 ],

[0.6938264 ],

[0.9978917 ],

[0.99662066],

[0.4299642 ]], dtype=float32))

Again we get an array of prediction probabilities back which we can convert to prediction classes by rounding them.

# Convert prediction probabilities to prediction classes

model_3_preds = tf.squeeze(tf.round(model_3_pred_probs))

model_3_preds[:10]

<tf.Tensor: shape=(10,), dtype=float32, numpy=array([0., 1., 1., 0., 0., 1., 1., 1., 1., 0.], dtype=float32)>

Now we've got predicted classes, let's evaluate them against the ground truth labels.

# Calcuate model_3 results

model_3_results = calculate_results(y_true=val_labels,

y_pred=model_3_preds)

model_3_results

{'accuracy': 77.55905511811024,

'precision': 0.776326889347514,

'recall': 0.7755905511811023,

'f1': 0.7740902496040959}

Finally we can compare our GRU model's results to our baseline.

# Compare to baseline

compare_baseline_to_new_results(baseline_results, model_3_results)

Baseline accuracy: 79.27, New accuracy: 77.56, Difference: -1.71 Baseline precision: 0.81, New precision: 0.78, Difference: -0.03 Baseline recall: 0.79, New recall: 0.78, Difference: -0.02 Baseline f1: 0.79, New f1: 0.77, Difference: -0.01

Model 4: Bidirectonal RNN model¶

Look at us go! We've already built two RNN's with GRU and LSTM cells. Now we're going to look into another kind of RNN, the bidirectional RNN.

A standard RNN will process a sequence from left to right, where as a bidirectional RNN will process the sequence from left to right and then again from right to left.

Intuitively, this can be thought of as if you were reading a sentence for the first time in the normal fashion (left to right) but for some reason it didn't make sense so you traverse back through the words and go back over them again (right to left).

In practice, many sequence models often see and improvement in performance when using bidirectional RNN's.

However, this improvement in performance often comes at the cost of longer training times and increased model parameters (since the model goes left to right and right to left, the number of trainable parameters doubles).

Okay enough talk, let's build a bidirectional RNN.

Once again, TensorFlow helps us out by providing the tensorflow.keras.layers.Bidirectional class. We can use the Bidirectional class to wrap our existing RNNs, instantly making them bidirectional.

# Set random seed and create embedding layer (new embedding layer for each model)

tf.random.set_seed(42)

from tensorflow.keras import layers

model_4_embedding = layers.Embedding(input_dim=max_vocab_length,

output_dim=128,

embeddings_initializer="uniform",

input_length=max_length,

name="embedding_4")

# Build a Bidirectional RNN in TensorFlow

inputs = layers.Input(shape=(1,), dtype="string")

x = text_vectorizer(inputs)

x = model_4_embedding(x)

# x = layers.Bidirectional(layers.LSTM(64, return_sequences=True))(x) # stacking RNN layers requires return_sequences=True

x = layers.Bidirectional(layers.LSTM(64))(x) # bidirectional goes both ways so has double the parameters of a regular LSTM layer

outputs = layers.Dense(1, activation="sigmoid")(x)

model_4 = tf.keras.Model(inputs, outputs, name="model_4_Bidirectional")

🔑 Note: You can use the

Bidirectionalwrapper on any RNN cell in TensorFlow. For example,layers.Bidirectional(layers.GRU(64))creates a bidirectional GRU cell.

Our bidirectional model is built, let's compile it.

# Compile

model_4.compile(loss="binary_crossentropy",

optimizer=tf.keras.optimizers.Adam(),

metrics=["accuracy"])

And of course, we'll check out a summary.

# Get a summary of our bidirectional model

model_4.summary()

Model: "model_4_Bidirectional"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_4 (InputLayer) [(None, 1)] 0

text_vectorization_1 (TextV (None, 15) 0

ectorization)

embedding_4 (Embedding) (None, 15, 128) 1280000

bidirectional (Bidirectiona (None, 128) 98816

l)

dense_3 (Dense) (None, 1) 129

=================================================================

Total params: 1,378,945

Trainable params: 1,378,945

Non-trainable params: 0

_________________________________________________________________

Notice the increased number of trainable parameters in model_4 (bidirectional LSTM) compared to model_2 (regular LSTM). This is due to the bidirectionality we added to our RNN.

Time to fit our bidirectional model and track its performance.

# Fit the model (takes longer because of the bidirectional layers)

model_4_history = model_4.fit(train_sentences,

train_labels,

epochs=5,

validation_data=(val_sentences, val_labels),

callbacks=[create_tensorboard_callback(SAVE_DIR, "bidirectional_RNN")])

Saving TensorBoard log files to: model_logs/bidirectional_RNN/20230526-001559 Epoch 1/5 215/215 [==============================] - 14s 47ms/step - loss: 0.5096 - accuracy: 0.7447 - val_loss: 0.4585 - val_accuracy: 0.7861 Epoch 2/5 215/215 [==============================] - 2s 12ms/step - loss: 0.3140 - accuracy: 0.8726 - val_loss: 0.5086 - val_accuracy: 0.7743 Epoch 3/5 215/215 [==============================] - 2s 11ms/step - loss: 0.2139 - accuracy: 0.9183 - val_loss: 0.5716 - val_accuracy: 0.7730 Epoch 4/5 215/215 [==============================] - 2s 9ms/step - loss: 0.1486 - accuracy: 0.9504 - val_loss: 0.6707 - val_accuracy: 0.7703 Epoch 5/5 215/215 [==============================] - 2s 10ms/step - loss: 0.1058 - accuracy: 0.9648 - val_loss: 0.6658 - val_accuracy: 0.7677

Due to the bidirectionality of our model we see a slight increase in training time.

Not to worry, it's not too dramatic of an increase.

Let's make some predictions with it.

# Make predictions with bidirectional RNN on the validation data

model_4_pred_probs = model_4.predict(val_sentences)

model_4_pred_probs[:10]

24/24 [==============================] - 1s 3ms/step

array([[0.05258294],

[0.8495521 ],

[0.99898857],

[0.15441437],

[0.00566462],

[0.99576193],

[0.952807 ],

[0.9993511 ],

[0.99936384],

[0.19425693]], dtype=float32)

And we'll convert them to prediction classes and evaluate them against the ground truth labels and baseline model.

# Convert prediction probabilities to labels

model_4_preds = tf.squeeze(tf.round(model_4_pred_probs))

model_4_preds[:10]

<tf.Tensor: shape=(10,), dtype=float32, numpy=array([0., 1., 1., 0., 0., 1., 1., 1., 1., 0.], dtype=float32)>

# Calculate bidirectional RNN model results

model_4_results = calculate_results(val_labels, model_4_preds)

model_4_results

{'accuracy': 76.77165354330708,

'precision': 0.7675450859410361,

'recall': 0.7677165354330708,

'f1': 0.7667932666650168}

# Check to see how the bidirectional model performs against the baseline

compare_baseline_to_new_results(baseline_results, model_4_results)

Baseline accuracy: 79.27, New accuracy: 76.77, Difference: -2.49 Baseline precision: 0.81, New precision: 0.77, Difference: -0.04 Baseline recall: 0.79, New recall: 0.77, Difference: -0.02 Baseline f1: 0.79, New f1: 0.77, Difference: -0.02

Convolutional Neural Networks for Text¶

You might've used convolutional neural networks (CNNs) for images before but they can also be used for sequences.

The main difference between using CNNs for images and sequences is the shape of the data. Images come in 2-dimensions (height x width) where as sequences are often 1-dimensional (a string of text).

So to use CNNs with sequences, we use a 1-dimensional convolution instead of a 2-dimensional convolution.

A typical CNN architecture for sequences will look like the following:

Inputs (text) -> Tokenization -> Embedding -> Layers -> Outputs (class probabilities)

You might be thinking "that just looks like the architecture layout we've been using for the other models..."

And you'd be right.

The difference again is in the layers component. Instead of using an LSTM or GRU cell, we're going to use a tensorflow.keras.layers.Conv1D() layer followed by a tensorflow.keras.layers.GlobablMaxPool1D() layer.

- 1-dimensional convolving filters are used as ngram detectors, each filter specializing in a closely-related family of ngrams (an ngram is a collection of n-words, for example, an ngram of 5 might result in "hello, my name is Daniel").

- Max-pooling over time extracts the relevant ngrams for making a decision.

- The rest of the network classifies the text based on this information.

📖 Resource: The intuition here is explained succinctly in the paper Understanding Convolutional Neural Networks for Text Classification, where they state that CNNs classify text through the following steps:

Model 5: Conv1D¶

Before we build a full 1-dimensional CNN model, let's see a 1-dimensional convolutional layer (also called a temporal convolution) in action.

We'll first create an embedding of a sample of text and experiment passing it through a Conv1D() layer and GlobalMaxPool1D() layer.

# Test out the embedding, 1D convolutional and max pooling

embedding_test = embedding(text_vectorizer(["this is a test sentence"])) # turn target sentence into embedding

conv_1d = layers.Conv1D(filters=32, kernel_size=5, activation="relu") # convolve over target sequence 5 words at a time

conv_1d_output = conv_1d(embedding_test) # pass embedding through 1D convolutional layer

max_pool = layers.GlobalMaxPool1D()

max_pool_output = max_pool(conv_1d_output) # get the most important features

embedding_test.shape, conv_1d_output.shape, max_pool_output.shape

(TensorShape([1, 15, 128]), TensorShape([1, 11, 32]), TensorShape([1, 32]))

Notice the output shapes of each layer.

The embedding has an output shape dimension of the parameters we set it to (input_length=15 and output_dim=128).

The 1-dimensional convolutional layer has an output which has been compressed inline with its parameters. And the same goes for the max pooling layer output.

Our text starts out as a string but gets converted to a feature vector of length 64 through various transformation steps (from tokenization to embedding to 1-dimensional convolution to max pool).

Let's take a peak at what each of these transformations looks like.

# See the outputs of each layer

embedding_test[:1], conv_1d_output[:1], max_pool_output[:1]

(<tf.Tensor: shape=(1, 15, 128), dtype=float32, numpy=

array([[[ 0.01675646, -0.03352517, 0.04817378, ..., -0.02946043,

-0.03770737, 0.01220698],

[-0.00607298, 0.06020833, -0.05641982, ..., 0.08325578,

-0.01878556, -0.08398241],

[-0.0362346 , 0.00904451, -0.03833614, ..., 0.0051756 ,

-0.00220015, -0.0017492 ],

...,

[-0.01078545, 0.05590528, 0.03125916, ..., -0.0312557 ,

-0.05340781, -0.03800201],

[-0.01078545, 0.05590528, 0.03125916, ..., -0.0312557 ,

-0.05340781, -0.03800201],

[-0.01078545, 0.05590528, 0.03125916, ..., -0.0312557 ,

-0.05340781, -0.03800201]]], dtype=float32)>,

<tf.Tensor: shape=(1, 11, 32), dtype=float32, numpy=

array([[[0. , 0.10975833, 0. , 0. , 0. ,

0.06834612, 0. , 0.02298634, 0. , 0. ,

0. , 0. , 0. , 0. , 0.06889185,

0.08162662, 0. , 0. , 0.03804683, 0. ,

0. , 0. , 0. , 0.00810859, 0.02383356,

0. , 0.00385817, 0. , 0.01310921, 0. ,

0. , 0.16110645],

[0.05000008, 0. , 0.03852113, 0.0149918 , 0.03014192,

0.04613257, 0. , 0. , 0. , 0.05233994,

0. , 0. , 0.07095916, 0.03590994, 0. ,

0. , 0. , 0. , 0. , 0.05599808,

0.04344876, 0.04021783, 0. , 0.06110618, 0. ,

0. , 0. , 0.00198402, 0. , 0.03175152,

0. , 0.04452901],

[0. , 0.05068349, 0.06747732, 0. , 0. ,

0.04893802, 0. , 0. , 0. , 0. ,

0. , 0. , 0.0853087 , 0.01114925, 0.00223987,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0.10797694, 0.02317763,

0. , 0.01130794, 0. , 0.01777459, 0. ,

0. , 0.02142338],

[0. , 0.01030538, 0. , 0. , 0.02127263,

0.06377578, 0. , 0. , 0. , 0. ,

0.03660904, 0. , 0.13293687, 0.06086106, 0. ,

0. , 0. , 0.03161986, 0.00114628, 0.02163697,

0. , 0. , 0. , 0.04408561, 0. ,