10. Milestone Project 3: Time series forecasting in TensorFlow (BitPredict 💰📈)¶

The goal of this notebook is to get you familiar with working with time series data.

We're going to be building a series of models in an attempt to predict the price of Bitcoin.

Welcome to Milestone Project 3, BitPredict 💰📈!

🔑 Note: ⚠️ This is not financial advice. As you'll see, time series forecasting for stock market prices is actually quite terrible.

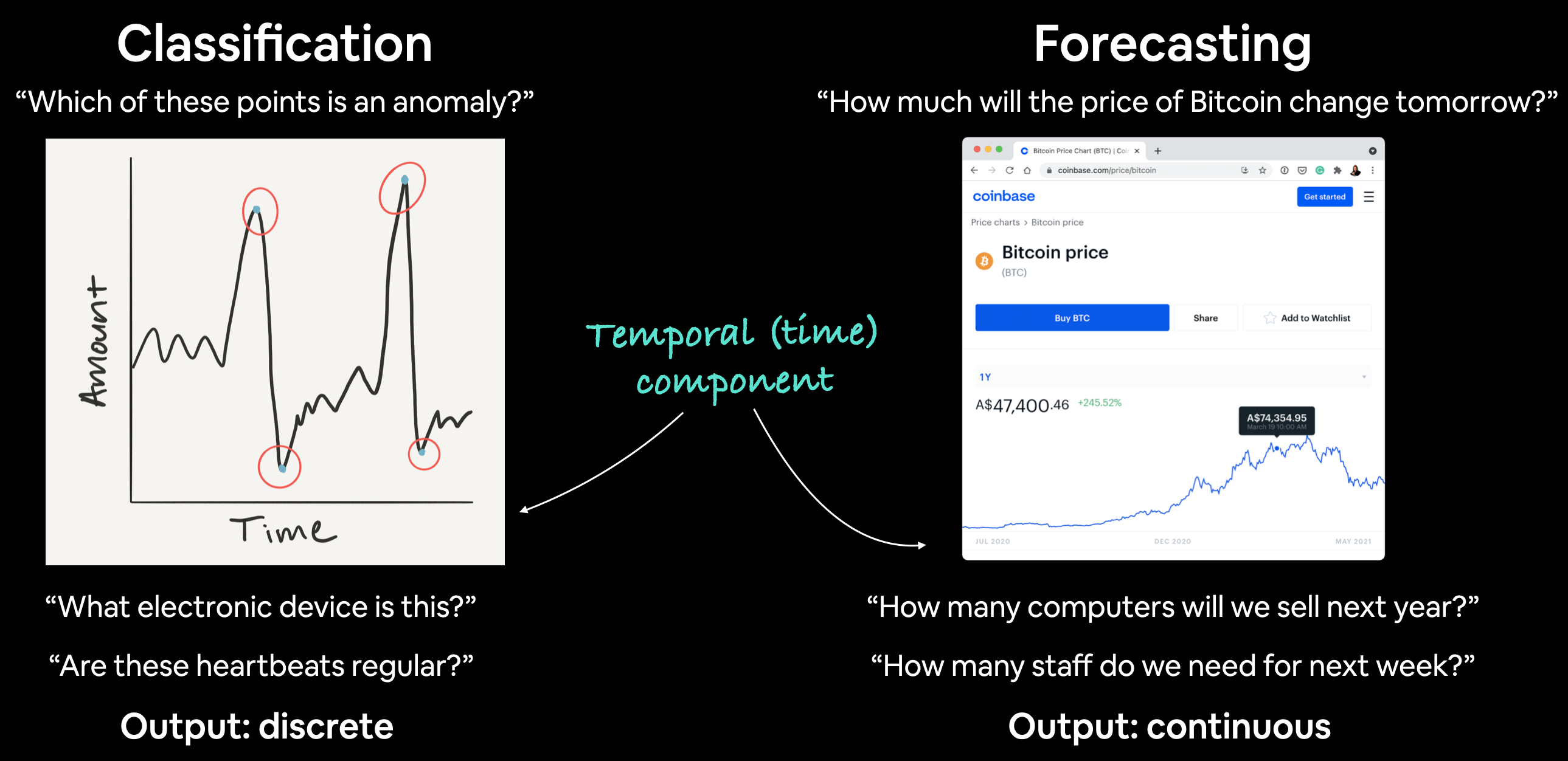

What is a time series problem?¶

Time series problems deal with data over time.

Examples include the number of staff members in a company over 10 years, sales of computers for the past 5 years, and electricity usage for the past 50 years.

The timeline can be short (seconds/minutes) or long (years/decades). And the problems you might investigate using time series data can usually be broken down into two categories.

| Problem Type | Examples | Output |

|---|---|---|

| Classification | Anomaly detection, time series identification (where did this time series come from?) | Discrete (a label) |

| Forecasting | Predicting stock market prices, forecasting future demand for a product, stocking inventory requirements | Continuous (a number) |

In both cases above, a supervised learning approach is often used. Meaning, you'd have some example data and a label associated with that data.

For example, in forecasting the price of Bitcoin, your data could be the historical price of Bitcoin for the past month and the label could be today's price (the label can't be tomorrow's price because that's what we'd want to predict).

Can you guess what kind of problem BitPredict 💰📈 is?

What we're going to cover¶

Are you ready?

We've got a lot to go through.

- Get time series data (the historical price of Bitcoin)

- Load in time series data using pandas/Python's CSV module

- Format data for a time series problem

- Creating training and test sets (the wrong way)

- Creating training and test sets (the right way)

- Visualizing time series data

- Turning time series data into a supervised learning problem (windowing)

- Preparing univariate and multivariate (more than one variable) data

- Evaluating a time series forecasting model

- Setting up a series of deep learning modelling experiments

- Dense (fully-connected) networks

- Sequence models (LSTM and 1D CNN)

- Ensembling (combining multiple models together)

- Multivariate models

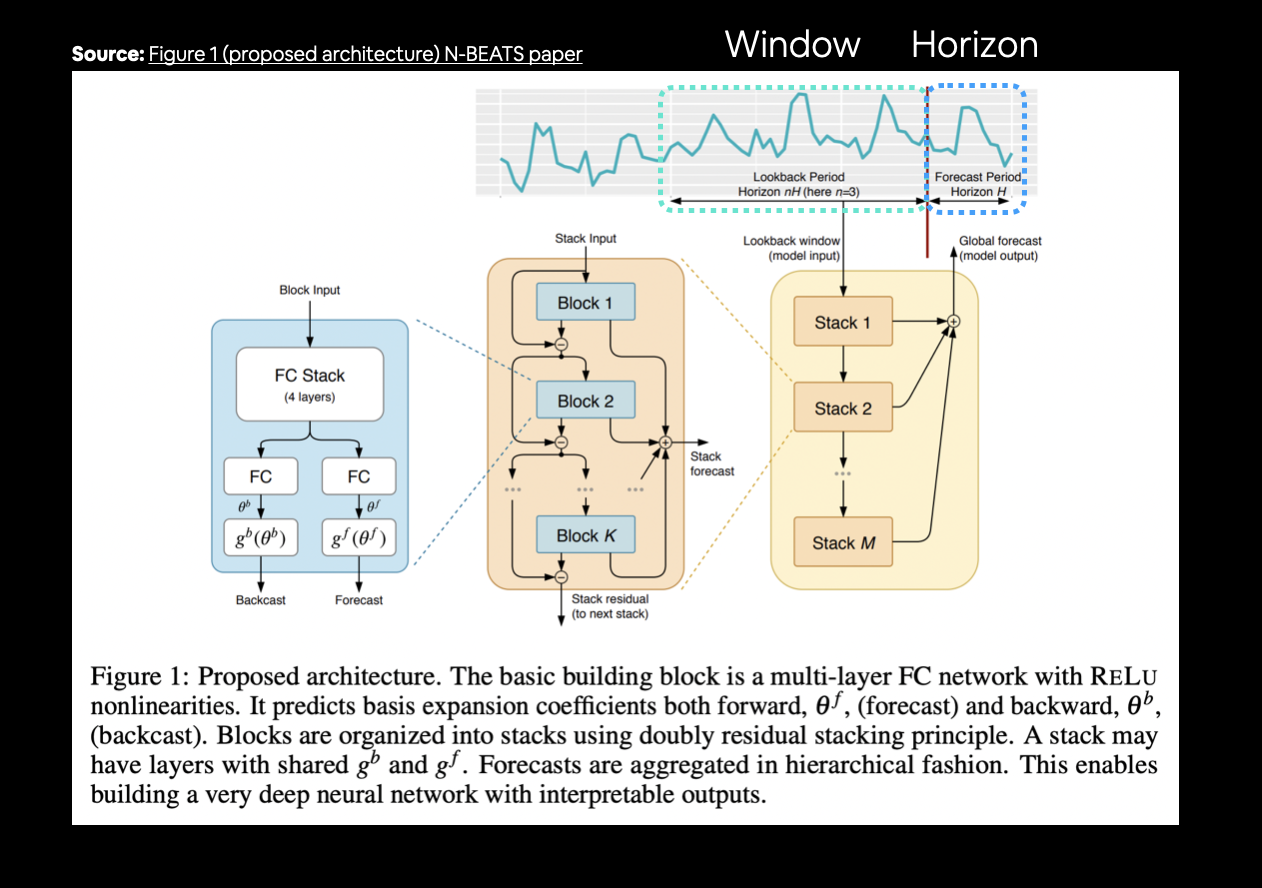

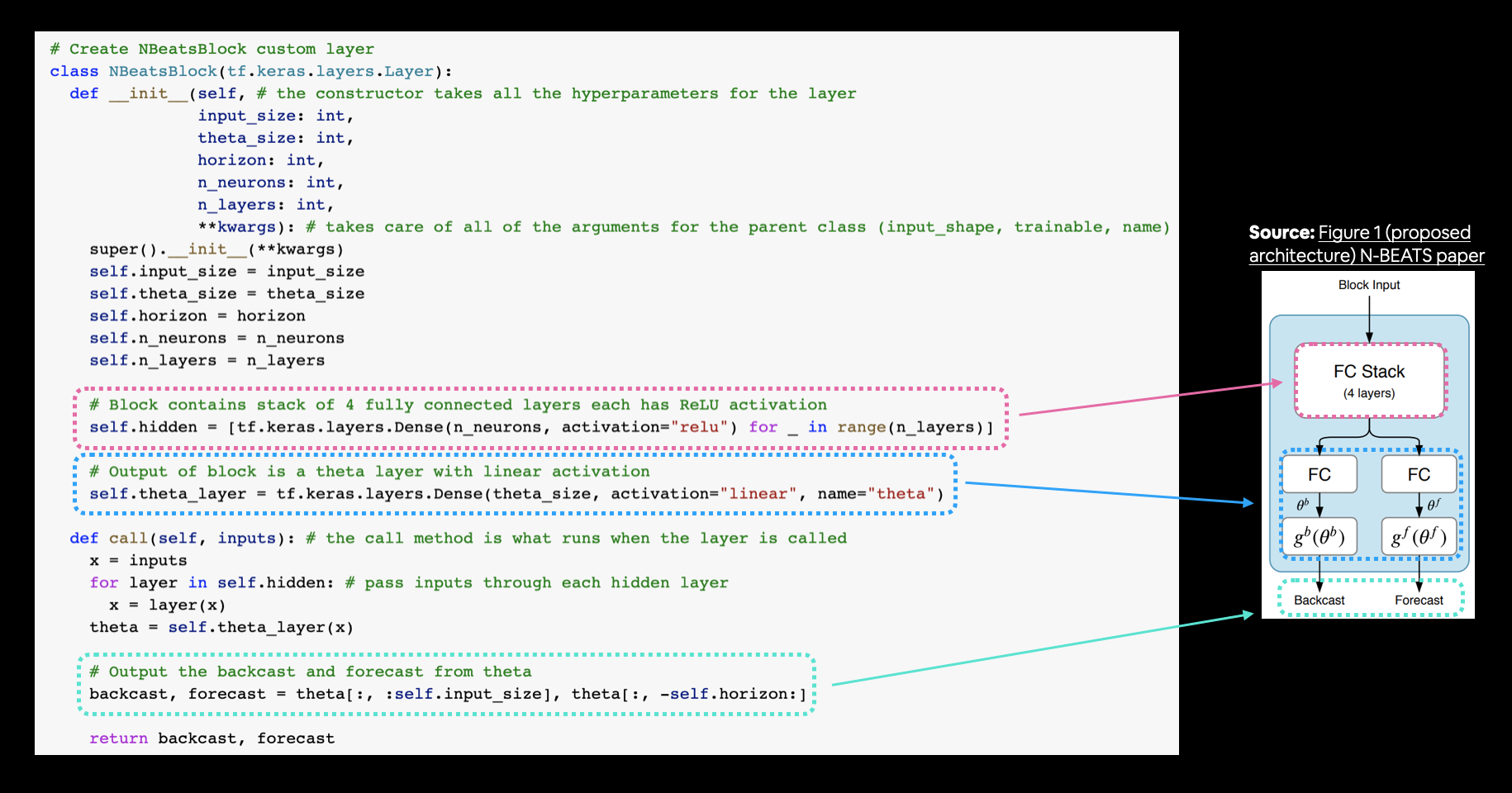

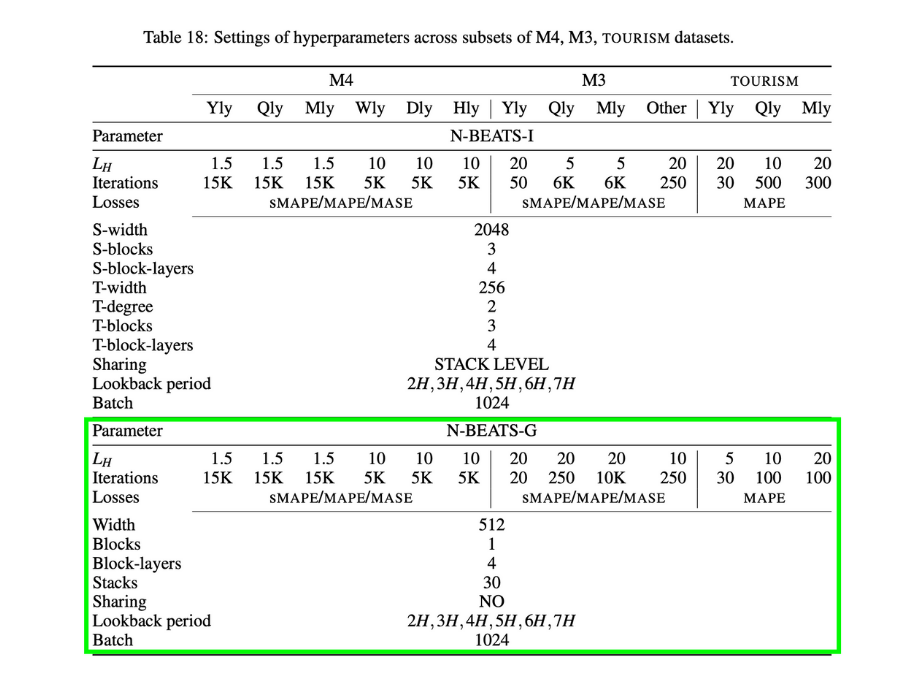

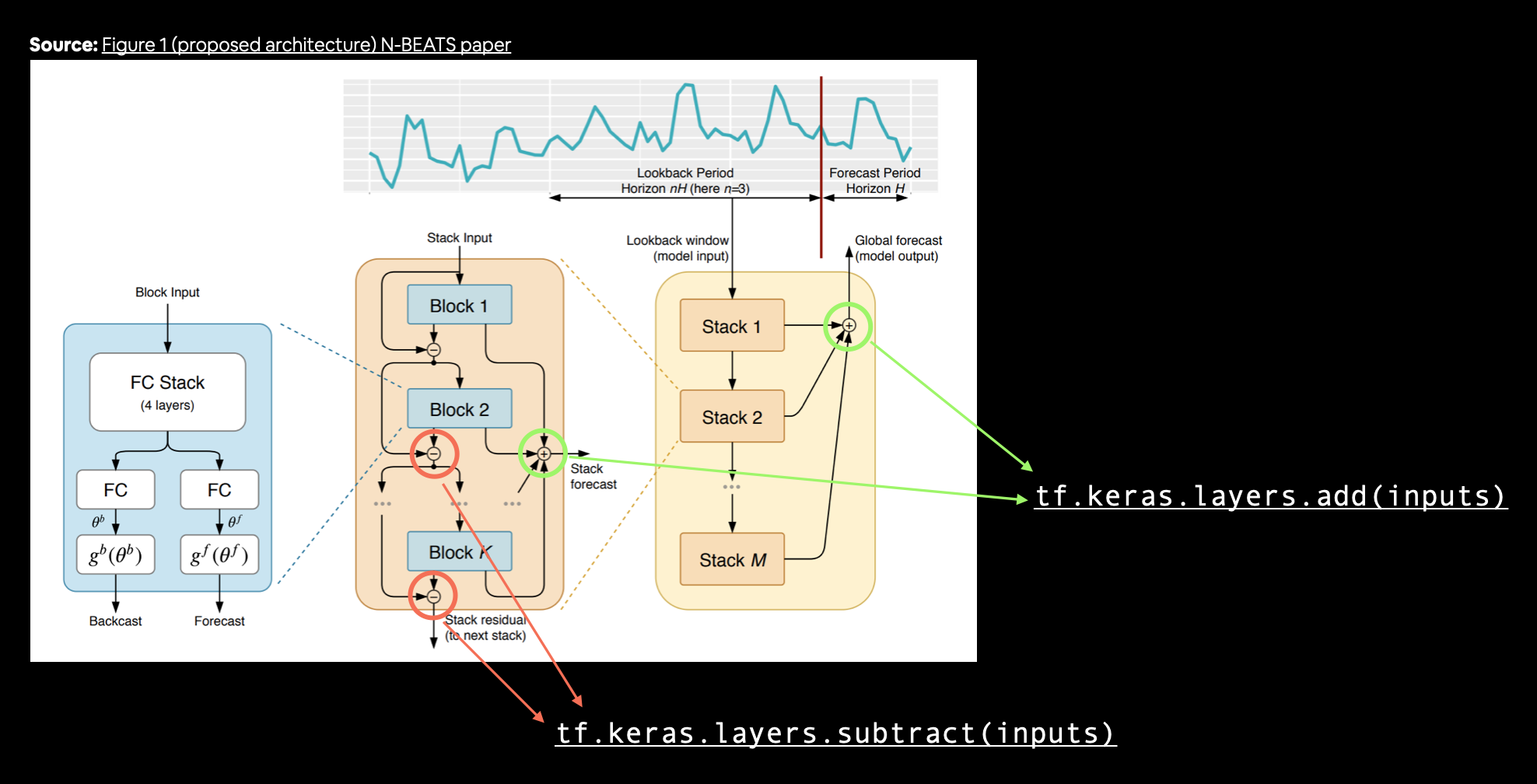

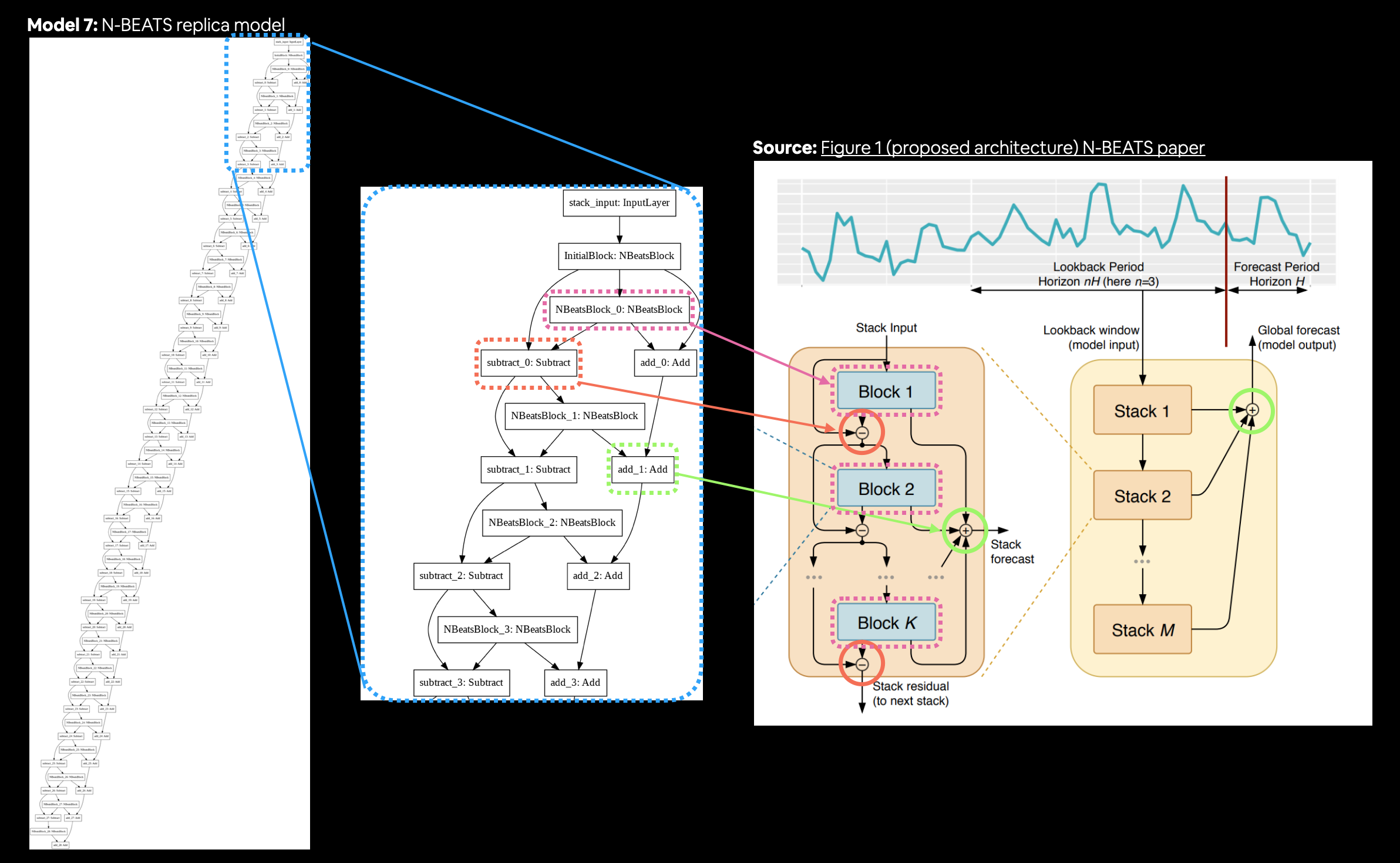

- Replicating the N-BEATS algorithm using TensorFlow layer subclassing

- Creating a modelling checkpoint to save the best performing model during training

- Making predictions (forecasts) with a time series model

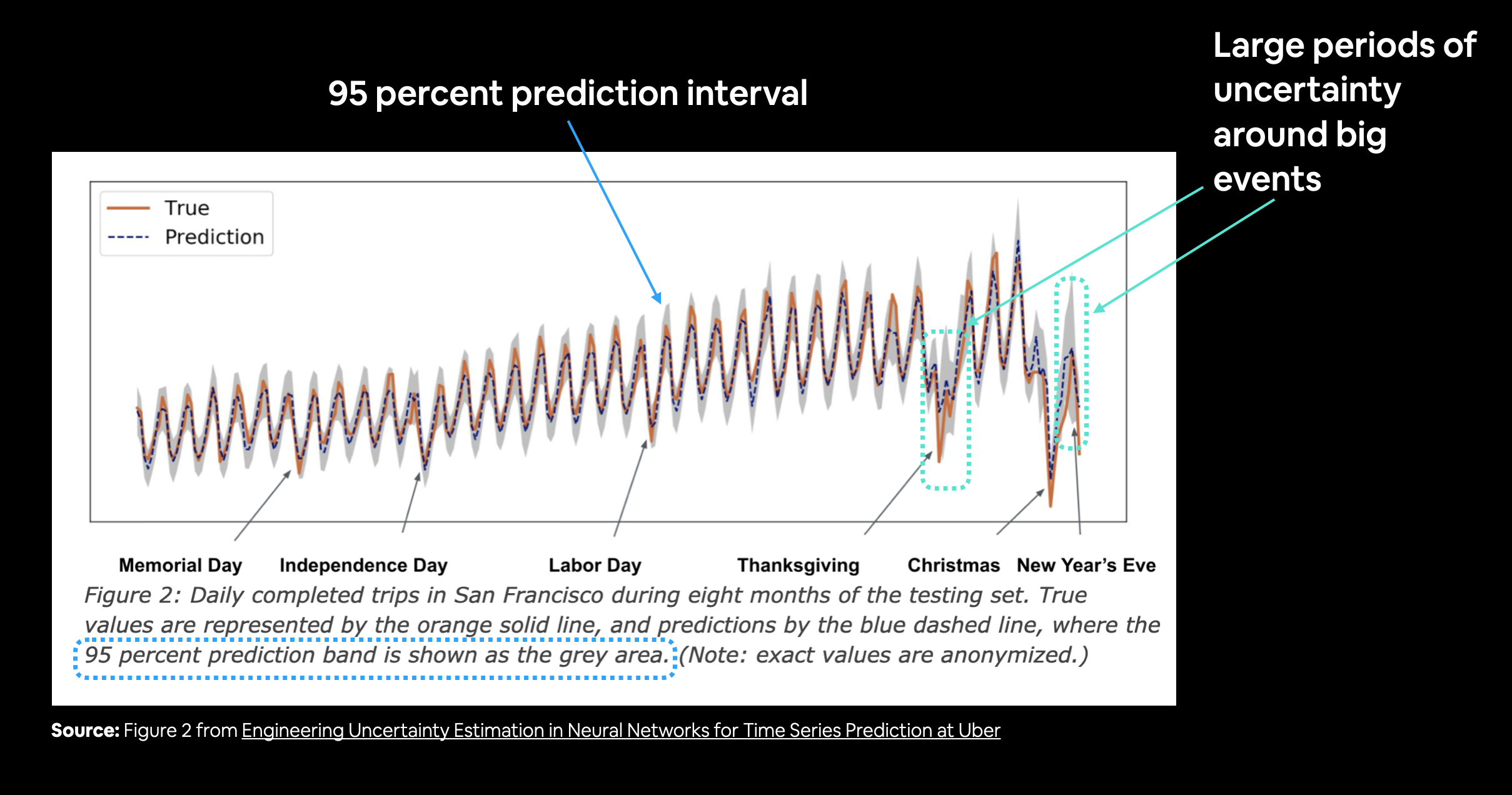

- Creating prediction intervals for time series model forecasts

- Discussing two different types of uncertainty in machine learning (data uncertainty and model uncertainty)

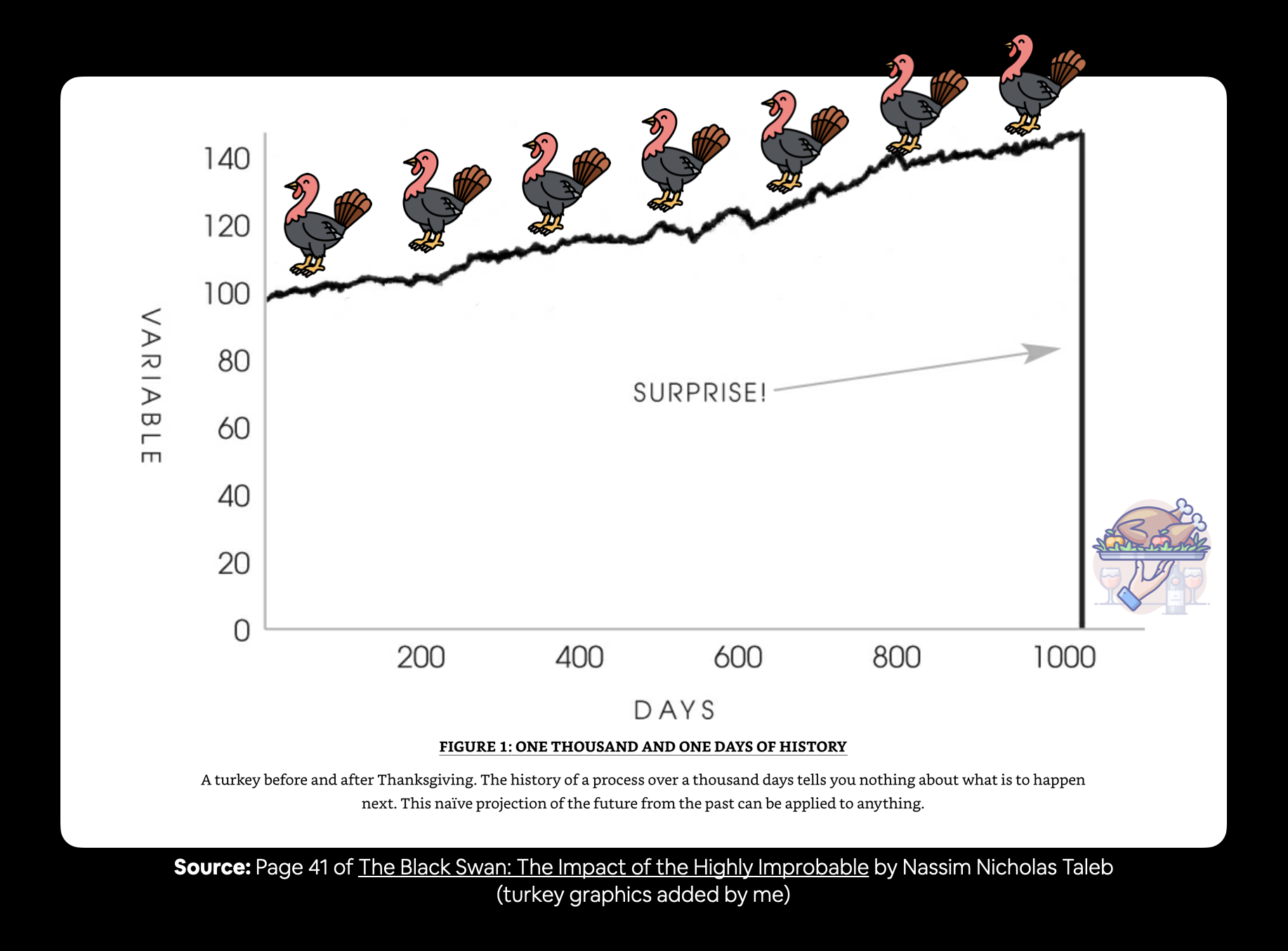

- Demonstrating why forecasting in an open system is BS (the turkey problem)

How you can use this notebook¶

You can read through the descriptions and the code (it should all run), but there's a better option.

Write all of the code yourself.

Yes. I'm serious. Create a new notebook, and rewrite each line by yourself. Investigate it, see if you can break it, why does it break?

You don't have to write the text descriptions but writing the code yourself is a great way to get hands-on experience.

Don't worry if you make mistakes, we all do. The way to get better and make fewer mistakes is to write more code.

📖 Resource: Get all of the materials you need for this notebook on the course GitHub.

Check for GPU¶

In order for our deep learning models to run as fast as possible, we'll need access to a GPU.

In Google Colab, you can set this up by going to Runtime -> Change runtime type -> Hardware accelerator -> GPU.

After selecting GPU, you may have to restart the runtime.

# Check for GPU

!nvidia-smi -L

GPU 0: Tesla K80 (UUID: GPU-c7456639-4229-1150-8316-e4197bf2c93e)

Get data¶

To build a time series forecasting model, the first thing we're going to need is data.

And since we're trying to predict the price of Bitcoin, we'll need Bitcoin data.

Specifically, we're going to get the prices of Bitcoin from 01 October 2013 to 18 May 2021.

Why these dates?

Because 01 October 2013 is when our data source (Coindesk) started recording the price of Bitcoin and 18 May 2021 is when this notebook was created.

If you're going through this notebook at a later date, you'll be able to use what you learn to predict later Bitcoin prices; you'll just have to adjust the data source.

📖 Resource: To get the Bitcoin historical data, I went to the Coindesk page for Bitcoin prices, clicked on "all" and then clicked on "Export data" and selected "CSV".

You can find the data we're going to use on GitHub.

# Download Bitcoin historical data from GitHub

# Note: you'll need to select "Raw" to download the data in the correct format

!wget https://raw.githubusercontent.com/mrdbourke/tensorflow-deep-learning/main/extras/BTC_USD_2013-10-01_2021-05-18-CoinDesk.csv

--2021-09-27 03:40:22-- https://raw.githubusercontent.com/mrdbourke/tensorflow-deep-learning/main/extras/BTC_USD_2013-10-01_2021-05-18-CoinDesk.csv

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.108.133, 185.199.109.133, 185.199.110.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.108.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 178509 (174K) [text/plain]

Saving to: ‘BTC_USD_2013-10-01_2021-05-18-CoinDesk.csv’

BTC_USD_2013-10-01_ 100%[===================>] 174.33K --.-KB/s in 0.01s

2021-09-27 03:40:22 (16.5 MB/s) - ‘BTC_USD_2013-10-01_2021-05-18-CoinDesk.csv’ saved [178509/178509]

Importing time series data with pandas¶

Now we've got some data to work with, let's import it using pandas so we can visualize it.

Because our data is in CSV (comma separated values) format (a very common data format for time series), we'll use the pandas read_csv() function.

And because our data has a date component, we'll tell pandas to parse the dates using the parse_dates parameter, passing it the name of our date column ("Date").

# Import with pandas

import pandas as pd

# Parse dates and set date column to index

df = pd.read_csv("/content/BTC_USD_2013-10-01_2021-05-18-CoinDesk.csv",

parse_dates=["Date"],

index_col=["Date"]) # parse the date column (tell pandas column 1 is a datetime)

df.head()

| Date | Currency | Closing Price (USD) | 24h Open (USD) | 24h High (USD) | 24h Low (USD) |

|---|---|---|---|---|---|

| 2013-10-01 | BTC | 123.65499 | 124.30466 | 124.75166 | 122.56349 |

| 2013-10-02 | BTC | 125.45500 | 123.65499 | 125.75850 | 123.63383 |

| 2013-10-03 | BTC | 108.58483 | 125.45500 | 125.66566 | 83.32833 |

| 2013-10-04 | BTC | 118.67466 | 108.58483 | 118.67500 | 107.05816 |

| 2013-10-05 | BTC | 121.33866 | 118.67466 | 121.93633 | 118.00566 |

Looking good! Let's get some more info.

df.info()

<class 'pandas.core.frame.DataFrame'> DatetimeIndex: 2787 entries, 2013-10-01 to 2021-05-18 Data columns (total 5 columns): # Column Non-Null Count Dtype --- ------ -------------- ----- 0 Currency 2787 non-null object 1 Closing Price (USD) 2787 non-null float64 2 24h Open (USD) 2787 non-null float64 3 24h High (USD) 2787 non-null float64 4 24h Low (USD) 2787 non-null float64 dtypes: float64(4), object(1) memory usage: 130.6+ KB

Because we told pandas to parse the date column and set it as the index, it's not in the list of columns.

You can also see there aren't many samples.

# How many samples do we have?

len(df)

2787

We've collected the historical price of Bitcoin for the past ~8 years but there are only 2787 total samples.

This is something you'll run into with time series data problems. Often, the number of samples isn't as large as other kinds of data.

For example, collecting one sample at different time frames results in:

| 1 sample per timeframe | Number of samples per year |

|---|---|

| Second | 31,536,000 |

| Hour | 8,760 |

| Day | 365 |

| Week | 52 |

| Month | 12 |
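The counts in the table above come from simple arithmetic. Here's a quick sketch that reproduces them, assuming a 365-day year (leap years are ignored) and counting only full 7-day weeks. The `samples_per_year` name is just for illustration.

```python
# Samples per year for one sample per timeframe, assuming a 365-day (non-leap) year
DAYS_PER_YEAR = 365

samples_per_year = {
    "Second": DAYS_PER_YEAR * 24 * 60 * 60,
    "Hour": DAYS_PER_YEAR * 24,
    "Day": DAYS_PER_YEAR,
    "Week": DAYS_PER_YEAR // 7,  # 52 full weeks (with one day left over)
    "Month": 12,
}

for timeframe, count in samples_per_year.items():
    print(f"{timeframe}: {count:,}")
```

Even at one sample per hour, a single year gives fewer than 10,000 samples, which is small by deep learning standards.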

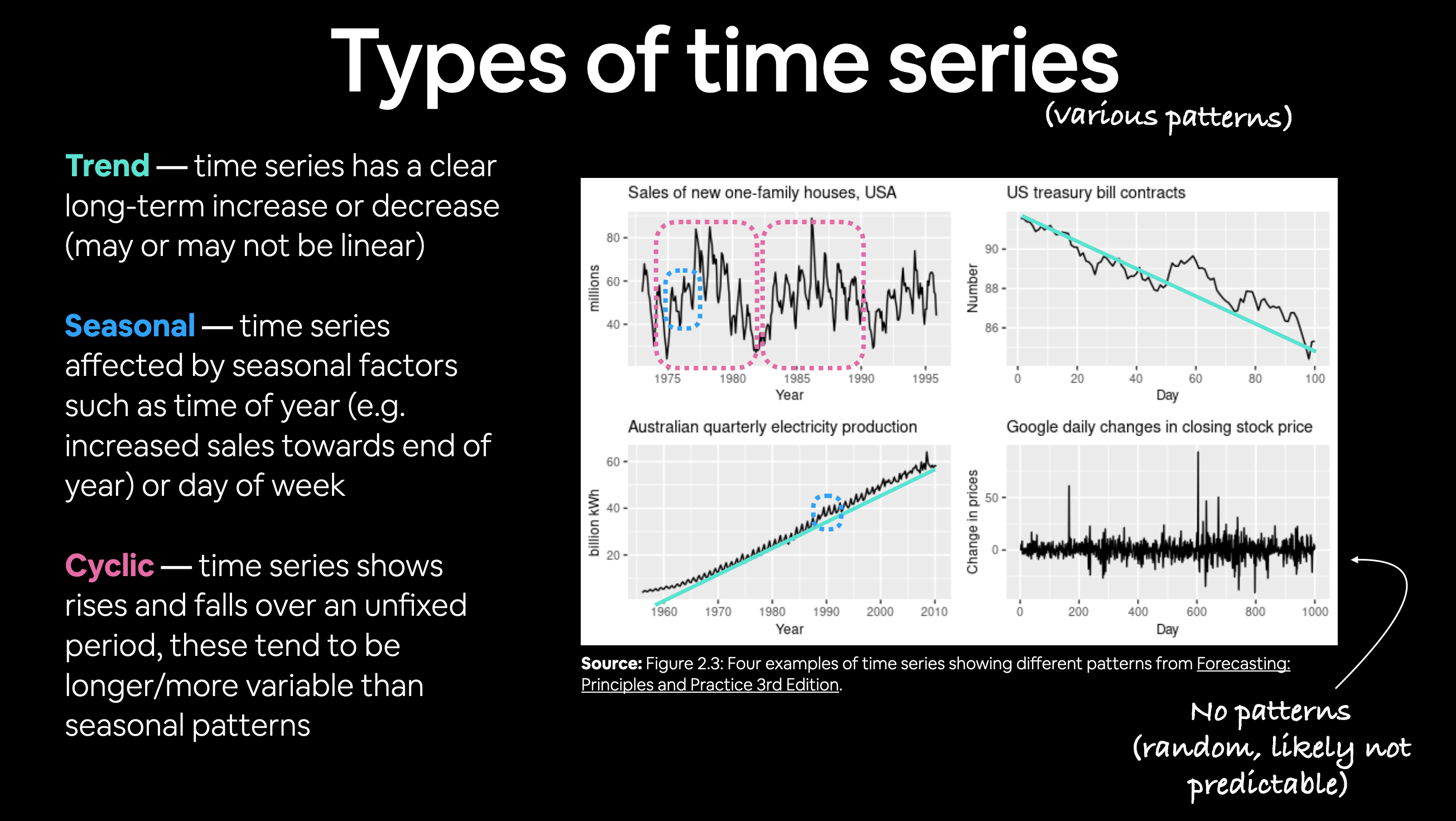

🔑 Note: The frequency at which a time series value is collected is often referred to as seasonality. This is usually measured in number of samples per year. For example, collecting the price of Bitcoin once per day would result in a time series with a seasonality of 365. Time series data collected with different seasonality values often exhibit seasonal patterns (e.g. electricity demand being higher in Summer months for air conditioning than Winter months). For more on different time series patterns, see Forecasting: Principles and Practice Chapter 2.3.

Example of different kinds of patterns you'll see in time series data. Notice the bottom right time series (Google stock price changes) has little to no patterns, making it difficult to predict. See Forecasting: Principles and Practice Chapter 2.3 for full graphic.


Deep learning algorithms usually flourish with lots of data, in the range of thousands to millions of samples.

In our case, we've got the daily prices of Bitcoin, a max of 365 samples per year.

But that doesn't mean we can't try them with our data.

To simplify, let's remove some of the columns from our data so we're only left with a date index and the closing price.

# Only want closing price for each day

bitcoin_prices = pd.DataFrame(df["Closing Price (USD)"]).rename(columns={"Closing Price (USD)": "Price"})

bitcoin_prices.head()

| Date | Price |

|---|---|

| 2013-10-01 | 123.65499 |

| 2013-10-02 | 125.45500 |

| 2013-10-03 | 108.58483 |

| 2013-10-04 | 118.67466 |

| 2013-10-05 | 121.33866 |

Much better!

But that's only five days' worth of Bitcoin prices. Let's plot everything we've got.

import matplotlib.pyplot as plt

bitcoin_prices.plot(figsize=(10, 7))

plt.ylabel("BTC Price")

plt.title("Price of Bitcoin from 1 Oct 2013 to 18 May 2021", fontsize=16)

plt.legend(fontsize=14);

Woah, looks like it would've been a good idea to buy Bitcoin back in 2014.

Importing time series data with Python's CSV module¶

If your time series data comes in CSV form you don't necessarily have to use pandas.

You can use Python's in-built csv module. And if you're working with dates, you might also want to use Python's datetime.

Let's see how we can replicate the plot we created before except this time using Python's csv and datetime modules.

📖 Resource: For a great guide on using Python's csv module, check out Real Python's tutorial on Reading and Writing CSV files in Python.

# Importing and formatting historical Bitcoin data with Python

import csv

from datetime import datetime

timesteps = []

btc_price = []

with open("/content/BTC_USD_2013-10-01_2021-05-18-CoinDesk.csv", "r") as f:

  csv_reader = csv.reader(f, delimiter=",") # read in the target CSV

  next(csv_reader) # skip first line (this gets rid of the column titles)

  for line in csv_reader:

    timesteps.append(datetime.strptime(line[1], "%Y-%m-%d")) # get the dates as dates (not strings), strptime = string parse time

    btc_price.append(float(line[2])) # get the closing price as float

# View first 10 of each

timesteps[:10], btc_price[:10]

([datetime.datetime(2013, 10, 1, 0, 0), datetime.datetime(2013, 10, 2, 0, 0), datetime.datetime(2013, 10, 3, 0, 0), datetime.datetime(2013, 10, 4, 0, 0), datetime.datetime(2013, 10, 5, 0, 0), datetime.datetime(2013, 10, 6, 0, 0), datetime.datetime(2013, 10, 7, 0, 0), datetime.datetime(2013, 10, 8, 0, 0), datetime.datetime(2013, 10, 9, 0, 0), datetime.datetime(2013, 10, 10, 0, 0)], [123.65499, 125.455, 108.58483, 118.67466, 121.33866, 120.65533, 121.795, 123.033, 124.049, 125.96116])
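The loop above picks columns by position (`line[1]` for the date, `line[2]` for the closing price). If you'd rather pick them by name, `csv.DictReader` is one option. Here's a minimal sketch on an in-memory sample: the two rows are copied from the DataFrame preview earlier, and the column order in the header is an assumption inferred from the positional indexing above. The `sample_*` names are just for illustration.

```python
import csv
import io
from datetime import datetime

# Two rows copied from the DataFrame preview above.
# Assumption: the raw file's column order is Currency, Date, Closing Price (USD), ...
sample_csv = io.StringIO(
    "Currency,Date,Closing Price (USD),24h Open (USD),24h High (USD),24h Low (USD)\n"
    "BTC,2013-10-01,123.65499,124.30466,124.75166,122.56349\n"
    "BTC,2013-10-02,125.455,123.65499,125.7585,123.63383\n"
)

sample_timesteps = []
sample_prices = []
for row in csv.DictReader(sample_csv):  # each row is a dict keyed by the header names
    sample_timesteps.append(datetime.strptime(row["Date"], "%Y-%m-%d"))
    sample_prices.append(float(row["Closing Price (USD)"]))

print(sample_timesteps, sample_prices)
```

Reading by column name means the code keeps working if the column order changes, at the cost of depending on the exact header text.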

Beautiful! Now, let's see how things look.

# Plot from CSV

import matplotlib.pyplot as plt

import numpy as np

plt.figure(figsize=(10, 7))

plt.plot(timesteps, btc_price)

plt.title("Price of Bitcoin from 1 Oct 2013 to 18 May 2021", fontsize=16)

plt.xlabel("Date")

plt.ylabel("BTC Price");

Ho ho! Would you look at that! Just like the pandas plot. And because we formatted the timesteps to be datetime objects, matplotlib displays a fantastic looking date axis.

Format Data Part 1: Creating train and test sets for time series data¶

Alrighty. What's next?

If you guessed preparing our data for a model, you'd be right.

What's the most important first step for preparing any machine learning dataset?

Scaling?

No...

Removing outliers?

No...

How about creating train and test splits?

Yes!

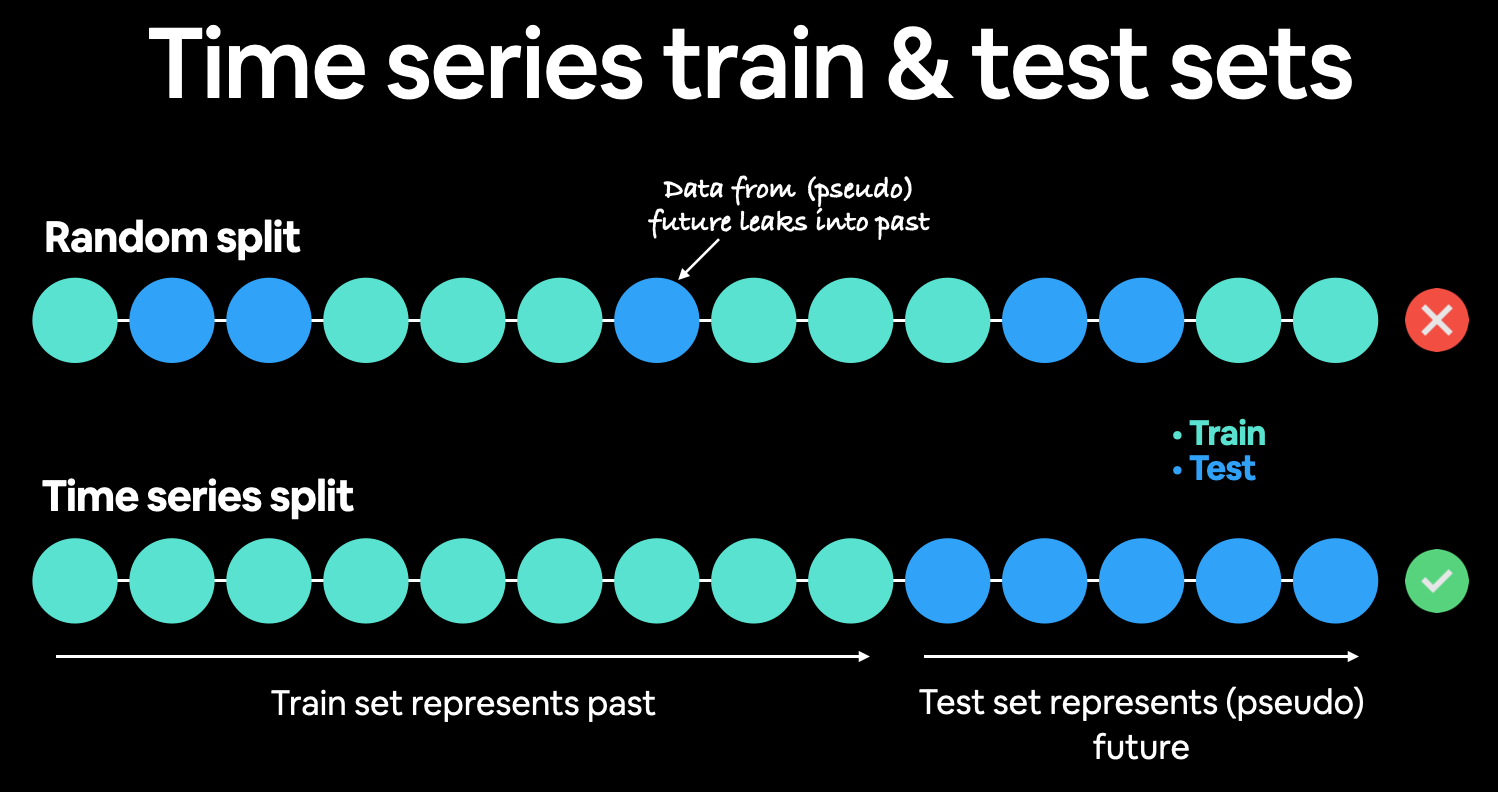

Usually, you could create a train and test split using a function like Scikit-Learn's outstanding train_test_split() but as we'll see in a moment, this doesn't really cut it for time series data.

But before we do create splits, it's worth talking about what kind of data we have.

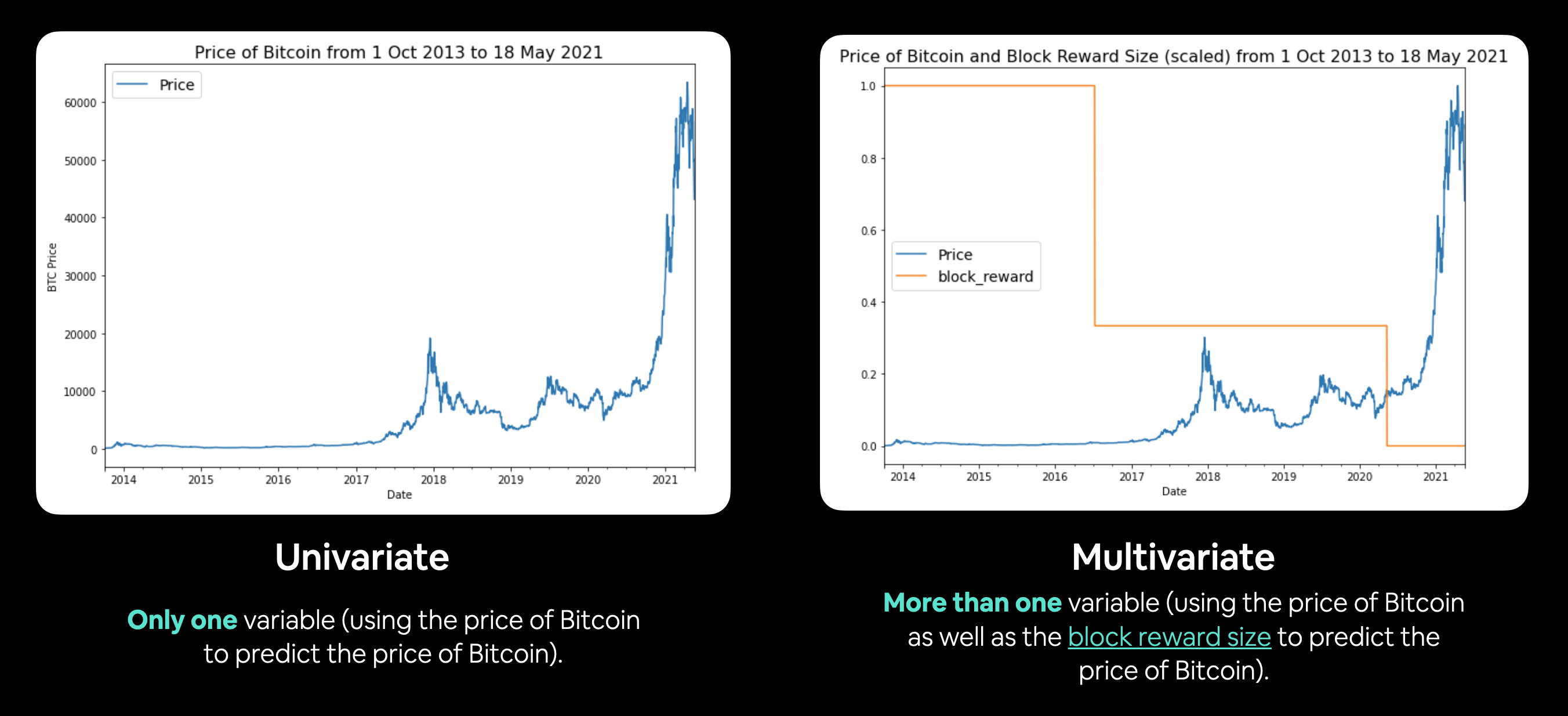

In time series problems, you'll either have univariate or multivariate data.

Can you guess what our data is?

- Univariate time series data deals with one variable, for example, using the price of Bitcoin to predict the price of Bitcoin.

- Multivariate time series data deals with more than one variable, for example, predicting electricity demand using the day of week, time of year and number of houses in a region.

Example of univariate and multivariate time series data. Univariate involves using the target to predict the target. Multivariate involves using the target as well as another time series to predict the target.


Create train & test sets for time series (the wrong way)¶

Okay, we've figured out we're dealing with a univariate time series, so we only have to make a split on one variable (for multivariate time series, you will have to split multiple variables).

How about we first see the wrong way for splitting time series data?

Let's turn our DataFrame index and column into NumPy arrays.

# Get bitcoin date array

timesteps = bitcoin_prices.index.to_numpy()

prices = bitcoin_prices["Price"].to_numpy()

timesteps[:10], prices[:10]

(array(['2013-10-01T00:00:00.000000000', '2013-10-02T00:00:00.000000000',

'2013-10-03T00:00:00.000000000', '2013-10-04T00:00:00.000000000',

'2013-10-05T00:00:00.000000000', '2013-10-06T00:00:00.000000000',

'2013-10-07T00:00:00.000000000', '2013-10-08T00:00:00.000000000',

'2013-10-09T00:00:00.000000000', '2013-10-10T00:00:00.000000000'],

dtype='datetime64[ns]'),

array([123.65499, 125.455 , 108.58483, 118.67466, 121.33866, 120.65533,

121.795 , 123.033 , 124.049 , 125.96116]))

And now we'll use the ever faithful train_test_split from Scikit-Learn to create our train and test sets.

# Wrong way to make train/test sets for time series

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(timesteps, # dates

prices, # prices

test_size=0.2,

random_state=42)

X_train.shape, X_test.shape, y_train.shape, y_test.shape

((2229,), (558,), (2229,), (558,))

Looks like the splits worked well, but let's not trust numbers on a page, let's visualize, visualize, visualize!

# Let's plot wrong train and test splits

plt.figure(figsize=(10, 7))

plt.scatter(X_train, y_train, s=5, label="Train data")

plt.scatter(X_test, y_test, s=5, label="Test data")

plt.xlabel("Date")

plt.ylabel("BTC Price")

plt.legend(fontsize=14)

plt.show();

Hmmm... what's wrong with this plot?

Well, let's remind ourselves of what we're trying to do.

We're trying to use the historical price of Bitcoin to predict future prices of Bitcoin.

With this in mind, our seen data (training set) is what?

Prices of Bitcoin in the past.

And our unseen data (test set) is?

Prices of Bitcoin in the future.

Does the plot above reflect this?

No.

Our test data is scattered all throughout the training data.

This kind of random split is okay for datasets without a time component (such as images or passages of text for classification problems) but for time series, we've got to take the time factor into account.

To fix this, we've got to split our data in a way that reflects what we're actually trying to do.

We need to split our historical Bitcoin data to have a dataset that reflects the past (train set) and a dataset that reflects the future (test set).

Create train & test sets for time series (the right way)¶

Of course, there's no way we can actually access data from the future.

But we can engineer our test set to be in the future with respect to the training set.

To do this, we can create an arbitrary point in time to split our data.

Everything before the point in time can be considered the training set and everything after the point in time can be considered the test set.

Demonstration of time series split. Rather than a traditional random train/test split, it's best to split the time series data sequentially. Meaning, the test data should be data from the future when compared to the training data.


# Create train and test splits the right way for time series data

split_size = int(0.8 * len(prices)) # 80% train, 20% test

# Create train data splits (everything before the split)

X_train, y_train = timesteps[:split_size], prices[:split_size]

# Create test data splits (everything after the split)

X_test, y_test = timesteps[split_size:], prices[split_size:]

len(X_train), len(X_test), len(y_train), len(y_test)

(2229, 558, 2229, 558)

Okay, looks like our custom made splits are the same lengths as the splits we made with train_test_split.

But again, these are numbers on a page.

And you know how the saying goes, trust one eye more than two ears.

Let's visualize.

# Plot correctly made splits

plt.figure(figsize=(10, 7))

plt.scatter(X_train, y_train, s=5, label="Train data")

plt.scatter(X_test, y_test, s=5, label="Test data")

plt.xlabel("Date")

plt.ylabel("BTC Price")

plt.legend(fontsize=14)

plt.show();

That looks much better!

Do you see what's happened here?

We're going to be using the training set (past) to train a model to try and predict values on the test set (future).

Because the test set is an artificial future, we can gauge how our model might perform on actual future data.

🔑 Note: The amount of data you reserve for your test set is not set in stone. You could have 80/20, 90/10, 95/5 splits or in some cases, you might not even have enough data to split into train and test sets (see the resource below). The point is to remember the test set is a pseudofuture and not the actual future, it is only meant to give you an indication of how the models you're building are performing.

📖 Resource: Working with time series data can be tricky compared to other kinds of data. And there are a few pitfalls to watch out for, such as how much data to use for a test set. The article 3 facts about time series forecasting that surprise experienced machine learning practitioners talks about different things to watch out for when working with time series data, I'd recommend reading it.
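If you want to try different split ratios, the slicing from earlier can be wrapped in a small helper. This is only a sketch: `chronological_split` and the toy data are hypothetical names for illustration, and the helper assumes the timesteps are already sorted from oldest to newest.

```python
def chronological_split(timesteps, values, train_fraction=0.8):
    """Split ordered time series data so the test set comes after the train set.

    Hypothetical helper for illustration. Assumes `timesteps` is sorted
    from oldest to newest.
    """
    if len(timesteps) != len(values):
        raise ValueError("timesteps and values must be the same length")
    split_index = int(train_fraction * len(values))  # same rule as split_size above
    return (timesteps[:split_index], values[:split_index],
            timesteps[split_index:], values[split_index:])

# Toy data: 10 ordered timesteps with made-up values
toy_timesteps = list(range(10))
toy_values = [step * 1.5 for step in toy_timesteps]

for fraction in (0.8, 0.9):
    toy_X_train, toy_y_train, toy_X_test, toy_y_test = chronological_split(
        toy_timesteps, toy_values, train_fraction=fraction)
    # Every test timestep should come after every train timestep
    assert max(toy_X_train) < min(toy_X_test)
    print(fraction, len(toy_X_train), len(toy_X_test))
```

The assertion inside the loop is the important part: whatever ratio you choose, the test set should sit entirely in the "future" relative to the training set.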

Create a plotting function¶

Rather than retyping matplotlib commands to continuously plot data, let's make a plotting function we can reuse later.

# Create a function to plot time series data

def plot_time_series(timesteps, values, format='.', start=0, end=None, label=None):

  """

  Plots timesteps (a series of points in time) against values (a series of values across timesteps).

  Parameters

  ---------

  timesteps : array of timesteps

  values : array of values across time

  format : style of plot, default "."

  start : where to start the plot (setting a value will index from start of timesteps & values)

  end : where to end the plot (timesteps & values are sliced up to this index)

  label : label to show on plot of values

  """

  # Plot the series

  plt.plot(timesteps[start:end], values[start:end], format, label=label)

  plt.xlabel("Time")

  plt.ylabel("BTC Price")

  if label:

    plt.legend(fontsize=14) # make label bigger

  plt.grid(True)

# Try out our plotting function

plt.figure(figsize=(10, 7))

plot_time_series(timesteps=X_train, values=y_train, label="Train data")

plot_time_series(timesteps=X_test, values=y_test, label="Test data")

Looking good!

Time for some modelling experiments.

Modelling Experiments¶

We can build almost any kind of model for our problem as long as the data inputs and outputs are formatted correctly.

However, just because we can build almost any kind of model, doesn't mean it'll perform well/should be used in a production setting.

We'll see what this means as we build and evaluate models throughout.

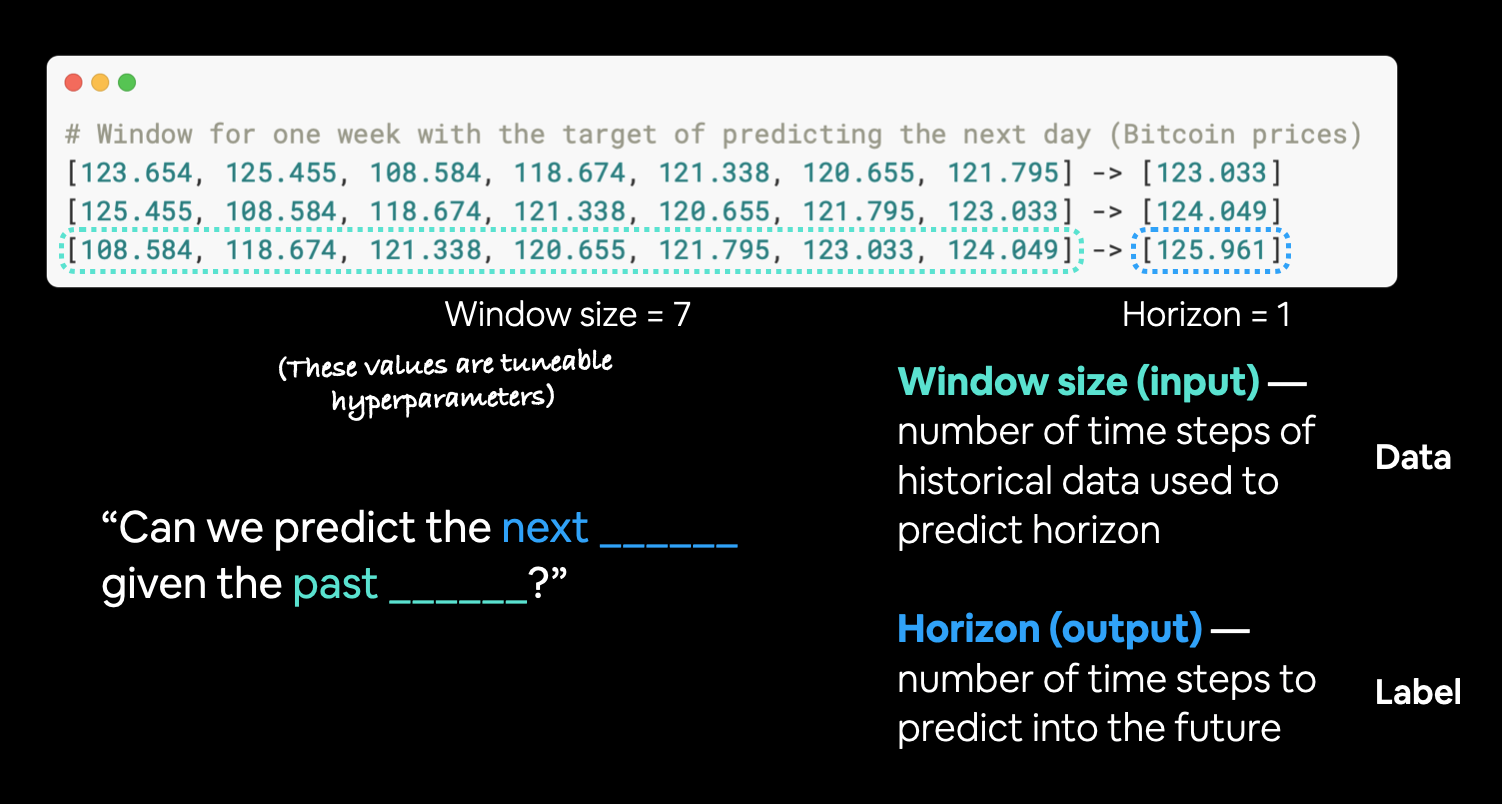

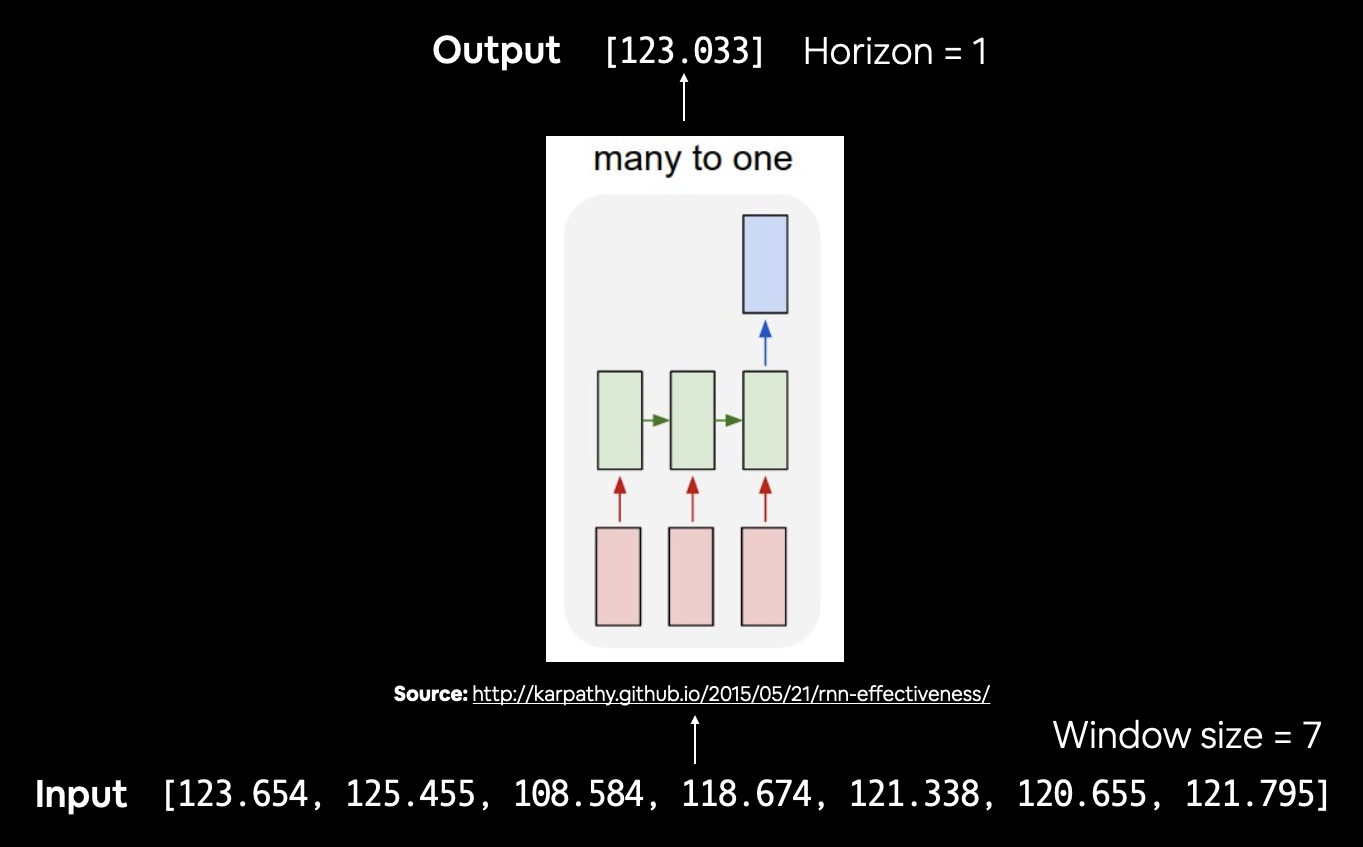

Before we discuss what modelling experiments we're going to run, there are two terms you should be familiar with, horizon and window.

- horizon = number of timesteps to predict into future

- window = number of timesteps from past used to predict horizon

For example, if we wanted to predict the price of Bitcoin for tomorrow (1 day in the future) using the previous week's worth of Bitcoin prices (7 days in the past), the horizon would be 1 and the window would be 7.

Now, how about those modelling experiments?

| Model Number | Model Type | Horizon size | Window size | Extra data |

|---|---|---|---|---|

| 0 | Naïve model (baseline) | NA | NA | NA |

| 1 | Dense model | 1 | 7 | NA |

| 2 | Same as 1 | 1 | 30 | NA |

| 3 | Same as 1 | 7 | 30 | NA |

| 4 | Conv1D | 1 | 7 | NA |

| 5 | LSTM | 1 | 7 | NA |

| 6 | Same as 1 (but with multivariate data) | 1 | 7 | Block reward size |

| 7 | N-BEATS algorithm | 1 | 7 | NA |

| 8 | Ensemble (multiple models optimized on different loss functions) | 1 | 7 | NA |

| 9 | Future prediction model (model to predict future values) | 1 | 7 | NA |

| 10 | Same as 1 (but with turkey 🦃 data introduced) | 1 | 7 | NA |

🔑 Note: To reiterate, as you can see, we can build many types of models for the data we're working with. But that doesn't mean that they'll perform well. Deep learning is a powerful technique but it doesn't always work. And as always, start with a simple model first and then add complexity as needed.

Model 0: Naïve forecast (baseline)¶

As usual, let's start with a baseline.

One of the most common baseline models for time series forecasting, the naïve model (also called the naïve forecast), requires no training at all.

That's because all the naïve model does is use the previous timestep value to predict the next timestep value.

The formula looks like this:

$$\hat{y}_{t} = y_{t-1}$$

In English:

The prediction at timestep t (y-hat) is equal to the value at timestep t-1 (the previous timestep).

Sound simple?

Maybe not.

In an open system (like a stock market or crypto market), you'll often find beating the naïve forecast with any kind of model is quite hard.

🔑 Note: For the sake of this notebook, an open system is a system where inputs and outputs can freely flow, such as a market (stock or crypto). Whereas in a closed system, the inputs and outputs are contained within the system (like a poker game with your buddies, you know the buy in and you know how much the winner can get). Time series forecasting in open systems is generally quite poor.

# Create a naïve forecast

naive_forecast = y_test[:-1] # Naïve forecast equals every value excluding the last value

naive_forecast[:10], naive_forecast[-10:] # View first 10 and last 10

(array([9226.48582088, 8794.35864452, 8798.04205463, 9081.18687849,

8711.53433917, 8760.89271814, 8749.52059102, 8656.97092235,

8500.64355816, 8469.2608989 ]),

array([57107.12067189, 58788.20967893, 58102.19142623, 55715.54665129,

56573.5554719 , 52147.82118698, 49764.1320816 , 50032.69313676,

47885.62525472, 45604.61575361]))

# Plot naive forecast

plt.figure(figsize=(10, 7))

plot_time_series(timesteps=X_train, values=y_train, label="Train data")

plot_time_series(timesteps=X_test, values=y_test, label="Test data")

plot_time_series(timesteps=X_test[1:], values=naive_forecast, format="-", label="Naive forecast");

The naive forecast looks like it's following the data well.

Let's zoom in to take a better look.

We can do so by creating an offset value and passing it to the start parameter of our plot_time_series() function.

plt.figure(figsize=(10, 7))

offset = 300 # offset the values by 300 timesteps

plot_time_series(timesteps=X_test, values=y_test, start=offset, label="Test data")

plot_time_series(timesteps=X_test[1:], values=naive_forecast, format="-", start=offset, label="Naive forecast");

When we zoom in we see the naïve forecast comes slightly after the test data. This makes sense because the naive forecast uses the previous timestep value to predict the next timestep value.

Forecast made. Time to evaluate it.

Evaluating a time series model¶

Time series forecasting often involves predicting a number (in our case, the price of Bitcoin).

And what kind of problem is predicting a number?

Ten points if you said regression.

With this known, we can use regression evaluation metrics to evaluate our time series forecasts.

The main thing we will be evaluating is: how do our model's predictions (y_pred) compare against the actual values (y_true or ground truth values)?

📖 Resource: We're going to be using several metrics to evaluate our different model's time series forecast accuracy. Many of them are sourced and explained mathematically and conceptually in Forecasting: Principles and Practice chapter 5.8, I'd recommend reading through here for a more in-depth overview of what we're going to practice.

For all of the following metrics, lower is better (for example an MAE of 0 is better than an MAE of 100).

Scale-dependent errors¶

These are metrics which can be used to compare time series values and forecasts that are on the same scale.

For example, Bitcoin historical prices in USD versus Bitcoin forecast values in USD.

| Metric | Details | Code |

|---|---|---|

| MAE (mean absolute error) | Easy to interpret (a forecast is X amount different from actual amount). Forecast methods which minimise the MAE will lead to forecasts of the median. | tf.keras.metrics.mean_absolute_error() |

| RMSE (root mean square error) | Forecasts which minimise the RMSE lead to forecasts of the mean. | tf.sqrt(tf.keras.metrics.mean_squared_error()) |
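To see what "forecasts of the median" and "forecasts of the mean" look like in practice, here's a small sketch on made-up numbers (the `observations` list and the helper names are hypothetical, not part of the notebook). It tries every whole-number forecast from 0 to 100 and keeps the one with the lowest MAE and the one with the lowest RMSE.

```python
from statistics import mean, median

observations = [1, 2, 3, 4, 100]  # made-up values with one large outlier

def mae(constant_forecast):
    """MAE when the same constant is forecast for every observation."""
    return mean(abs(y - constant_forecast) for y in observations)

def rmse(constant_forecast):
    """RMSE when the same constant is forecast for every observation."""
    return mean((y - constant_forecast) ** 2 for y in observations) ** 0.5

candidates = range(0, 101)  # every integer forecast from 0 to 100
best_for_mae = min(candidates, key=mae)
best_for_rmse = min(candidates, key=rmse)

print("Best constant for MAE:", best_for_mae, "| median:", median(observations))
print("Best constant for RMSE:", best_for_rmse, "| mean:", mean(observations))
```

The outlier (100) drags the RMSE-optimal forecast up to the mean (22), while the MAE-optimal forecast stays at the median (3). This is one reason RMSE is more sensitive to large errors than MAE.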

Percentage errors¶

Percentage errors do not have units, this means they can be used to compare forecasts across different datasets.

| Metric | Details | Code |

|---|---|---|

| MAPE (mean absolute percentage error) | Most commonly used percentage error. May explode (not work) if y=0. | tf.keras.metrics.mean_absolute_percentage_error() |

| sMAPE (symmetric mean absolute percentage error) | Recommended not to be used by Forecasting: Principles and Practice, though it is used in forecasting competitions. | Custom implementation |
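Since sMAPE is listed as a custom implementation, here's a minimal pure-Python sketch of one common definition, the mean of 200 * |y - ŷ| / (|y| + |ŷ|). Other variants exist (for example without the absolute values in the denominator), so treat the `smape` function below as an assumption rather than the definitive formula. The example prices are made up.

```python
def smape(y_true, y_pred):
    """Symmetric MAPE (in percent) using 200 * |y - y_hat| / (|y| + |y_hat|).

    Illustrative sketch only. Raises ZeroDivisionError if an actual value
    and its forecast are both 0.
    """
    terms = [
        200 * abs(actual - forecast) / (abs(actual) + abs(forecast))
        for actual, forecast in zip(y_true, y_pred)
    ]
    return sum(terms) / len(terms)

actual_prices = [100.0, 200.0, 400.0]    # made-up values
forecast_prices = [110.0, 180.0, 400.0]  # made-up forecasts
print(smape(actual_prices, forecast_prices))
```

Like MAPE, this version breaks down near zero: if an actual value and its forecast are both 0, the denominator is 0.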

Scaled errors¶

Scaled errors are an alternative to percentage errors when comparing forecast performance across different time series.

| Metric | Details | Code |

|---|---|---|

| MASE (mean absolute scaled error). | MASE equals one for the naive forecast (or very close to one). A forecast which performs better than the naïve should get <1 MASE. | See sktime's mase_loss() |

🤔 Question: There are so many metrics... which one should I pay most attention to? It's going to depend on your problem. However, because of its ease of interpretation (you can explain it in a sentence to your grandma), MAE is often a very good place to start.

Since we're going to be evaluating a lot of models, let's write a function to help us calculate evaluation metrics on their forecasts.

First we'll need TensorFlow.

# Let's get TensorFlow!

import tensorflow as tf

And since TensorFlow doesn't have a ready made version of MASE (mean absolute scaled error), how about we create our own?

We'll take inspiration from sktime's (Scikit-Learn for time series) MeanAbsoluteScaledError class which calculates the MASE.

# MASE implemented courtesy of sktime - https://github.com/alan-turing-institute/sktime/blob/ee7a06843a44f4aaec7582d847e36073a9ab0566/sktime/performance_metrics/forecasting/_functions.py#L16

def mean_absolute_scaled_error(y_true, y_pred):

  """

  Implement MASE (assuming no seasonality of data).

  """

  mae = tf.reduce_mean(tf.abs(y_true - y_pred))

  # Find MAE of naive forecast (no seasonality)

  mae_naive_no_season = tf.reduce_mean(tf.abs(y_true[1:] - y_true[:-1])) # our seasonality is 1 day (hence the shifting of 1 day)

  return mae / mae_naive_no_season

You'll notice the version of MASE above doesn't take in the training values like sktime's mase_loss(). In our case, we're comparing the MAE of our predictions on the test set to the MAE of the naïve forecast on the test set.

In practice, if we've created the function correctly, the naïve model should achieve an MASE of 1 (or very close to 1). Any model worse than the naïve forecast will achieve an MASE of >1 and any model better than the naïve forecast will achieve an MASE of <1.
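Why "very close to 1" rather than exactly 1? Here's a pure-Python sketch that mirrors the TensorFlow function above on a tiny made-up series (the `mase` function and the `toy_*` names are hypothetical, for illustration only). When the naïve forecast is scored against `toy_series[1:]`, the numerator averages four errors while the scaling term averages only the three differences inside `toy_series[1:]`.

```python
def mase(y_true, y_pred):
    """Pure-Python mirror of mean_absolute_scaled_error() above (no seasonality)."""
    mae = sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)
    # MAE of a one-step naive forecast made within y_true itself
    naive_errors = [abs(curr - prev) for prev, curr in zip(y_true[:-1], y_true[1:])]
    mae_naive = sum(naive_errors) / len(naive_errors)
    return mae / mae_naive

toy_series = [10.0, 12.0, 11.0, 13.0, 12.0]  # made-up values
toy_naive_forecast = toy_series[:-1]         # previous value predicts the next
toy_actuals = toy_series[1:]                 # values being predicted

print(mase(toy_actuals, toy_naive_forecast))  # about 1.125 for this toy series
```

On a series this short, dropping one difference shifts the ratio noticeably. On hundreds of test samples the effect is tiny, which is why the naïve forecast's MASE lands just next to 1 instead of exactly on it.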

Let's put each of our different evaluation metrics together into a function.

def evaluate_preds(y_true, y_pred):

  # Make sure float32 (for metric calculations)

  y_true = tf.cast(y_true, dtype=tf.float32)

  y_pred = tf.cast(y_pred, dtype=tf.float32)

  # Calculate various metrics

  mae = tf.keras.metrics.mean_absolute_error(y_true, y_pred)

  mse = tf.keras.metrics.mean_squared_error(y_true, y_pred) # puts an emphasis on outliers (all errors get squared)

  rmse = tf.sqrt(mse)

  mape = tf.keras.metrics.mean_absolute_percentage_error(y_true, y_pred)

  mase = mean_absolute_scaled_error(y_true, y_pred)

  return {"mae": mae.numpy(),

          "mse": mse.numpy(),

          "rmse": rmse.numpy(),

          "mape": mape.numpy(),

          "mase": mase.numpy()}

Looking good! How about we test our function on the naive forecast?

naive_results = evaluate_preds(y_true=y_test[1:],

y_pred=naive_forecast)

naive_results

{'mae': 567.9802,

'mape': 2.516525,

'mase': 0.99957,

'mse': 1147547.0,

'rmse': 1071.2362}

Alright, looks like we've got some baselines to beat.

Taking a look at the naïve forecast's MAE, it seems on average each forecast is ~$567 different than the actual Bitcoin price.

How does this compare to the average price of Bitcoin in the test dataset?

# Find average price of Bitcoin in test dataset

tf.reduce_mean(y_test).numpy()

20056.632963737226

Okay, looking at these two values is starting to give us an idea of how our model is performing:

The average price of Bitcoin in the test dataset is: $20,056 (note: average may not be the best measure here, since the highest price is over 3x this value and the lowest price is over 4x lower)

Each prediction in naive forecast is on average off by: $567
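One way to put these two numbers side by side is to express the MAE as a fraction of the average test price. This is just a quick sketch using the values printed above (the variable names are for illustration).

```python
naive_mae = 567.9802                   # 'mae' from naive_results above
mean_test_price = 20056.632963737226   # average test price computed above

relative_error = naive_mae / mean_test_price
print(f"Naive MAE is {relative_error:.2%} of the average test price")
```

That works out to roughly 2.8%. It's not the same number as the MAPE above (~2.5), because MAPE averages the percentage error of each day separately rather than dividing one average by another.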

Is this enough to say it's a good model?

That's up to your own interpretation. Personally, I'd prefer a model which was closer to the mark.

How about we try and build one?

Other kinds of time series forecasting models which can be used for baselines and actual forecasts¶

Since we've got a naïve forecast baseline to work with, it's time we start building models to try and beat it.

And because this course is focused on TensorFlow and deep learning, we're going to be using TensorFlow to build deep learning models to try and improve on our naïve forecasting results.

That being said, there are many other kinds of models you may want to look into for building baselines/performing forecasts.

Some of them may even beat our best performing models in this notebook, however, I'll leave trying them out for extra-curriculum.

| Model/Library Name | Resource |

|---|---|

| Moving average | https://machinelearningmastery.com/moving-average-smoothing-for-time-series-forecasting-python/ |

| ARIMA (Autoregressive Integrated Moving Average) | https://machinelearningmastery.com/arima-for-time-series-forecasting-with-python/ |

| sktime (Scikit-Learn for time series) | https://github.com/alan-turing-institute/sktime |

| TensorFlow Decision Forests (random forest, gradient boosting trees) | https://www.tensorflow.org/decision_forests |

| Facebook Kats (purpose-built forecasting and time series analysis library by Facebook) | https://github.com/facebookresearch/Kats |

| LinkedIn Greykite (flexible, intuitive and fast forecasts) | https://github.com/linkedin/greykite |
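To give a feel for the simplest entry in the table, here's a minimal sketch of a moving average baseline in NumPy. The helper name `moving_average_forecast` and the toy series are assumptions made up for illustration, and this is only one of several ways a moving average forecast can be defined.

```python
import numpy as np

def moving_average_forecast(series, window_size=7):
    """Predict each value as the mean of the previous `window_size` values.

    Hypothetical helper for illustration; not part of the notebook.
    Element i of the result is the forecast for series[i + window_size].
    """
    series = np.asarray(series, dtype=float)
    kernel = np.ones(window_size) / window_size
    # mode="valid" gives one mean per full window; drop the final mean
    # because there is no following value to compare it with
    return np.convolve(series, kernel, mode="valid")[:-1]

toy_series = np.arange(10, dtype=float)  # 0.0, 1.0, ..., 9.0
toy_forecast = moving_average_forecast(toy_series, window_size=3)
print(toy_forecast)       # forecasts for toy_series[3:]
print(toy_series[3:])     # the values being forecast
```

On a steadily rising series like this one, the moving average always lags behind the true value, which is worth remembering when comparing it to the naïve forecast.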

Format Data Part 2: Windowing dataset¶

Surely we'd be ready to start building models by now?

We're so close! Only one more step (really two) to go.

We've got to window our time series.

Why do we window?

Windowing is a method to turn a time series dataset into a supervised learning problem.

In other words, we want to use windows of the past to predict the future.

For example for a univariate time series, windowing for one week (window=7) to predict the next single value (horizon=1) might look like:

Window for one week (univariate time series)

[0, 1, 2, 3, 4, 5, 6] -> [7]

[1, 2, 3, 4, 5, 6, 7] -> [8]

[2, 3, 4, 5, 6, 7, 8] -> [9]

Or for the price of Bitcoin, it'd look like:

Window for one week with the target of predicting the next day (Bitcoin prices)

[123.654, 125.455, 108.584, 118.674, 121.338, 120.655, 121.795] -> [123.033]

[125.455, 108.584, 118.674, 121.338, 120.655, 121.795, 123.033] -> [124.049]

[108.584, 118.674, 121.338, 120.655, 121.795, 123.033, 124.049] -> [125.961]

Example of windows and horizons for Bitcoin data. Windowing can be used to turn time series data into a supervised learning problem.
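To make the idea concrete, here's a minimal sketch of windowing written as a plain Python loop. The helper name `sliding_windows` is hypothetical and the toy series is just the integers 0 to 9, so the output lines up with the univariate example above.

```python
def sliding_windows(series, window_size=7, horizon=1):
    """Return (window, label) pairs using a plain loop (hypothetical helper)."""
    pairs = []
    # Last start position that still leaves room for a full window + horizon
    last_start = len(series) - (window_size + horizon)
    for start in range(last_start + 1):
        window = series[start:start + window_size]
        label = series[start + window_size:start + window_size + horizon]
        pairs.append((window, label))
    return pairs

toy_series = list(range(10))  # 0, 1, ..., 9
toy_pairs = sliding_windows(toy_series)
for window, label in toy_pairs:
    print(f"{window} -> {label}")
```

A loop like this is easy to read but gets slow on long series, which is why a vectorized approach is usually preferred for real data.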


Let's build some functions which take in a univariate time series and turn it into windows and horizons of specified sizes.

We'll start with the default horizon size of 1 and a window size of 7 (these aren't necessarily the best values to use, I've just picked them).

HORIZON = 1 # predict 1 step at a time

WINDOW_SIZE = 7 # use a week worth of timesteps to predict the horizon

Now we'll write a function to take in an array and turn it into a window and horizon.

# Create function to label windowed data

def get_labelled_windows(x, horizon=1):

    """

    Creates labels for windowed dataset.

    E.g. if horizon=1 (default)

    Input: [1, 2, 3, 4, 5, 6] -> Output: ([1, 2, 3, 4, 5], [6])

    """

    return x[:, :-horizon], x[:, -horizon:]

# Test out the window labelling function

test_window, test_label = get_labelled_windows(tf.expand_dims(tf.range(8)+1, axis=0), horizon=HORIZON)

print(f"Window: {tf.squeeze(test_window).numpy()} -> Label: {tf.squeeze(test_label).numpy()}")

Window: [1 2 3 4 5 6 7] -> Label: 8

Oh yeah, that's what I'm talking about!

Now we need a way to make windows for an entire time series.

We could do this with Python for loops, however, for large time series, that'd be quite slow.

To speed things up, we'll leverage NumPy's array indexing.

Let's write a function which:

- Creates a window step of specific window size, for example:

[[0, 1, 2, 3, 4, 5, 6, 7]]

- Uses NumPy indexing to create a 2D array of multiple window steps, for example:

[[0, 1, 2, 3, 4, 5, 6, 7],

[1, 2, 3, 4, 5, 6, 7, 8],

[2, 3, 4, 5, 6, 7, 8, 9]]

- Uses the 2D array of multiple window steps to index on a target series

- Uses the get_labelled_windows() function we created above to turn the window steps into windows with a specified horizon

📖 Resource: The function created below has been adapted from Syafiq Kamarul Azman's article Fast and Robust Sliding Window Vectorization with NumPy.

# Create function to view NumPy arrays as windows

def make_windows(x, window_size=7, horizon=1):

    """

    Turns a 1D array into a 2D array of sequential windows of window_size.

    """

    # 1. Create a window of specific window_size (add the horizon on the end for later labelling)

    window_step = np.expand_dims(np.arange(window_size+horizon), axis=0)

    # print(f"Window step:\n {window_step}")

    # 2. Create a 2D array of multiple window steps (minus 1 to account for 0 indexing)

    window_indexes = window_step + np.expand_dims(np.arange(len(x)-(window_size+horizon-1)), axis=0).T # create 2D array of windows of size window_size

    # print(f"Window indexes:\n {window_indexes[:3], window_indexes[-3:], window_indexes.shape}")

    # 3. Index on the target array (time series) with 2D array of multiple window steps

    windowed_array = x[window_indexes]

    # 4. Get the labelled windows

    windows, labels = get_labelled_windows(windowed_array, horizon=horizon)

    return windows, labels
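If the indexing in step 2 feels a little magical, the sketch below replays the same broadcasting trick on a toy series so the shapes are easy to see. The toy values (0, 10, ..., 90) and the small window size of 3 are assumptions chosen only to keep the printout short.

```python
import numpy as np

window_size, horizon = 3, 1
toy_series = np.arange(10) * 10  # 0, 10, ..., 90

# Row vector of offsets within one window (plus horizon): shape (1, 4)
window_step = np.expand_dims(np.arange(window_size + horizon), axis=0)

# Column vector of window start positions: shape (7, 1)
starts = np.expand_dims(
    np.arange(len(toy_series) - (window_size + horizon - 1)), axis=0).T

# Broadcasting (7, 1) + (1, 4) gives a (7, 4) array of indexes
window_indexes = window_step + starts

print(window_indexes[:3])              # first three rows of indexes
print(toy_series[window_indexes][:3])  # the values those indexes select
print(window_indexes.shape)
```

Notice the number of rows is `len(series) - (window_size + horizon - 1)`, here 10 - 3 = 7. The same formula tells you how many windows to expect from any series.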

Phew! A few steps there... let's see how it goes.

full_windows, full_labels = make_windows(prices, window_size=WINDOW_SIZE, horizon=HORIZON)

len(full_windows), len(full_labels)

(2780, 2780)

Of course we have to visualize, visualize, visualize!

# View the first 3 windows/labels

for i in range(3):

    print(f"Window: {full_windows[i]} -> Label: {full_labels[i]}")

Window: [123.65499 125.455 108.58483 118.67466 121.33866 120.65533 121.795 ] -> Label: [123.033]

Window: [125.455 108.58483 118.67466 121.33866 120.65533 121.795 123.033 ] -> Label: [124.049]

Window: [108.58483 118.67466 121.33866 120.65533 121.795 123.033 124.049 ] -> Label: [125.96116]

# View the last 3 windows/labels

for i in range(3):

    print(f"Window: {full_windows[i-3]} -> Label: {full_labels[i-3]}")

Window: [58788.20967893 58102.19142623 55715.54665129 56573.5554719 52147.82118698 49764.1320816 50032.69313676] -> Label: [47885.62525472]

Window: [58102.19142623 55715.54665129 56573.5554719 52147.82118698 49764.1320816 50032.69313676 47885.62525472] -> Label: [45604.61575361]

Window: [55715.54665129 56573.5554719 52147.82118698 49764.1320816 50032.69313676 47885.62525472 45604.61575361] -> Label: [43144.47129086]

🔑 Note: You can find a function which achieves similar results to the ones we implemented above at tf.keras.preprocessing.timeseries_dataset_from_array(). Just like ours, it takes in an array and returns a windowed dataset. It has the benefit of returning data in the form of a tf.data.Dataset instance (we'll see how to do this with our own data later).

Turning windows into training and test sets¶

Look how good those windows look! Almost like the stained glass windows on the Sistine Chapel, well, maybe not that good but still.

Time to turn our windows into training and test splits.

We could've windowed our existing training and test splits, however, with the nature of windowing (windowing often requires an offset at some point in the data), it usually works better to window the data first, then split it into training and test sets.

Let's write a function which takes in full sets of windows and their labels and splits them into train and test splits.

# Make the train/test splits

def make_train_test_splits(windows, labels, test_split=0.2):

    """

    Splits matching pairs of windows and labels into train and test splits.

    """

    split_size = int(len(windows) * (1-test_split)) # this will default to 80% train/20% test

    train_windows = windows[:split_size]

    train_labels = labels[:split_size]

    test_windows = windows[split_size:]

    test_labels = labels[split_size:]

    return train_windows, test_windows, train_labels, test_labels

Look at that amazing function, let's test it.

train_windows, test_windows, train_labels, test_labels = make_train_test_splits(full_windows, full_labels)

len(train_windows), len(test_windows), len(train_labels), len(test_labels)

(2224, 556, 2224, 556)

Notice the default split of 80% training data and 20% testing data (this split can be adjusted if needed).
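One detail worth spelling out: the split is chronological, not random. Here's a minimal sketch on a toy list (the integers 0 to 9 stand in for ten time-ordered windows, purely as an assumption for illustration) showing that the test set is always the most recent slice.

```python
toy_windows = list(range(10))  # stand-in for 10 time-ordered windows
test_split = 0.2

# Same arithmetic as make_train_test_splits(): first 80% train, last 20% test
split_size = int(len(toy_windows) * (1 - test_split))
toy_train, toy_test = toy_windows[:split_size], toy_windows[split_size:]

print(toy_train)  # the earliest windows
print(toy_test)   # the latest windows
```

Shuffling before splitting would let the model train on windows that come after some of the test windows, which leaks information about the future into training.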

How do the first 5 samples of the training windows and labels look?

train_windows[:5], train_labels[:5]

(array([[123.65499, 125.455 , 108.58483, 118.67466, 121.33866, 120.65533,

121.795 ],

[125.455 , 108.58483, 118.67466, 121.33866, 120.65533, 121.795 ,

123.033 ],

[108.58483, 118.67466, 121.33866, 120.65533, 121.795 , 123.033 ,

124.049 ],

[118.67466, 121.33866, 120.65533, 121.795 , 123.033 , 124.049 ,

125.96116],

[121.33866, 120.65533, 121.795 , 123.033 , 124.049 , 125.96116,

125.27966]]), array([[123.033 ],

[124.049 ],

[125.96116],

[125.27966],

[125.9275 ]]))

# Check to see if same (accounting for horizon and window size)

np.array_equal(np.squeeze(train_labels[:-HORIZON-1]), y_train[WINDOW_SIZE:])

True

Make a modelling checkpoint¶

We're so close to building models. So so so close.

Because our model's performance will fluctuate from experiment to experiment, we'll want to make sure we're comparing apples to apples.

What I mean by this is in order for a fair comparison, we want to compare each model's best performance against each model's best performance.

For example, if model_1 performed incredibly well on epoch 55 but its performance fell off toward epoch 100, we want the version of the model from epoch 55 to compare to other models rather than the version of the model from epoch 100.

And the same goes for each of our other models: compare the best against the best.

To take care of this, we'll implement a ModelCheckpoint callback.

The ModelCheckpoint callback will monitor our model's performance during training and save the best model to file by setting save_best_only=True.

That way when evaluating our model we could restore its best performing configuration from file.

🔑 Note: Because of the size of the dataset (smaller than usual), you'll notice our modelling experiment results fluctuate quite a bit during training (hence the implementation of the ModelCheckpoint callback to save the best model).

Because we're going to be running multiple experiments, it makes sense to keep track of them by saving models to file under different names.

To do this, we'll write a small function to create a ModelCheckpoint callback which saves a model to a specified filename.

import os

# Create a function to implement a ModelCheckpoint callback with a specific filename

def create_model_checkpoint(model_name, save_path="model_experiments"):

    return tf.keras.callbacks.ModelCheckpoint(filepath=os.path.join(save_path, model_name), # create filepath to save model

                                              verbose=0, # only output a limited amount of text

                                              save_best_only=True) # save only the best model to file

Model 1: Dense model (window = 7, horizon = 1)¶

Finally!

Time to build one of our models.

If you think we've been through a fair bit of preprocessing before getting here, you're right.

Often, preparing data for a model is one of the largest parts of any machine learning project.

And once you've got a good model in place, you'll probably notice far more improvements from manipulating the data (e.g. collecting more, improving the quality) than manipulating the model.

We're going to start by keeping it simple, model_1 will have:

- A single dense layer with 128 hidden units and ReLU (rectified linear unit) activation

- An output layer with linear activation (or no activation)

- Adam optimizer and MAE loss function

- Batch size of 128

- 100 epochs

Why these values?

I picked them out of experimentation.

A batch size of 32 works pretty well too and we could always train for fewer epochs, but since the model runs so fast (you'll see in a second, it's because the number of samples we have isn't massive), we might as well train for more.

🔑 Note: As always, many of the values for machine learning problems are experimental. A reminder that the values you can set yourself in a machine learning algorithm (the hidden units, the batch size, horizon size, window size) are called hyperparameters. And experimenting to find the best values for hyperparameters is called hyperparameter tuning. Whereas the values learned by a model itself (patterns in the data, formally called weights & biases) are referred to as parameters.
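To see the difference in numbers, here's a small worked example counting the learned parameters for the model_1 setup listed above (7 inputs, 128 hidden units, 1 output). The helper `dense_param_count` is hypothetical and relies only on the standard fact that a fully connected layer has one weight per input-unit pair plus one bias per unit.

```python
def dense_param_count(n_inputs, n_units):
    """Weights plus biases of a fully connected layer (hypothetical helper)."""
    return n_inputs * n_units + n_units

# Hyperparameters: values we choose ourselves
WINDOW_SIZE, HORIZON, HIDDEN_UNITS = 7, 1, 128

# Parameters: values the model learns, counted per layer
hidden_params = dense_param_count(WINDOW_SIZE, HIDDEN_UNITS)  # 7*128 + 128
output_params = dense_param_count(HIDDEN_UNITS, HORIZON)      # 128*1 + 1
total_params = hidden_params + output_params

print(hidden_params, output_params, total_params)
```

So three hyperparameter choices determine how many parameters there are to learn. Change the window size or the hidden units and the count changes with them.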

Let's import TensorFlow and build our first deep learning model for time series.

import tensorflow as tf

from tensorflow.keras import layers

# Set random seed for as reproducible results as possible

tf.random.set_seed(42)

# Construct model

model_1 = tf.keras.Sequential([

layers.Dense(128, activation="relu"),

layers.Dense(HORIZON, activation="linear") # linear activation is the same as having no activation

], name="model_1_dense") # give the model a name so we can save it

# Compile model

model_1.compile(loss="mae",

optimizer=tf.keras.optimizers.Adam(),

metrics=["mae"]) # we don't necessarily need this when the loss function is already MAE

# Fit model

model_1.fit(x=train_windows, # train windows of 7 timesteps of Bitcoin prices

y=train_labels, # horizon value of 1 (using the previous 7 timesteps to predict next day)

epochs=100,

verbose=1,

batch_size=128,

validation_data=(test_windows, test_labels),

callbacks=[create_model_checkpoint(model_name=model_1.name)]) # create ModelCheckpoint callback to save best model

Epoch 1/100 18/18 [==============================] - 3s 12ms/step - loss: 780.3455 - mae: 780.3455 - val_loss: 2279.6526 - val_mae: 2279.6526 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 2/100 18/18 [==============================] - 0s 4ms/step - loss: 247.6756 - mae: 247.6756 - val_loss: 1005.9991 - val_mae: 1005.9991 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 3/100 18/18 [==============================] - 0s 4ms/step - loss: 188.4116 - mae: 188.4116 - val_loss: 923.2862 - val_mae: 923.2862 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 4/100 18/18 [==============================] - 0s 4ms/step - loss: 169.4340 - mae: 169.4340 - val_loss: 900.5872 - val_mae: 900.5872 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 5/100 18/18 [==============================] - 0s 4ms/step - loss: 165.0895 - mae: 165.0895 - val_loss: 895.2238 - val_mae: 895.2238 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 6/100 18/18 [==============================] - 0s 4ms/step - loss: 158.5210 - mae: 158.5210 - val_loss: 855.1982 - val_mae: 855.1982 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 7/100 18/18 [==============================] - 0s 5ms/step - loss: 151.3566 - mae: 151.3566 - val_loss: 840.9168 - val_mae: 840.9168 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 8/100 18/18 [==============================] - 0s 5ms/step - loss: 145.2560 - mae: 145.2560 - val_loss: 803.5956 - val_mae: 803.5956 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 9/100 18/18 [==============================] - 0s 4ms/step - loss: 144.3546 - mae: 144.3546 - val_loss: 799.5455 - val_mae: 799.5455 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 10/100 18/18 [==============================] - 0s 4ms/step - loss: 
141.2943 - mae: 141.2943 - val_loss: 763.5010 - val_mae: 763.5010 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 11/100 18/18 [==============================] - 0s 4ms/step - loss: 135.6595 - mae: 135.6595 - val_loss: 771.3357 - val_mae: 771.3357 Epoch 12/100 18/18 [==============================] - 0s 5ms/step - loss: 134.1700 - mae: 134.1700 - val_loss: 782.8079 - val_mae: 782.8079 Epoch 13/100 18/18 [==============================] - 0s 4ms/step - loss: 134.6015 - mae: 134.6015 - val_loss: 784.4449 - val_mae: 784.4449 Epoch 14/100 18/18 [==============================] - 0s 4ms/step - loss: 130.6127 - mae: 130.6127 - val_loss: 751.3234 - val_mae: 751.3234 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 15/100 18/18 [==============================] - 0s 5ms/step - loss: 128.8347 - mae: 128.8347 - val_loss: 696.5757 - val_mae: 696.5757 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 16/100 18/18 [==============================] - 0s 5ms/step - loss: 124.7739 - mae: 124.7739 - val_loss: 702.4698 - val_mae: 702.4698 Epoch 17/100 18/18 [==============================] - 0s 4ms/step - loss: 123.4474 - mae: 123.4474 - val_loss: 704.9241 - val_mae: 704.9241 Epoch 18/100 18/18 [==============================] - 0s 5ms/step - loss: 122.2105 - mae: 122.2105 - val_loss: 667.9725 - val_mae: 667.9725 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 19/100 18/18 [==============================] - 0s 4ms/step - loss: 121.7263 - mae: 121.7263 - val_loss: 718.8797 - val_mae: 718.8797 Epoch 20/100 18/18 [==============================] - 0s 4ms/step - loss: 119.2420 - mae: 119.2420 - val_loss: 657.0667 - val_mae: 657.0667 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 21/100 18/18 [==============================] - 0s 4ms/step - loss: 121.2275 - mae: 121.2275 - val_loss: 637.0330 - val_mae: 637.0330 INFO:tensorflow:Assets 
written to: model_experiments/model_1_dense/assets Epoch 22/100 18/18 [==============================] - 0s 4ms/step - loss: 119.9544 - mae: 119.9544 - val_loss: 671.2490 - val_mae: 671.2490 Epoch 23/100 18/18 [==============================] - 0s 5ms/step - loss: 121.9248 - mae: 121.9248 - val_loss: 633.3593 - val_mae: 633.3593 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 24/100 18/18 [==============================] - 0s 5ms/step - loss: 116.3666 - mae: 116.3666 - val_loss: 624.4852 - val_mae: 624.4852 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 25/100 18/18 [==============================] - 0s 4ms/step - loss: 114.6816 - mae: 114.6816 - val_loss: 619.7571 - val_mae: 619.7571 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 26/100 18/18 [==============================] - 0s 4ms/step - loss: 116.4455 - mae: 116.4455 - val_loss: 615.6364 - val_mae: 615.6364 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 27/100 18/18 [==============================] - 0s 5ms/step - loss: 116.5868 - mae: 116.5868 - val_loss: 615.9631 - val_mae: 615.9631 Epoch 28/100 18/18 [==============================] - 0s 4ms/step - loss: 113.4691 - mae: 113.4691 - val_loss: 608.0920 - val_mae: 608.0920 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 29/100 18/18 [==============================] - 0s 5ms/step - loss: 113.7598 - mae: 113.7598 - val_loss: 621.9306 - val_mae: 621.9306 Epoch 30/100 18/18 [==============================] - 0s 4ms/step - loss: 116.8613 - mae: 116.8613 - val_loss: 604.4056 - val_mae: 604.4056 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 31/100 18/18 [==============================] - 0s 4ms/step - loss: 111.9375 - mae: 111.9375 - val_loss: 609.3882 - val_mae: 609.3882 Epoch 32/100 18/18 [==============================] - 0s 4ms/step - loss: 112.4175 - mae: 112.4175 - 
val_loss: 603.0588 - val_mae: 603.0588 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 33/100 18/18 [==============================] - 0s 4ms/step - loss: 112.6697 - mae: 112.6697 - val_loss: 645.6975 - val_mae: 645.6975 Epoch 34/100 18/18 [==============================] - 0s 4ms/step - loss: 111.9867 - mae: 111.9867 - val_loss: 604.7632 - val_mae: 604.7632 Epoch 35/100 18/18 [==============================] - 0s 4ms/step - loss: 110.9451 - mae: 110.9451 - val_loss: 593.4648 - val_mae: 593.4648 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 36/100 18/18 [==============================] - 0s 5ms/step - loss: 114.4816 - mae: 114.4816 - val_loss: 608.0073 - val_mae: 608.0073 Epoch 37/100 18/18 [==============================] - 0s 4ms/step - loss: 110.2017 - mae: 110.2017 - val_loss: 597.2309 - val_mae: 597.2309 Epoch 38/100 18/18 [==============================] - 0s 5ms/step - loss: 112.2372 - mae: 112.2372 - val_loss: 637.9797 - val_mae: 637.9797 Epoch 39/100 18/18 [==============================] - 0s 4ms/step - loss: 115.1289 - mae: 115.1289 - val_loss: 587.4679 - val_mae: 587.4679 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 40/100 18/18 [==============================] - 0s 5ms/step - loss: 110.0854 - mae: 110.0854 - val_loss: 592.7117 - val_mae: 592.7117 Epoch 41/100 18/18 [==============================] - 0s 4ms/step - loss: 110.6343 - mae: 110.6343 - val_loss: 593.8997 - val_mae: 593.8997 Epoch 42/100 18/18 [==============================] - 0s 4ms/step - loss: 113.5762 - mae: 113.5762 - val_loss: 636.3674 - val_mae: 636.3674 Epoch 43/100 18/18 [==============================] - 0s 5ms/step - loss: 116.2286 - mae: 116.2286 - val_loss: 662.9264 - val_mae: 662.9264 Epoch 44/100 18/18 [==============================] - 0s 4ms/step - loss: 120.0192 - mae: 120.0192 - val_loss: 635.6360 - val_mae: 635.6360 Epoch 45/100 18/18 [==============================] - 0s 
4ms/step - loss: 110.9675 - mae: 110.9675 - val_loss: 601.9926 - val_mae: 601.9926 Epoch 46/100 18/18 [==============================] - 0s 4ms/step - loss: 111.6012 - mae: 111.6012 - val_loss: 593.3531 - val_mae: 593.3531 Epoch 47/100 18/18 [==============================] - 0s 6ms/step - loss: 109.6161 - mae: 109.6161 - val_loss: 637.0014 - val_mae: 637.0014 Epoch 48/100 18/18 [==============================] - 0s 5ms/step - loss: 109.1368 - mae: 109.1368 - val_loss: 598.4199 - val_mae: 598.4199 Epoch 49/100 18/18 [==============================] - 0s 5ms/step - loss: 112.4355 - mae: 112.4355 - val_loss: 579.7040 - val_mae: 579.7040 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 50/100 18/18 [==============================] - 0s 4ms/step - loss: 110.2108 - mae: 110.2108 - val_loss: 639.2326 - val_mae: 639.2326 Epoch 51/100 18/18 [==============================] - 0s 5ms/step - loss: 111.0958 - mae: 111.0958 - val_loss: 597.3575 - val_mae: 597.3575 Epoch 52/100 18/18 [==============================] - 0s 4ms/step - loss: 110.7351 - mae: 110.7351 - val_loss: 580.7227 - val_mae: 580.7227 Epoch 53/100 18/18 [==============================] - 0s 5ms/step - loss: 111.1785 - mae: 111.1785 - val_loss: 648.3588 - val_mae: 648.3588 Epoch 54/100 18/18 [==============================] - 0s 4ms/step - loss: 114.0832 - mae: 114.0832 - val_loss: 593.2007 - val_mae: 593.2007 Epoch 55/100 18/18 [==============================] - 0s 4ms/step - loss: 110.4910 - mae: 110.4910 - val_loss: 579.5065 - val_mae: 579.5065 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 56/100 18/18 [==============================] - 0s 5ms/step - loss: 108.0489 - mae: 108.0489 - val_loss: 807.3851 - val_mae: 807.3851 Epoch 57/100 18/18 [==============================] - 0s 4ms/step - loss: 125.0614 - mae: 125.0614 - val_loss: 674.1654 - val_mae: 674.1654 Epoch 58/100 18/18 [==============================] - 0s 4ms/step - loss: 115.4340 - 
mae: 115.4340 - val_loss: 582.2698 - val_mae: 582.2698 Epoch 59/100 18/18 [==============================] - 0s 5ms/step - loss: 110.0881 - mae: 110.0881 - val_loss: 606.7637 - val_mae: 606.7637 Epoch 60/100 18/18 [==============================] - 0s 4ms/step - loss: 108.7156 - mae: 108.7156 - val_loss: 602.3102 - val_mae: 602.3102 Epoch 61/100 18/18 [==============================] - 0s 4ms/step - loss: 108.1525 - mae: 108.1525 - val_loss: 573.9990 - val_mae: 573.9990 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 62/100 18/18 [==============================] - 0s 4ms/step - loss: 107.3727 - mae: 107.3727 - val_loss: 581.7012 - val_mae: 581.7012 Epoch 63/100 18/18 [==============================] - 0s 4ms/step - loss: 110.7667 - mae: 110.7667 - val_loss: 637.5252 - val_mae: 637.5252 Epoch 64/100 18/18 [==============================] - 0s 4ms/step - loss: 110.1539 - mae: 110.1539 - val_loss: 586.6601 - val_mae: 586.6601 Epoch 65/100 18/18 [==============================] - 0s 5ms/step - loss: 108.2325 - mae: 108.2325 - val_loss: 573.5620 - val_mae: 573.5620 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 66/100 18/18 [==============================] - 0s 5ms/step - loss: 108.6825 - mae: 108.6825 - val_loss: 572.2206 - val_mae: 572.2206 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 67/100 18/18 [==============================] - 0s 4ms/step - loss: 106.6371 - mae: 106.6371 - val_loss: 646.6349 - val_mae: 646.6349 Epoch 68/100 18/18 [==============================] - 0s 4ms/step - loss: 114.1603 - mae: 114.1603 - val_loss: 681.8561 - val_mae: 681.8561 Epoch 69/100 18/18 [==============================] - 0s 5ms/step - loss: 124.5514 - mae: 124.5514 - val_loss: 655.9885 - val_mae: 655.9885 Epoch 70/100 18/18 [==============================] - 0s 4ms/step - loss: 125.0235 - mae: 125.0235 - val_loss: 601.0032 - val_mae: 601.0032 Epoch 71/100 18/18 
[==============================] - 0s 6ms/step - loss: 110.3652 - mae: 110.3652 - val_loss: 595.3962 - val_mae: 595.3962 Epoch 72/100 18/18 [==============================] - 0s 5ms/step - loss: 107.9285 - mae: 107.9285 - val_loss: 573.7085 - val_mae: 573.7085 Epoch 73/100 18/18 [==============================] - 0s 4ms/step - loss: 109.5085 - mae: 109.5085 - val_loss: 580.4180 - val_mae: 580.4180 Epoch 74/100 18/18 [==============================] - 0s 4ms/step - loss: 108.7380 - mae: 108.7380 - val_loss: 576.1211 - val_mae: 576.1211 Epoch 75/100 18/18 [==============================] - 0s 5ms/step - loss: 107.9404 - mae: 107.9404 - val_loss: 591.1477 - val_mae: 591.1477 Epoch 76/100 18/18 [==============================] - 0s 5ms/step - loss: 109.4232 - mae: 109.4232 - val_loss: 597.8605 - val_mae: 597.8605 Epoch 77/100 18/18 [==============================] - 0s 4ms/step - loss: 107.5879 - mae: 107.5879 - val_loss: 571.9299 - val_mae: 571.9299 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 78/100 18/18 [==============================] - 0s 4ms/step - loss: 108.1598 - mae: 108.1598 - val_loss: 575.2383 - val_mae: 575.2383 Epoch 79/100 18/18 [==============================] - 0s 4ms/step - loss: 107.9175 - mae: 107.9175 - val_loss: 617.3071 - val_mae: 617.3071 Epoch 80/100 18/18 [==============================] - 0s 4ms/step - loss: 108.9510 - mae: 108.9510 - val_loss: 583.4847 - val_mae: 583.4847 Epoch 81/100 18/18 [==============================] - 0s 5ms/step - loss: 106.0505 - mae: 106.0505 - val_loss: 570.0802 - val_mae: 570.0802 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 82/100 18/18 [==============================] - 0s 4ms/step - loss: 115.6827 - mae: 115.6827 - val_loss: 575.7382 - val_mae: 575.7382 Epoch 83/100 18/18 [==============================] - 0s 5ms/step - loss: 110.9379 - mae: 110.9379 - val_loss: 659.6570 - val_mae: 659.6570 Epoch 84/100 18/18 
[==============================] - 0s 5ms/step - loss: 111.4836 - mae: 111.4836 - val_loss: 570.1959 - val_mae: 570.1959 Epoch 85/100 18/18 [==============================] - 0s 4ms/step - loss: 107.5948 - mae: 107.5948 - val_loss: 601.5945 - val_mae: 601.5945 Epoch 86/100 18/18 [==============================] - 0s 4ms/step - loss: 108.9426 - mae: 108.9426 - val_loss: 592.8107 - val_mae: 592.8107 Epoch 87/100 18/18 [==============================] - 0s 5ms/step - loss: 105.7717 - mae: 105.7717 - val_loss: 603.6169 - val_mae: 603.6169 Epoch 88/100 18/18 [==============================] - 0s 5ms/step - loss: 107.9217 - mae: 107.9217 - val_loss: 569.0500 - val_mae: 569.0500 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 89/100 18/18 [==============================] - 0s 5ms/step - loss: 106.0344 - mae: 106.0344 - val_loss: 568.9512 - val_mae: 568.9512 INFO:tensorflow:Assets written to: model_experiments/model_1_dense/assets Epoch 90/100 18/18 [==============================] - 0s 5ms/step - loss: 105.4977 - mae: 105.4977 - val_loss: 581.7681 - val_mae: 581.7681 Epoch 91/100 18/18 [==============================] - 0s 5ms/step - loss: 108.8468 - mae: 108.8468 - val_loss: 573.6023 - val_mae: 573.6023 Epoch 92/100 18/18 [==============================] - 0s 5ms/step - loss: 110.8884 - mae: 110.8884 - val_loss: 576.8247 - val_mae: 576.8247 Epoch 93/100 18/18 [==============================] - 0s 5ms/step - loss: 113.8781 - mae: 113.8781 - val_loss: 608.3018 - val_mae: 608.3018 Epoch 94/100 18/18 [==============================] - 0s 5ms/step - loss: 110.5763 - mae: 110.5763 - val_loss: 601.6047 - val_mae: 601.6047 Epoch 95/100 18/18 [==============================] - 0s 4ms/step - loss: 106.5906 - mae: 106.5906 - val_loss: 570.3652 - val_mae: 570.3652 Epoch 96/100 18/18 [==============================] - 0s 4ms/step - loss: 116.9515 - mae: 116.9515 - val_loss: 615.2581 - val_mae: 615.2581 Epoch 97/100 18/18 
[==============================] - 0s 5ms/step - loss: 108.0739 - mae: 108.0739 - val_loss: 580.3073 - val_mae: 580.3073 Epoch 98/100 18/18 [==============================] - 0s 4ms/step - loss: 108.7102 - mae: 108.7102 - val_loss: 586.6512 - val_mae: 586.6512 Epoch 99/100 18/18 [==============================] - 0s 5ms/step - loss: 109.0488 - mae: 109.0488 - val_loss: 570.0629 - val_mae: 570.0629 Epoch 100/100 18/18 [==============================] - 0s 4ms/step - loss: 106.1845 - mae: 106.1845 - val_loss: 585.9763 - val_mae: 585.9763

<keras.callbacks.History at 0x7fdcf48bd110>

Because of the small size of our data (fewer than 3,000 total samples), the model trains very fast.
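The "18/18" in each line of the training log above is the number of batches per epoch, and it follows directly from the sample count and batch size. A quick check, using the split sizes from earlier (the second calculation assumes Keras' default batch size of 32 for evaluate() and predict()):

```python
import math

n_train_windows = 2224   # len(train_windows) from the split above
n_test_windows = 556     # len(test_windows) from the split above
train_batch_size = 128   # batch_size passed to fit()
default_batch_size = 32  # Keras default when batch_size isn't specified

# Batches per training epoch (the last batch is smaller than the rest)
train_steps = math.ceil(n_train_windows / train_batch_size)

# Batches needed to run over the test windows at the default batch size
test_steps = math.ceil(n_test_windows / default_batch_size)

print(train_steps, test_steps)
```

With so few batches per epoch, each epoch takes a fraction of a second, which is why 100 epochs is cheap here.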

Let's evaluate it.

# Evaluate model on test data

model_1.evaluate(test_windows, test_labels)

18/18 [==============================] - 0s 2ms/step - loss: 585.9762 - mae: 585.9762

[585.9761962890625, 585.9761962890625]

You'll notice the model achieves the same val_loss (in this case, this is MAE) as the last epoch.

But if we load in the version of model_1 which was saved to file using the ModelCheckpoint callback, we should see an improvement in results.

# Load in saved best performing model_1 and evaluate on test data

model_1 = tf.keras.models.load_model("model_experiments/model_1_dense")

model_1.evaluate(test_windows, test_labels)

18/18 [==============================] - 0s 3ms/step - loss: 568.9512 - mae: 568.9512

[568.951171875, 568.951171875]

Much better! Due to the fluctuating performance of the model during training, loading back in the best performing model sees a sizeable improvement in MAE.
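The gap between the two evaluations comes down to which epoch's weights are being used. Here's a minimal pure-Python sketch of the "keep only the best" idea, using a handful of val_loss values copied from the training log above. It only mimics the selection logic and isn't how Keras implements ModelCheckpoint internally.

```python
# (epoch, val_loss) pairs copied from the model_1 training log above
logged_val_losses = [
    (55, 579.5065),
    (56, 807.3851),
    (88, 569.0500),
    (89, 568.9512),
    (90, 581.7681),
    (100, 585.9763),
]

best_epoch, best_val_loss = None, float("inf")
for epoch, val_loss in logged_val_losses:
    # Mimic save_best_only=True: only "save" when val_loss improves
    if val_loss < best_val_loss:
        best_epoch, best_val_loss = epoch, val_loss

final_epoch, final_val_loss = logged_val_losses[-1]
print(f"Best: epoch {best_epoch} ({best_val_loss})")
print(f"Final: epoch {final_epoch} ({final_val_loss})")
```

The best value matches the MAE of the reloaded model, while the final value matches the MAE of the model as it stood at the end of training.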

Making forecasts with a model (on the test dataset)¶

We've trained a model and evaluated it on the test data, but the project we're working on is called BitPredict 💰📈 so how do you think we could use our model to make predictions?

Since we're going to be running more modelling experiments, let's write a function which:

- Takes in a trained model (just like model_1)

- Takes in some input data (just like the data the model was trained on)

- Passes the input data to the model's predict() method

- Returns the predictions

def make_preds(model, input_data):

    """

    Uses model to make predictions on input_data.

    Parameters

    ----------

    model: trained model

    input_data: windowed input data (same kind of data model was trained on)

    Returns model predictions on input_data.

    """

    forecast = model.predict(input_data)

    return tf.squeeze(forecast) # return 1D array of predictions

Nice!

Now let's use our make_preds() and see how it goes.

# Make predictions using model_1 on the test dataset and view the results

model_1_preds = make_preds(model_1, test_windows)

len(model_1_preds), model_1_preds[:10]

(556, <tf.Tensor: shape=(10,), dtype=float32, numpy=

array([8861.711, 8769.886, 9015.71 , 8795.517, 8723.809, 8730.11 ,

8691.95 , 8502.054, 8460.961, 8516.547], dtype=float32)>)

🔑 Note: With these outputs, our model isn't forecasting yet. It's only making predictions on the test dataset. Forecasting would involve a model making predictions into the future, however, the test dataset is only a pseudofuture.

Excellent! Now we've got some prediction values, let's use the evaluate_preds() we created before to compare them to the ground truth.

# Evaluate preds

model_1_results = evaluate_preds(y_true=tf.squeeze(test_labels), # reduce to right shape

y_pred=model_1_preds)

model_1_results

{'mae': 568.95123,

'mape': 2.5448983,

'mase': 0.9994897,

'mse': 1171744.0,

'rmse': 1082.4713}

How did our model go? Did it beat the naïve forecast?

naive_results

{'mae': 567.9802,

'mape': 2.516525,

'mase': 0.99957,

'mse': 1147547.0,

'rmse': 1071.2362}

It looks like our naïve model beats our first deep model on nearly every metric.

That goes to show the power of the naïve model and the reason for having a baseline for any machine learning project.
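One way to make the comparison explicit is to check each metric in turn. The sketch below copies the two results dictionaries from the outputs above. The helper `compare_results` is a hypothetical name introduced here, and it assumes lower is better for every metric (true for the error metrics used in this notebook).

```python
model_1_results = {"mae": 568.95123, "mape": 2.5448983, "mase": 0.9994897,
                   "mse": 1171744.0, "rmse": 1082.4713}
naive_results = {"mae": 567.9802, "mape": 2.516525, "mase": 0.99957,
                 "mse": 1147547.0, "rmse": 1071.2362}


def compare_results(baseline, candidate):
    """Map each metric to whichever result has the lower (better) error."""
    return {metric: ("candidate" if candidate[metric] < baseline[metric] else "baseline")
            for metric in baseline}


winners = compare_results(baseline=naive_results, candidate=model_1_results)
print(winners)
# {'mae': 'baseline', 'mape': 'baseline', 'mase': 'candidate', 'mse': 'baseline', 'rmse': 'baseline'}
```

The naïve baseline wins on MAE, MAPE, MSE and RMSE, while model_1 is only marginally ahead on MASE.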

And of course, no evaluation would be finished without visualizing the results.

Let's use the plot_time_series() function to plot model_1_preds against the test data.

offset = 300

plt.figure(figsize=(10, 7))

# Account for the test_window offset and index into test_labels to ensure correct plotting

plot_time_series(timesteps=X_test[-len(test_windows):], values=test_labels[:, 0], start=offset, label="Test_data")

plot_time_series(timesteps=X_test[-len(test_windows):], values=model_1_preds, start=offset, format="-", label="model_1_preds")

What's wrong with these predictions?

As mentioned before, they're on the test dataset. So they're not actual forecasts.

With our current model setup, how do you think we'd make forecasts for the future?

Have a think about it for now, we'll cover this later on.

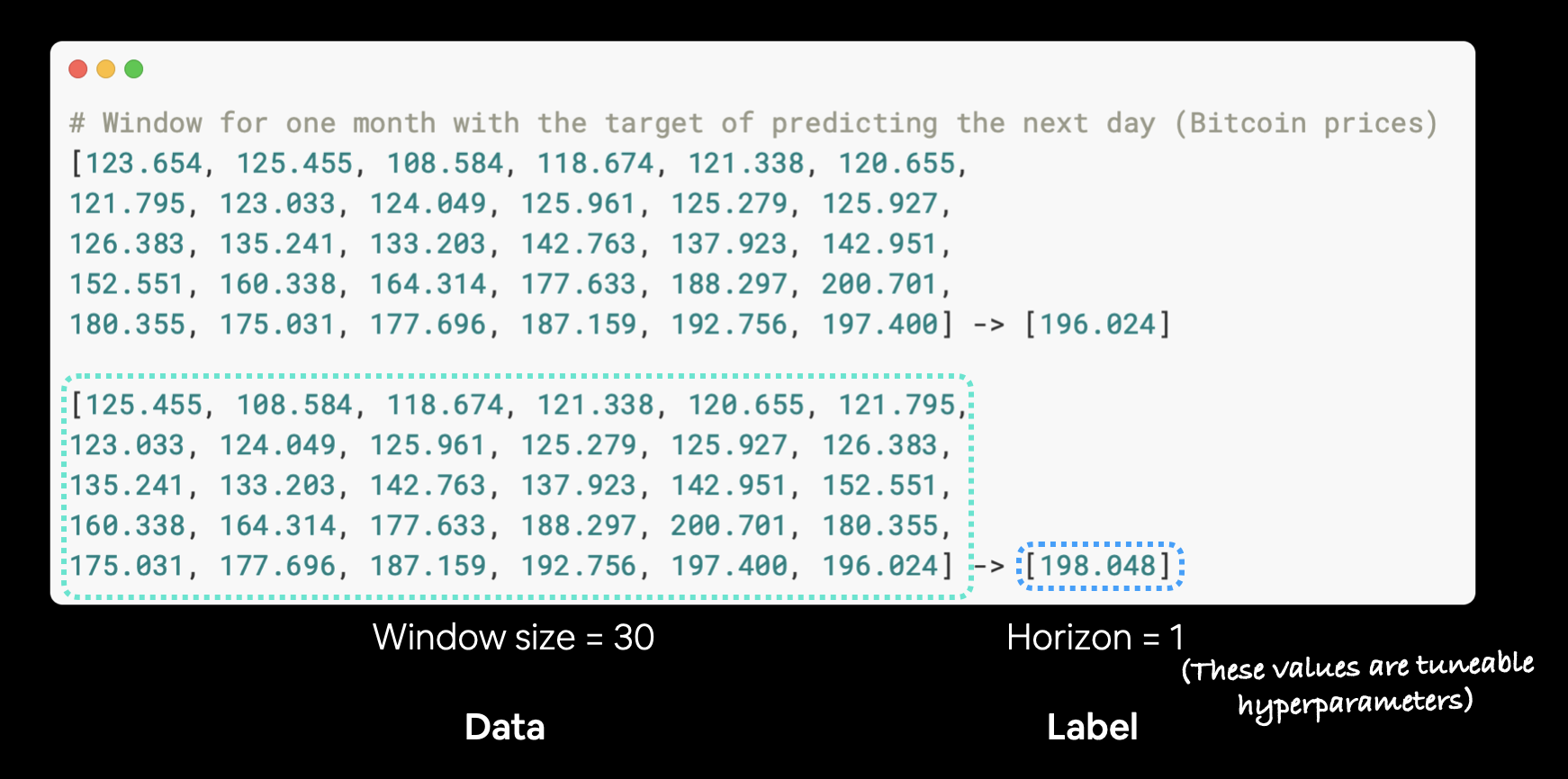

Model 2: Dense (window = 30, horizon = 1)¶

A naïve model is currently beating our handcrafted deep learning model.

We can't let this happen.

Let's continue our modelling experiments.

We'll keep the previous model architecture but use a window size of 30.

In other words, we'll use the previous 30 days of Bitcoin prices to try and predict the next day's price.

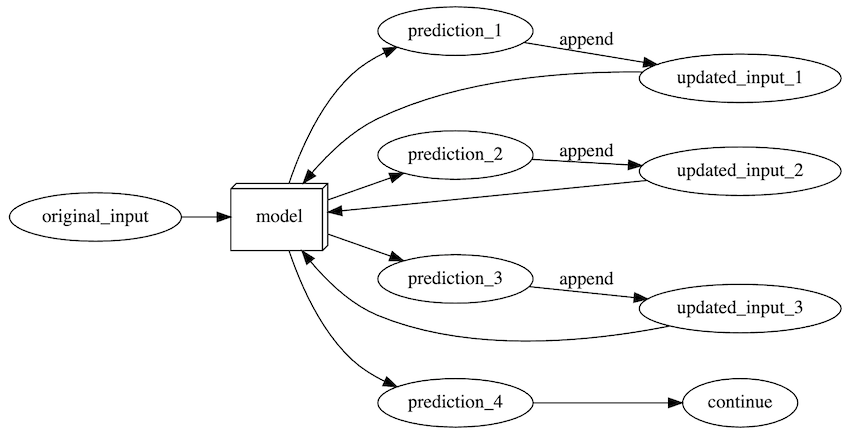

Example of Bitcoin prices windowed for 30 days to predict a horizon of 1.


🔑 Note: Recall from before, the window size (how many timesteps to use to fuel a forecast) and the horizon (how many timesteps to predict into the future) are hyperparameters. This means you can tune them to try and find values which will result in better performance.

We'll start our second modelling experiment by preparing datasets using the functions we created earlier.

HORIZON = 1 # predict one step at a time

WINDOW_SIZE = 30 # use 30 timesteps in the past

# Make windowed data with appropriate horizon and window sizes

full_windows, full_labels = make_windows(prices, window_size=WINDOW_SIZE, horizon=HORIZON)

len(full_windows), len(full_labels)

(2757, 2757)
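The window count above follows from the series length, the window size and the horizon. Here's a minimal NumPy sketch of sliding-window creation. It is not necessarily how make_windows() was implemented earlier, so `make_windows_sketch` is a hypothetical name. The placeholder series length of 2787 is an assumption inferred from the window counts in this notebook.

```python
import numpy as np


def make_windows_sketch(x, window_size=7, horizon=1):
    """Split a 1D array into (windows, labels) using a sliding window."""
    x = np.asarray(x)
    # Number of complete (window + horizon) rows that fit in the series
    n_rows = len(x) - (window_size + horizon) + 1
    step = np.arange(window_size + horizon)      # offsets within one row
    starts = np.arange(n_rows)[:, np.newaxis]    # start index of each row
    windowed = x[starts + step]                  # shape: (n_rows, window_size + horizon)
    return windowed[:, :-horizon], windowed[:, -horizon:]


# Placeholder series (assumed length 2787, values are just 0, 1, 2, ...)
series = np.arange(2787, dtype=np.float32)
windows, labels = make_windows_sketch(series, window_size=30, horizon=1)
print(windows.shape, labels.shape)  # (2757, 30) (2757, 1)
```

Increasing either the window size or the horizon reduces the number of windows, since each row needs `window_size + horizon` consecutive timesteps.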

# Make train and testing windows

train_windows, test_windows, train_labels, test_labels = make_train_test_splits(windows=full_windows, labels=full_labels)

len(train_windows), len(test_windows), len(train_labels), len(test_labels)

(2205, 552, 2205, 552)

Data prepared!

Now let's construct model_2, a model with the same architecture as model_1 as well as the same training routine.

tf.random.set_seed(42)

# Create model (same model as model 1 but data input will be different)

model_2 = tf.keras.Sequential([

layers.Dense(128, activation="relu"),

layers.Dense(HORIZON) # need to predict horizon number of steps into the future

], name="model_2_dense")

model_2.compile(loss="mae",

optimizer=tf.keras.optimizers.Adam())

model_2.fit(train_windows,

train_labels,

epochs=100,

batch_size=128,

verbose=0,

validation_data=(test_windows, test_labels),

callbacks=[create_model_checkpoint(model_name=model_2.name)])

INFO:tensorflow:Assets written to: model_experiments/model_2_dense/assets

<keras.callbacks.History at 0x7fdcf1094290>

Once again, training goes nice and fast.

Let's evaluate our model's performance.

# Evaluate model 2 preds

model_2.evaluate(test_windows, test_labels)

18/18 [==============================] - 0s 2ms/step - loss: 608.9615

608.9614868164062

Hmmm... is that the best it did?

How about we try loading in the best performing model_2 which was saved to file thanks to our ModelCheckpoint callback.

# Load in best performing model

model_2 = tf.keras.models.load_model("model_experiments/model_2_dense/")

model_2.evaluate(test_windows, test_labels)

18/18 [==============================] - 0s 2ms/step - loss: 608.9615

608.9614868164062

Loading back in the best performing model gives the same MAE as before (608.9615), which suggests the model at the end of training was also the best one saved by the checkpoint.

But let's not stop there, let's make some predictions with model_2 and then evaluate them just as we did before.

# Get forecast predictions

model_2_preds = make_preds(model_2,

input_data=test_windows)

# Evaluate results for model 2 predictions

model_2_results = evaluate_preds(y_true=tf.squeeze(test_labels), # remove 1 dimension of test labels

y_pred=model_2_preds)

model_2_results

{'mae': 608.9615,

'mape': 2.7693386,

'mase': 1.0644706,

'mse': 1281438.8,

'rmse': 1132.0065}

It looks like model_2 performs worse than the naïve model as well as model_1!

Does this mean a smaller window size is better? (I'll leave this as a challenge you can experiment with)

How do the predictions look?

offset = 300

plt.figure(figsize=(10, 7))

# Account for the test_window offset

plot_time_series(timesteps=X_test[-len(test_windows):], values=test_labels[:, 0], start=offset, label="test_data")

plot_time_series(timesteps=X_test[-len(test_windows):], values=model_2_preds, start=offset, format="-", label="model_2_preds")

Model 3: Dense (window = 30, horizon = 7)¶

Let's try and predict 7 days ahead given the previous 30 days.

First, we'll update the HORIZON and WINDOW_SIZE variables and create windowed data.

HORIZON = 7

WINDOW_SIZE = 30

full_windows, full_labels = make_windows(prices, window_size=WINDOW_SIZE, horizon=HORIZON)

len(full_windows), len(full_labels)

(2751, 2751)

And we'll split the full dataset windows into training and test sets.

train_windows, test_windows, train_labels, test_labels = make_train_test_splits(windows=full_windows, labels=full_labels, test_split=0.2)

len(train_windows), len(test_windows), len(train_labels), len(test_labels)

(2200, 551, 2200, 551)
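The split sizes above are consistent with a chronological 80/20 split, where the first 80% of windows are used for training and the rest for testing. The sketch below assumes that is how make_train_test_splits() computes its split point. `split_sizes` is a hypothetical helper for illustration.

```python
def split_sizes(n_windows, test_split=0.2):
    """Return (train_size, test_size) for a chronological (non-shuffled) split."""
    train_size = int(n_windows * (1 - test_split))  # earliest windows go to training
    return train_size, n_windows - train_size


model_2_sizes = split_sizes(2757)  # window = 30, horizon = 1
model_3_sizes = split_sizes(2751)  # window = 30, horizon = 7
print(model_2_sizes)  # (2205, 552)
print(model_3_sizes)  # (2200, 551)
```

Keeping the split chronological matters for time series, since shuffling would let the model train on data from after the period it is tested on.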

Now let's build, compile, fit and evaluate a model.

tf.random.set_seed(42)

# Create model (same as model_1 except with different data input size)

model_3 = tf.keras.Sequential([

layers.Dense(128, activation="relu"),

layers.Dense(HORIZON)

], name="model_3_dense")

model_3.compile(loss="mae",

optimizer=tf.keras.optimizers.Adam())

model_3.fit(train_windows,

train_labels,

batch_size=128,

epochs=100,

verbose=0,

validation_data=(test_windows, test_labels),

callbacks=[create_model_checkpoint(model_name=model_3.name)])

INFO:tensorflow:Assets written to: model_experiments/model_3_dense/assets

<keras.callbacks.History at 0x7fdcf1d36350>

# How did our model with a larger window size and horizon go?

model_3.evaluate(test_windows, test_labels)

18/18 [==============================] - 0s 2ms/step - loss: 1321.5201

1321.5201416015625

To compare apples to apples (best performing model to best performing model), we've got to load in the best version of model_3.

# Load in best version of model_3 and evaluate

model_3 = tf.keras.models.load_model("model_experiments/model_3_dense/")

model_3.evaluate(test_windows, test_labels)

18/18 [==============================] - 0s 2ms/step - loss: 1237.5063

1237.50634765625

In this case, the error is higher (an MAE of about 1237 compared to about 609 for model_2) because we're predicting 7 steps at a time.

This makes sense though because the further you try and predict, the larger your error will be (think of trying to predict the weather 7 days in advance).
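To illustrate how error tends to grow with the forecast horizon, here's a small sketch on a synthetic random walk (not Bitcoin data). It uses a naïve multi-step forecast, repeating the last known value for each of the 7 steps, rather than a trained model, and then measures MAE separately for each step ahead.

```python
import numpy as np

rng = np.random.default_rng(42)
# Synthetic random walk (illustrative only, not Bitcoin prices)
series = np.cumsum(rng.normal(size=3000))

HORIZON = 7
n_rows = len(series) - HORIZON

# Naive multi-step forecast: repeat the last known value for every step ahead
last_known = series[:n_rows]
y_pred = np.repeat(last_known[:, np.newaxis], HORIZON, axis=1)  # (n_rows, HORIZON)

# True values 1, 2, ..., HORIZON steps after each last known value
y_true = np.stack([series[i + 1 : i + 1 + n_rows] for i in range(HORIZON)], axis=1)

# One MAE value per step ahead (averaged over all rows)
mae_per_step = np.mean(np.abs(y_true - y_pred), axis=0)
print(mae_per_step.round(2))
```

On a random walk like this one, the MAE for 7 steps ahead should come out noticeably larger than the MAE for 1 step ahead.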

Let's make predictions with our model using the make_preds() function and evaluate them using the evaluate_preds() function.

# The predictions are going to be 7 steps at a time (this is the HORIZON size)

model_3_preds = make_preds(model_3,

input_data=test_windows)

model_3_preds[:5]

<tf.Tensor: shape=(5, 7), dtype=float32, numpy=

array([[9004.693 , 9048.1 , 9425.088 , 9258.258 , 9495.798 , 9558.451 ,

9357.354 ],

[8735.507 , 8840.304 , 9247.793 , 8885.6 , 9097.188 , 9174.328 ,

9156.819 ],

[8672.508 , 8782.388 , 9123.8545, 8770.37 , 9007.13 , 9003.87 ,

9042.724 ],

[8874.398 , 8784.737 , 9043.901 , 8943.051 , 9033.479 , 9176.488 ,

9039.676 ],

[8825.891 , 8777.4375, 8926.779 , 8870.178 , 9213.232 , 9268.156 ,

8942.485 ]], dtype=float32)>

# Calculate model_3 results - these are going to be multi-dimensional because

# we're trying to predict more than one step at a time.